Serverless Architecture: The Future of Business Computing

What Is Serverless Architecture?

Initially, the term serverless architecture referred only to applications that depend on third-party services in the cloud. These third-party apps or services would manage the server-side logic and state. Alongside it, a related term, Mobile Backend as a Service (MBaaS), also became popular. MBaaS is a form of cloud computing that makes it easier for developers to use an ecosystem of cloud-accessible databases such as Heroku and Firebase, and authentication services such as Auth0 and AWS Cognito.

Today, serverless architecture is defined by stateless compute containers and is modeled for event-driven solutions. AWS Lambda is a well-known example of serverless architecture and employs the Functions as a Service (FaaS) model of cloud computing. Platform as a Service (PaaS) architectures, popularized by Salesforce Heroku, AWS Elastic Beanstalk and Microsoft Azure, simplify application deployment for developers. Serverless architecture, or FaaS, is the next step in that direction.

FaaS provides a platform that allows developers to execute code in response to events without the complexity of building and maintaining the infrastructure. Despite the name ‘serverless’, it does require servers to run code. The term signifies that the organization or person doesn’t need to purchase, rent or provision servers or virtual machines to develop the application.
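
As a minimal sketch of what "executing code in response to events" can look like, the snippet below uses the `handler(event, context)` shape that AWS Lambda expects for Python functions. The `name` field in the event and the layout of the response are assumptions made for illustration, not a fixed contract.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per event; no server code is written here."""
    # `event` carries the trigger payload; the "name" key is a hypothetical field.
    name = event.get("name", "world")
    # The response shape below is an assumption chosen for illustration.
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


if __name__ == "__main__":
    # Local invocation for illustration; on a FaaS platform the runtime makes this call.
    print(handler({"name": "serverless"}, None))
```

Everything outside the function body, such as provisioning, routing the event and scaling, is left to the platform.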

Microservices to FaaS

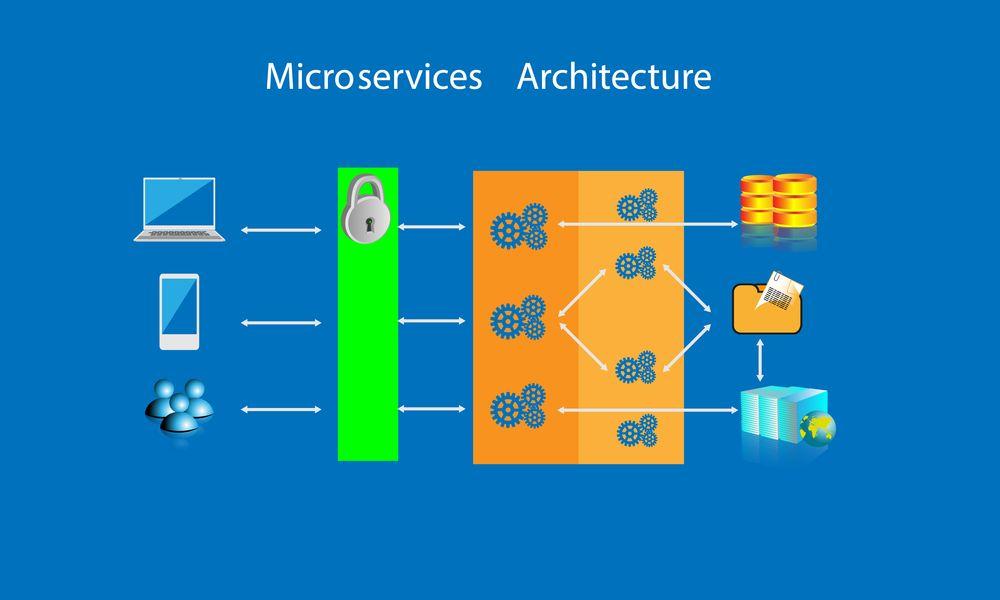

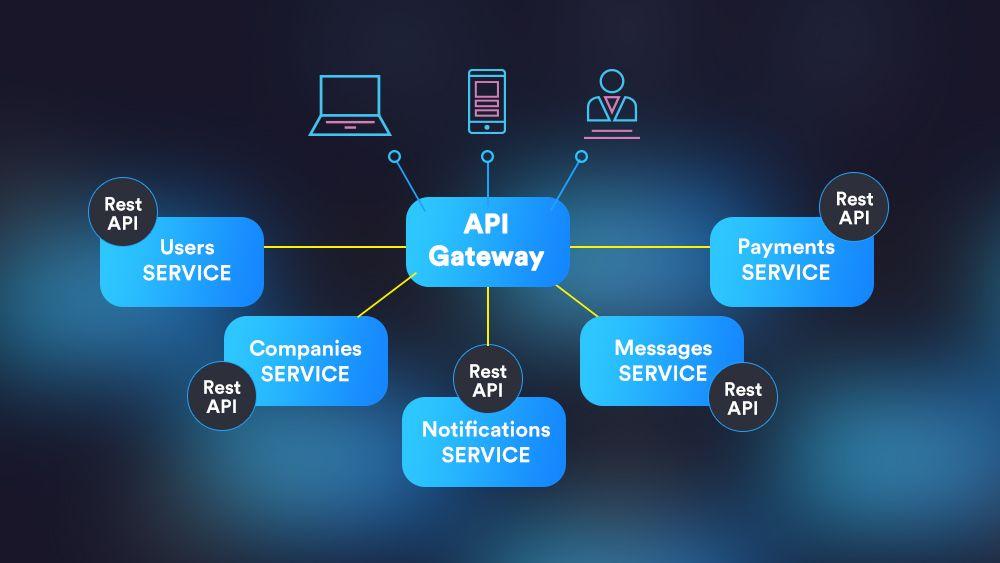

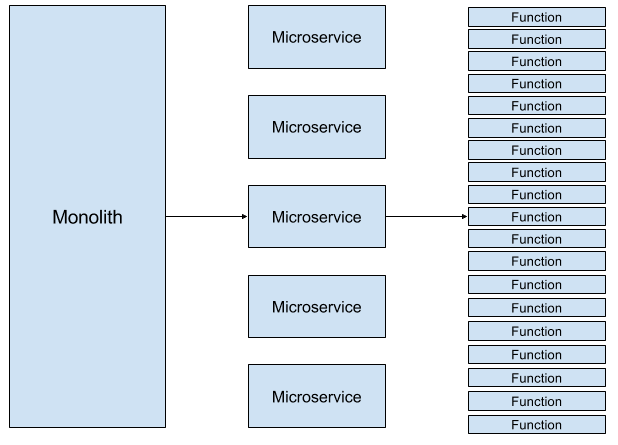

Serverless code written using FaaS can be used in conjunction with code written in a traditional server style, such as microservices. In a microservice architecture, monolithic applications are broken down into smaller services so you can develop, manage and scale them independently. FaaS takes that a step further by breaking applications down to the level of functions and events.

There will always be a place for both microservices and FaaS. For example, the code for a web application can be written partly as microservices and partly as serverless functions. Also, there are some things you can’t do with functions, such as keeping a WebSocket connection open for a bot. In such cases an API or microservice will almost always be able to respond faster, since it can keep connections to databases and other resources open and ready.

Another way to look at it: you can build a microservice by grouping a set of functions together behind an API gateway. So microservices and FaaS can coexist nicely. The end user doesn’t care whether your API is implemented as a single app or a bunch of functions; it behaves the same either way.
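
The idea of grouping functions behind a gateway can be sketched as a simple route table. This is an illustrative model only: `list_orders`, `create_order`, the `/orders` paths and the `gateway` dispatcher are hypothetical names, and a real API gateway is configured through the vendor's tooling rather than written by hand.

```python
from typing import Callable, Dict, Tuple


# Each function handles exactly one kind of request (hypothetical examples).
def list_orders(event: dict) -> dict:
    return {"statusCode": 200, "body": "all orders"}


def create_order(event: dict) -> dict:
    return {"statusCode": 201, "body": f"created {event.get('item', 'unknown')}"}


# The route table: taken together, these routes behave like one "orders" microservice.
ROUTES: Dict[Tuple[str, str], Callable[[dict], dict]] = {
    ("GET", "/orders"): list_orders,
    ("POST", "/orders"): create_order,
}


def gateway(method: str, path: str, event: dict) -> dict:
    """Dispatch a request to the function registered for (method, path)."""
    fn = ROUTES.get((method, path))
    if fn is None:
        return {"statusCode": 404, "body": "not found"}
    return fn(event)


if __name__ == "__main__":
    print(gateway("POST", "/orders", {"item": "book"}))
```

From the caller's side there is a single API surface; whether one process or several functions answer is an implementation detail.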

Going Beyond PaaS and Containers

Serverless computing, or FaaS, addresses several shortcomings of the PaaS model. PaaS has operational concerns around scaling and friction between development and operations. With most PaaS applications you still need to think about scaling, e.g. how many virtual machine instances for AWS Elastic Beanstalk or dynos for Heroku. Services like AWS Lambda, Google’s Cloud Functions or Azure Functions, by contrast, allow developers to write just the request-processing logic in a function. Other aspects of the architecture, such as middleware, bootstrapping and scaling, are handled automatically.

With a FaaS application, scaling is completely transparent. Even if you set up your PaaS application to auto-scale, you won’t be doing this at the level of individual requests unless you know the traffic trend. So a FaaS application can be much more efficient when it comes to costs.

In a FaaS system, the functions are expected to start within milliseconds to allow handling of individual requests. In a PaaS system, by contrast, there is an application thread which keeps running for a long period of time and handles many requests. This difference is visible in the pricing. FaaS services charge for the execution time of the function, while PaaS services charge for the running time of the thread in which the server application is running.
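
To make the billing difference concrete, here is a small sketch that compares billed time under the two models. The workload (10,000 requests of 200 ms each over one day) is a made-up example, and the code compares billed time only; actual cost also depends on each vendor's unit prices, which are not modeled here.

```python
def faas_billed_ms(request_durations_ms):
    """FaaS model: billed time is the sum of the individual execution times."""
    return sum(request_durations_ms)


def paas_billed_ms(uptime_ms, instances=1):
    """PaaS model: billed time is how long each server process stays up."""
    return uptime_ms * instances


DAY_MS = 24 * 60 * 60 * 1000

# Hypothetical workload: 10,000 requests of 200 ms each, spread over one day.
durations_ms = [200] * 10_000

faas_ms = faas_billed_ms(durations_ms)  # 2,000,000 ms of execution
paas_ms = paas_billed_ms(DAY_MS)        # 86,400,000 ms of uptime for one instance

if __name__ == "__main__":
    print(f"FaaS billed time: {faas_ms / 1000:.0f} s")
    print(f"PaaS billed time: {paas_ms / 1000:.0f} s")
    print(f"Share of PaaS uptime spent serving requests: {faas_ms / paas_ms:.1%}")
```

In this example the always-on process is busy for only a small fraction of the day, which is the idle time the FaaS model does not bill for.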

Another popular technology that serverless computing can disrupt is containerization. DevOps teams use containerization tools such as Docker, Mesos, and Kubernetes as an open platform that makes it easier for developers and sysadmins to push code from development to production. You don’t have to use different, clashing environments during the entire application lifecycle. Read more about containerization tools in the blog ‘5 Essential Tools For DevOps Adoption’. Like PaaS, containers by themselves don’t scale automatically at the level of individual requests. Kubernetes’ ‘Horizontal Pod Autoscaling’ adds or removes pods based on observed load metrics such as CPU utilization, which brings container platforms closer to automatic scaling.

Framework of a Serverless Architecture

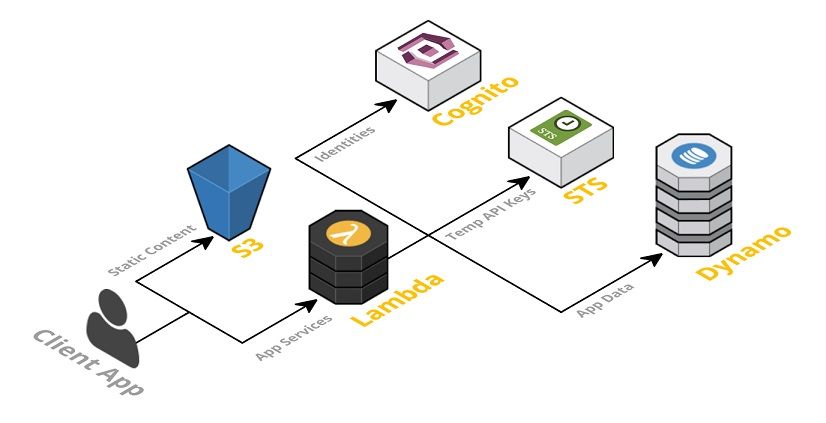

A serverless solution consists of a web server, FaaS layer, security token service (STS), user authentication and database.

Reference: https://blog.tonyfendall.com/2015/12/serverless-architectures-using-aws-lambda/

- Client Application – The UI of your application is best rendered client-side in JavaScript, which allows you to use a simple, static web server.

- Web Server – Amazon S3 provides a robust and simple web server. All of the static HTML, CSS and JavaScript files for your application can be served from S3.

- FaaS solution – It is the key enabler in serverless architecture. Some popular examples of FaaS are AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. AWS Lambda is used in this framework. The application services for logging in and accessing data will be built as Lambda functions. These functions will read and write from your database and provide JSON responses.

- Security Token Service (STS) – Generates temporary AWS credentials (an access key ID, a secret access key and a session token) for users of the application. These temporary credentials are used by the client application to invoke the AWS API (and thus invoke Lambda).

- User Authentication – AWS Cognito is an identity service which is integrated with AWS Lambda. With Amazon Cognito, you can easily add user sign-up and sign-in to your mobile and web apps. It also has the options to authenticate users through social identity providers such as Facebook, Twitter, or Amazon, with SAML identity solutions, or by using your own identity system.

- Database – AWS DynamoDB provides a fully managed NoSQL database. DynamoDB is not essential for a serverless application but is used as an example here.
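
The FaaS and database pieces of this framework can be sketched together as a Lambda-style function that reads a record and returns a JSON response. To keep the sketch runnable without AWS access, `InMemoryTable` is a hypothetical stand-in that mimics the response shape of boto3's `Table.get_item(Key=...)`; the `user_id` key and the `make_handler` helper are also assumptions for illustration.

```python
import json


class InMemoryTable:
    """Hypothetical stand-in for a DynamoDB table so the sketch runs locally.

    It mimics the response shape of boto3's Table.get_item(Key=...).
    """

    def __init__(self, items):
        self._items = {item["user_id"]: item for item in items}

    def get_item(self, Key):
        item = self._items.get(Key["user_id"])
        # Like DynamoDB, omit "Item" from the response when nothing matches.
        return {"Item": item} if item is not None else {}


def make_handler(table):
    """Build a Lambda-style handler bound to a table-like object."""

    def handler(event, context):
        user_id = event.get("user_id")
        if user_id is None:
            return {"statusCode": 400, "body": json.dumps({"error": "user_id required"})}
        result = table.get_item(Key={"user_id": user_id})
        if "Item" not in result:
            return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
        return {"statusCode": 200, "body": json.dumps(result["Item"])}

    return handler


if __name__ == "__main__":
    table = InMemoryTable([{"user_id": "u1", "name": "Ada"}])
    handler = make_handler(table)
    print(handler({"user_id": "u1"}, None))
```

Passing the table in as a parameter keeps the function stateless and makes it easy to swap the stand-in for a real table object in deployment.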

Benefits of Serverless Architecture

1. Easier operational management

The serverless platform provides a clear separation between infrastructure services and applications running on top of the platform. Automatic scaling functionality of FaaS not only reduces compute cost but also reduces operational management overheads. System Engineers and SREs can focus on managing and running the underlying platform and core services such as databases and load balancers while product engineers manage the functions running on top of the platform.

Compared to deploying an entire server, packaging and deploying a FaaS function is pretty simple. In purist terms, a serverless system won’t require continuous integration, continuous delivery or containerization tools. Developers can write the code directly in the vendor console. Thus a fully serverless solution can, in principle, require zero system administration.

2. Faster innovation

Product engineers can innovate at a rapid pace because serverless architecture takes away most of the system engineering problems in the underlying platform. Less time spent on operations translates into smoother adoption of DevOps and agile methodologies. Teams have the flexibility to experiment with new things and update their technology stack. Also, the usual concerns of an internet-facing application, such as identity management and storage, are either exposed to the functions as services or handled by the underlying middleware. Product engineers can concentrate on developing the actual business logic of the application.

3. Reduced operational costs

Similar to IaaS and PaaS, reducing infrastructure and human resource costs is a basic advantage of serverless architecture. In a serverless solution, you pay for managed servers, databases and application logic. AWS Lambda bills you only while the function is running, so you pay only for the time your function executes and the resources it needs to execute. As a result, the cost of running Lambda functions can be as much as 95% lower than running a server with a container on it for the entire month. Some services that cost thousands of dollars to run on rented AWS servers have reportedly come down to less than $10.

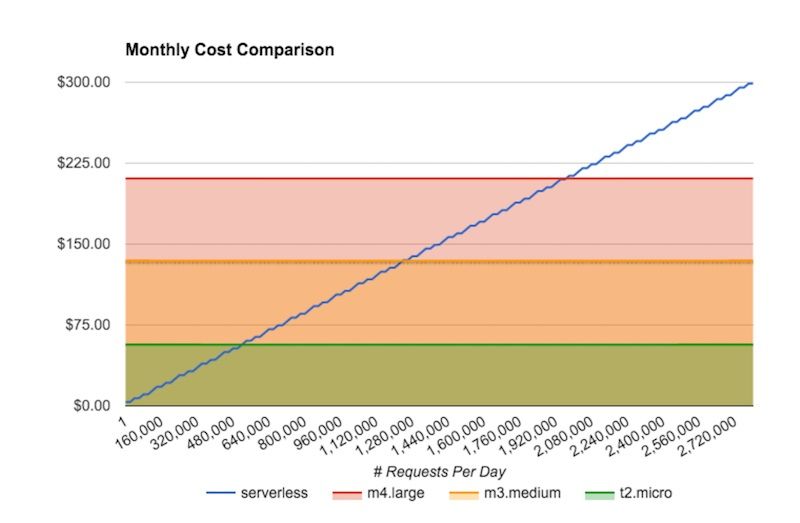

Stelligent explains this point in its blog post ‘Serverless Delivery: Architecture (Part 1)’. Applications running on AWS Lambda behind AWS API Gateway are cost-effective only when the transaction volume is low. At higher scale, using API Gateway becomes cost-prohibitive. Almost 98% of the cost of the serverless deployment is due to API Gateway, while the cost of AWS Lambda as FaaS is negligible.

Reference – https://stelligent.com/2016/03/17/serverless-delivery-architecture-part-1/

The diagram above compares the pricing for running a Node.js application with Lambda and API Gateway versus a pair of EC2 instances and an ELB. Notice that for the m4.large, the break-even point is around two million requests per day.
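
The break-even point in such a comparison follows from simple arithmetic: the serverless cost grows with the number of requests, while the server cost is fixed. The sketch below shows the calculation with placeholder prices; the figures 12.0 and 4.0 are made up for illustration and are not actual AWS prices or the values behind the diagram.

```python
def serverless_cost_per_day(requests_per_day, cost_per_million_requests):
    """Pay-per-use model: cost scales linearly with request volume."""
    return requests_per_day / 1_000_000 * cost_per_million_requests


def break_even_requests(fixed_cost_per_day, cost_per_million_requests):
    """Daily request volume at which both options cost the same."""
    return fixed_cost_per_day / cost_per_million_requests * 1_000_000


def cheaper_option(requests_per_day, fixed_cost_per_day, cost_per_million_requests):
    """Return which option costs less at the given volume (ties go to servers)."""
    variable = serverless_cost_per_day(requests_per_day, cost_per_million_requests)
    return "serverless" if variable < fixed_cost_per_day else "servers"


if __name__ == "__main__":
    # Placeholder prices: 12.0 per day for servers, 4.0 per million serverless requests.
    print(break_even_requests(12.0, 4.0))
    print(cheaper_option(500_000, 12.0, 4.0))
    print(cheaper_option(5_000_000, 12.0, 4.0))
```

Below the break-even volume the pay-per-use model wins; above it, fixed-price servers become the cheaper choice.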

Drawbacks of Serverless Architecture

1. Problems due to third-party API system

Vendor control, multitenancy problems, vendor lock-in and security concerns are some of the problems that come with using third-party APIs. Giving up system control while implementing APIs can lead to system downtime, forced API upgrades, loss of functionality, unexpected limits and cost changes. The multitenancy problem is also seen in other cloud computing frameworks. Salesforce (PaaS) imposes governor limits because of its multitenant cloud structure, and developers have to avoid certain mistakes while using Salesforce. Multitenant solutions can have problems with security, robustness, and performance.

Switching vendors in serverless architecture can be a little tricky. While switching, you would need to update your tools, code and design. Migrating the serverless functions themselves from one vendor to another is easier than migrating all the other services with which a function must integrate. Parse is an example of a BaaS that closed down and left many developers stranded. Also, having many APIs in your serverless system exposes your ecosystem to malicious attacks, and each API increases the number of security implementations you need to maintain.

2. Lack of operational tools

Developers are dependent on vendors for debugging and monitoring tools. Debugging distributed systems is difficult and usually requires access to a significant amount of relevant metrics to identify the root cause. IaaS and PaaS systems have exposure to traffic shaping and load balancing techniques such as Geo DNS and Zuul. In serverless architecture, we lose this flexibility because user requests are handled by opaque load balancers such as AWS API Gateway. In such cases, the platform chooses a Lambda function deployed in the same region where the request arrives. So when there are outages in caching or data store services, it is hard to steer part of the traffic to other regions.

As serverless architecture is adopted by more organizations, we may see more mature debugging tools. Platforms also need to expose more operational metrics than they do today. Distributed tracing is a helpful technique for understanding how a request fans out across multiple services, and it helps in debugging systems based on the microservices architecture.
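
The core idea of distributed tracing can be sketched in a few lines: one trace ID is created at the entry point and passed along to every downstream call, so the fan-out of a request can be reconstructed afterwards. This is a toy in-memory model; `record_span`, `SPANS` and the three functions are hypothetical names, and a real system would send spans to a tracing backend rather than a list.

```python
import uuid

SPANS = []  # in-memory span log, standing in for a tracing backend


def record_span(trace_id, name, parent=None):
    """Record one unit of work and return its span ID."""
    span_id = uuid.uuid4().hex[:8]
    SPANS.append({"trace_id": trace_id, "span_id": span_id, "name": name, "parent": parent})
    return span_id


def fetch_profile(event):
    record_span(event["trace_id"], "fetch_profile", parent=event["parent_span"])
    return {"user": event["user"]}


def fetch_orders(event):
    record_span(event["trace_id"], "fetch_orders", parent=event["parent_span"])
    return []


def entry_point(event):
    """Start (or continue) a trace, then fan out to two downstream functions."""
    trace_id = event.get("trace_id") or uuid.uuid4().hex
    span_id = record_span(trace_id, "entry_point")
    downstream = {"trace_id": trace_id, "parent_span": span_id, "user": event["user"]}
    return {
        "trace_id": trace_id,
        "profile": fetch_profile(downstream),
        "orders": fetch_orders(downstream),
    }


if __name__ == "__main__":
    entry_point({"user": "ada"})
    for span in SPANS:
        print(span)
```

Because every span carries the same trace ID and a pointer to its parent, the spans can be grouped and ordered into a call tree for a single request.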

3. Architectural complexity

Distributed computing architectures are complex and time-consuming to build. This applies to microservices and serverless architectures in equal measure. Decisions about how small (granular) a function should be take time to assess, implement and test. There should be a balance in the number of functions an application calls: managing too many functions gets cumbersome, while ignoring granularity ends up creating mini-monoliths.

Serverless architecture inherently provides the benefits of low operational cost, scaling and shorter time to market, which gives it the potential to quickly become one of the foundational pieces of modern distributed systems. But it is still at a nascent stage, and organizations adopting serverless systems should take into consideration the over-reliance on third-party APIs and the architectural complexity. Organizations already using cloud technologies will be the early adopters, and we might see a marketplace for serverless functions and widespread adoption of serverless architectures. Blockchain, IoT, gaming and enterprise middleware are some of the potential future applications of serverless architecture.

Technology is constantly evolving. You need a reliable, trusted partner to help you adapt to the latest technologies and future-proof your investments. Reach out to a company like Maruti Techlabs, offering top IT staff augmentation services for reliable, cost-effective, and sustainable solutions. Our enterprise application modernization services help businesses adopt new technologies with our custom migration and modernization services. We can help you modernize your applications and infrastructure while reducing costs and improving the end-user experience. Give us a call today and discover how you can modernize your business.