Building a Predictive Model using Python Framework: A Step-by-Step Guide

Predictive modeling lies at the heart of transforming raw data into actionable insights. It allows businesses to anticipate customer behavior, detect risks, and make informed, data-driven decisions.

Python has become the preferred language for predictive modeling due to its simplicity, versatility, and vast ecosystem of libraries that streamline the entire process.

With tools like scikit‑learn, TensorFlow, and PyTorch, developers can easily experiment with regression, classification, and forecasting techniques. Python’s strong support for data manipulation through Pandas and NumPy, coupled with visualization options in Matplotlib and Seaborn, makes it a one‑stop solution for the entire workflow.

Additionally, Python integrates seamlessly with big‑data and cloud environments, enabling the deployment of models into production with minimal friction. Whether applied to finance, healthcare, or marketing mastering predictive modeling in Python offers professionals a competitive edge.

According to eSparkBiz, 90.6 % of data scientists use Python for data science tasks, making it the most widely preferred language in the field.

This blog explores Python‑based predictive modeling through a step‑by‑step process, covering EDA, feature engineering, model training, validation, deployment, libraries, workflows, and real‑world use cases for multiple industries.

When was the last time a piece of technology’s popularity grew exponentially, suddenly becoming a necessity for businesses and people? We see predictive analytics – tech that has been around for decades worth implementing into everyday life. To know why that has happened, let’s consider the reasons why:

The ability to use predictive algorithms is becoming more and more valuable for organizations of all sizes. It is particularly true for small businesses, which can use predictive programming to increase their competitive advantage by better understanding their customers and improving their sales.

Predictive programming has become a considerable part of businesses in the last decade. Companies turn to predictive programming to identify issues and opportunities, predict customer behavior and trends, and make better decisions. Fraud detection is one of the everyday use cases that regularly suggests the importance of predictive modeling in machine learning.

Combining multiple data sets helps to spot anomalies and prevent criminal behavior. The ability to conduct real-time remote analysis can improve fraud detection scenarios and make security more effective.

Additional Read – Top 17 Real-Life Predictive Analytics Use Cases

Here’s how predictive modeling works:

Data collection can take up a considerable amount of your time. However, the more data you have, the more accurate your predictions.

In the future, you’ll need to be working with data from multiple sources, so there needs to be a unitary approach to all that data. Hence, the data collection phase is crucial to make accurate predictions. Before doing that, ensure that you have the proper infrastructure in place and that your organization has the right team to get the job done.

Hiring Python developers can be a great solution if you lack the necessary resources or expertise to develop predictive models and implement data manipulation and analysis. Python is a popular data science and machine learning programming language due to its extensive libraries and frameworks like NumPy, pandas, scikit-learn, TensorFlow, and PyTorch.

It is observed that most data scientists spend 50% of their time collecting and exploring their data for the project. Doing this will help you identify and relate your data with your problem statement, eventually leading you to design more robust business solutions.

One of the critical challenges for data scientists is dealing with the massive amounts of data they process. Identifying the best dataset for your model is essential for good performance. This is where data cleaning comes in.

Data cleaning involves removing redundant and duplicate data from our data sets, making them more usable and efficient.

Converting data requires some data manipulation and preparation, allowing you to uncover valuable insights and make critical business decisions. You will also need to be concerned with the cleaning and filter part. Sometimes, data is stored in an unstructured format — such as a CSV file or text — and you have to clean it up and put it into a structured layout to analyze it.

Feature engineering is a machine learning technique using domain knowledge to pull out features from raw data. In other words, feature engineering transforms raw observations into desired features using statistical or machine learning methods.

A “feature,” as you may know, is any quantifiable input that may be utilized in a predictive model, such as the color of an object or the tone of someone’s voice. When feature engineering procedures are carried out effectively, the final dataset is optimal and contains all relevant aspects that impact the business challenge. These datasets generate the most accurate predictive modeling tasks and relevant insights.

You can use various predictive analytics models such as classification or clustering models. This is where predictive model building begins. In this step of predictive analysis, we employ several algorithms to develop prediction models based on the patterns seen.

Open-source programming languages like Python and R consist of countless libraries that can efficiently help you develop any form of machine learning model. It is also essential to reexamine the existing data and determine if it is the right kind for your predictive model.

For example, do you have the correct data in the first place? IT and marketing teams often have the necessary information, but they don’t know how best to frame it in a predictive model. Reframing existing data can change how an algorithm predicts outcomes.

In this step, we will check the efficiency of our model. Consider using the test dataset to determine the validity and accuracy of your prediction model. If the precision is good, you must repeat the feature engineering and data preprocessing steps until good results are achieved.

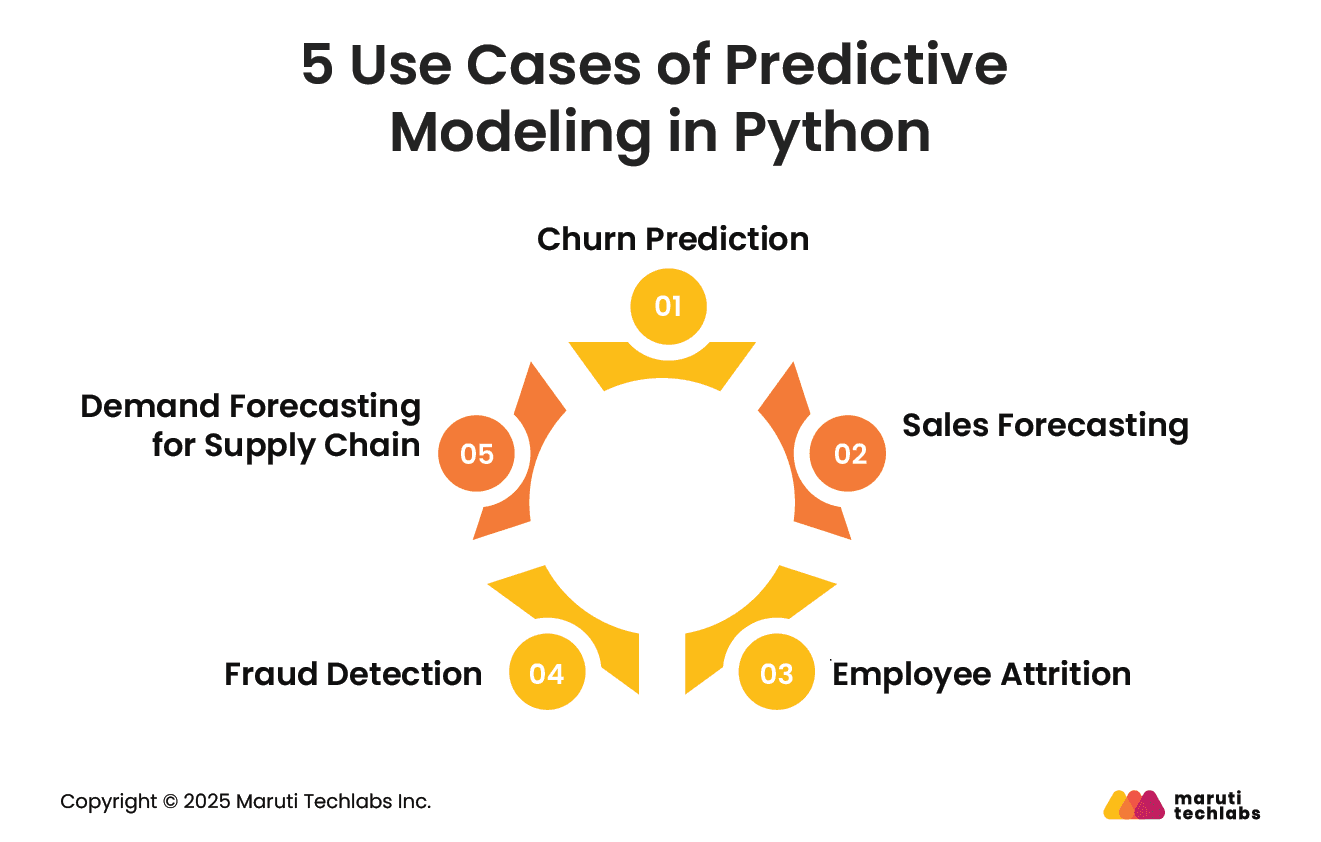

Here are the top use cases of predictive modeling.

Financial institutions leverage predictive modeling to identify customers at risk of closing accounts or discontinuing services.

By analyzing historical transactions, demographics, and engagement data, banks can proactively target retention campaigns, offer personalized solutions, and minimize revenue loss. It ensures customer lifetime value is maximized through data-driven interventions.

Predictive models help companies anticipate future sales by analyzing historical performance, market trends, seasonality, and promotional activity.

Businesses can optimize inventory, align marketing strategies, and plan production efficiently. Accurate sales forecasting with Python empowers companies to make timely decisions that reduce costs, avoid overstocking, and capitalize on high-demand periods.

HR departments use predictive modeling to identify employees most likely to resign. Factors like performance ratings, tenure, engagement scores, and compensation are analyzed to uncover patterns.

Python-based models help organizations design retention programs, reduce hiring costs, and maintain workforce stability by addressing potential turnover before it impacts productivity.

Fraud detection models analyze vast financial transaction datasets to spot unusual behaviors and anomalies in real time. Using Python’s machine learning capabilities, organizations can detect suspicious activity in banking, insurance, and e-commerce.

This reduces financial risks and improves security while ensuring legitimate transactions remain unaffected.

Predictive modeling enhances supply chain efficiency by forecasting product demand based on historical sales, external market conditions, and seasonal variations.

Python models help businesses prevent stockouts, optimize logistics, and reduce operational costs, ensuring products are available where and when they are needed most.

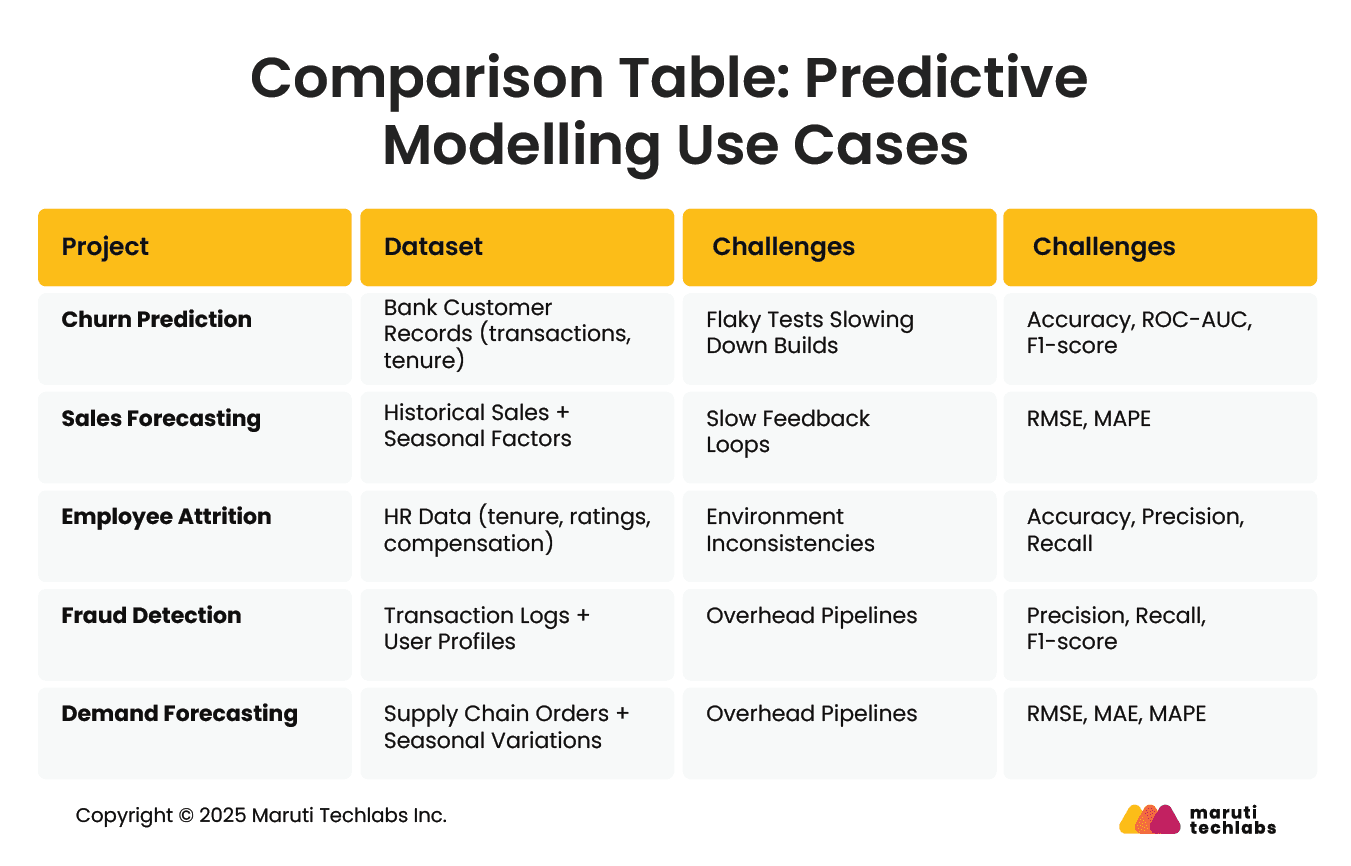

This table provides a quick reference for selecting datasets, libraries, and evaluation metrics for everyday predictive modeling tasks. Accuracy and classification metrics dominate in churn, attrition, and fraud detection projects.

For time-series tasks like sales or demand forecasting, error-based metrics (RMSE, MAE, MAPE) are preferred. The combination of Pandas for preprocessing, Scikit-learn/XGBoost for traditional models, and TensorFlow or PyTorch for deep learning ensures a robust, end-to-end workflow suitable for production.

To start with python modeling, you must first deal with data collection and exploration. Therefore, the first step to building a predictive analytics model is importing the required libraries and exploring them for your project.

To analyze the data, one needs to load the data within the program, for which we can use one of the python libraries called “Pandas.”

The following code illustrates how you can load your data from a CSV file into the memory for performing the following steps.

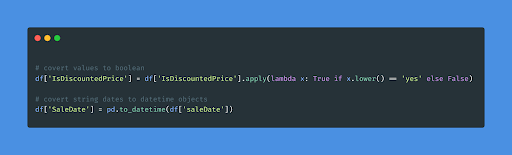

Now that you have your dataset, it’s time to look at the description and contents of the dataset using df.info() and df.head(). Moreover, as you noticed, the target variable is changed to (1/0) rather than (Yes/No), as shown in the below snippet.

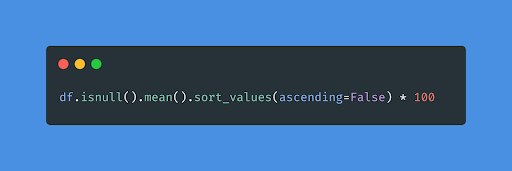

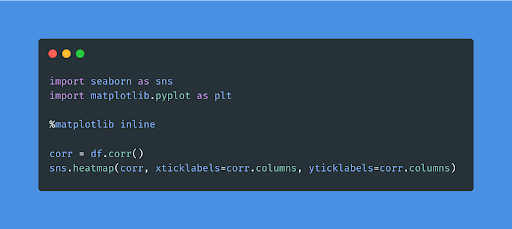

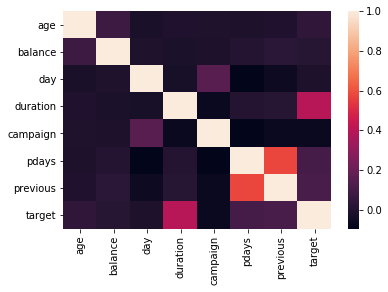

Descriptive statistics enables you to understand your python data model better and more meaningfully. As studied earlier, a better correlation between the data provides better accuracy in results. Hence, check for the correlation between various dataset variables using the below-given code.

When dealing with any python modeling, feature engineering plays an essential role. A lousy feature will immediately impact your predictive model, regardless of the data or architecture.

It may be essential to build and train better features for machine learning to perform effectively on new tasks. Feature engineering provides the potential to generate new features to simplify and speed up data processing while simultaneously improving model performance. You may use tools like FeatureTools and TsFresh to make feature engineering easier and more efficient for your predictive model.

Before you go further, double-check that your data gathering is compatible with your predictive model. Once you’ve collected the data, examine and refine it until you find the required information for your python modeling.

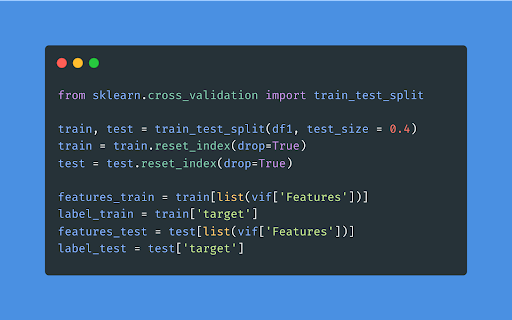

The dataset preparation majorly focuses on dividing the datasets into three sub-datasets used to train and assess the model’s performance.

The choice of the variable for your project purely depends on which Python data model you use for your predictive analytics model. Moreover, various predictive algorithms are available to help you select features of your dataset and make your task easy and efficient.

Below are some of the steps to follow during the variable selection procedure of your dataset

You have to dissect your dataset into train and test data and try various new predictive algorithms to identify the best one. This fundamental but complicated process may require external assistance from a custom AI software development company. Moreover, doing this will help you evaluate the performance of the test dataset and make sure the model is stable. By this stage, 80% of your python modeling is done.

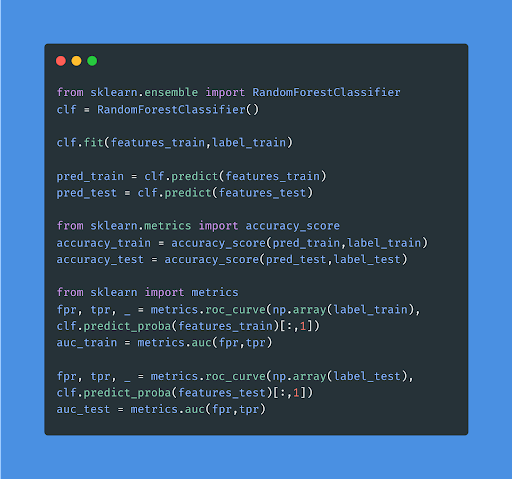

Let’s utilize the random forest predictive analytics framework to analyze the performance of our test data.

You can also tweak the model’s hyperparameters to improve overall performance. For a better understanding, check out the snippet code below.

Testing with various predictive analytics models, the one that gives the best accuracy is selected as the final one.

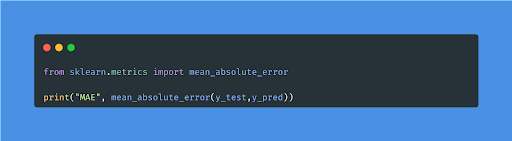

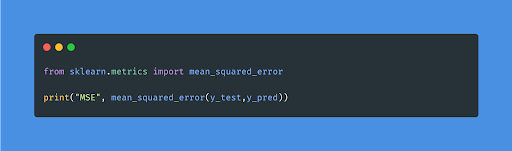

No, we are not done yet. While building a predictive analytics model, finalizing the model is not all to deal with. You also have to evaluate the model performance based on various metrics. Let’s go through some of these metrics in detail below:

MAE is a straightforward metric that calculates the absolute difference between actual and predicted values. The degree of errors for predictions and observations is measured using the average absolute errors for the entire group.

MSE is a popular and straightforward statistic with a bit of variation in mean absolute error. The squared difference between the actual and anticipated values is calculated using mean squared error.

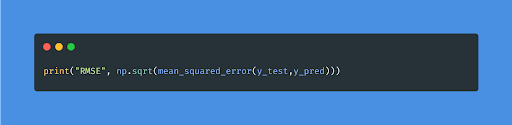

As the term, RMSE implies that it is a straightforward square root of mean squared error.

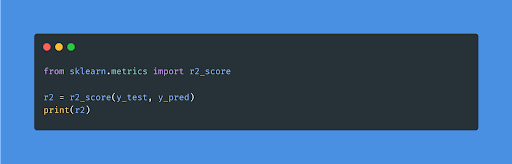

The R2 score, also called the coefficient of determination, is one of the performance evaluation measures for the regression-based machine learning model. Simply put, it measures how close the target data points are to the fitted line. As we have shown, MAE and MSE are context-dependent, but the R2 score is context neutral.

So, with the help of R squared, we have a baseline model to compare to a model that none of the other metrics give.

Let’s explore the top Python libraries that you can leverage for predictive modelling.

TensorFlow is an open-source deep learning library used to build, train, and deploy predictive models efficiently. Its flexible architecture supports CPUs, GPUs, and TPUs for large-scale computations.

TensorFlow excels in neural networks, time-series forecasting, and production-ready model deployment, making it ideal for complex predictive modeling in finance, healthcare, and retail.

PyTorch is a dynamic deep learning framework favored for research and production due to its flexible computation graphs and strong GPU support.

It simplifies building custom models for tasks like image recognition, NLP, and time-series prediction. Its seamless integration with Python makes it intuitive for developing advanced predictive modeling solutions.

Pandas is Python’s go-to library for data manipulation and preprocessing in predictive modeling. It allows handling structured data using DataFrames, performing tasks like cleaning, transformation, and feature engineering.

Efficient slicing, grouping, and merging of large datasets with Pandas simplifies preparing input features before feeding them into machine learning or deep learning models.

Scikit‑learn provides an extensive suite of machine learning algorithms for classification, regression, clustering, and dimensionality reduction. It’s the backbone of traditional predictive modeling in Python.

It offers tools for model training, evaluation, cross-validation, and pipelines. Its simplicity and rich documentation make it a staple for building baseline models and experimentation workflows.

Matplotlib is Python’s foundational visualization library for creating static, interactive, and publication-quality charts. In predictive modeling, it visualizes trends, residuals, feature importance, and model performance metrics.

When combined with libraries like Seaborn, it provides clear insights into patterns within datasets, enabling analysts to validate assumptions and communicate predictions effectively.

XGBoost is a high-performance gradient boosting library known for delivering state-of-the-art predictive accuracy. It is widely used for structured/tabular data problems like classification, regression, and ranking.

Its efficiency in handling missing values, built-in cross-validation, and parallelized computations make it a top choice in Kaggle competitions and enterprise predictive modeling projects.

Modern predictive modeling in Python goes beyond building and evaluating models. It also involves automating optimizations and extracting high‑value features from raw data. Advanced tools can significantly enhance accuracy, efficiency, and scalability. Let’s learn more about them in brief.

Optuna is a state‑of‑the‑art hyperparameter optimization framework that intelligently searches for the best parameters to improve model performance. Unlike traditional grid or random search, Optuna uses Tree‑structured Parzen Estimator (TPE) and pruning techniques to converge on optimal configurations quickly.

It works seamlessly with libraries like XGBoost, LightGBM, PyTorch, and TensorFlow, making it invaluable for both classical ML and deep learning projects. Automating tuning saves hours of manual experimentation while often delivering superior predictive accuracy.

Tsfresh focuses on extracting hundreds of statistical and domain‑agnostic features from time‑series datasets, such as mean, variance, entropy, and autocorrelation metrics.

This reduces the need for manual feature engineering in forecasting tasks like sales prediction, demand planning, or sensor data analysis, and helps models capture hidden temporal patterns.

Featuretools is designed for relational datasets and automates the creation of new predictive features through its Deep Feature Synthesis (DFS) technique.

It can generate meaningful aggregates, trends, and transformations across multiple linked tables. This is critical for projects involving customer analytics, churn prediction, and financial risk modeling.

In essence, these tools enhance predictive modeling pipelines by reducing manual effort, improving model accuracy, and enabling complex feature discovery that would be difficult to achieve with traditional methods alone.

Maruti Techlabs partnered with a SaaS firm, Core Nova, which provides telemarketing services across sectors, including finance and automotive.

They struggled to distinguish human voices from answering machines in the first 500 ms, with overlapping audio patterns causing 73 % of answering‑machine and 27 % of human‑answered calls clustering together incorrectly.

Over four weeks, Maruti Techlabs conducted an AI readiness audit, cleaned and labeled the audio datasets, and built a Python-based predictive model capable of classifying calls within 500 ms. The solution was integrated into the client’s backend and included iterative relabeling to improve data quality.

This automation saved each agent 30 minutes per day, and operations costs dropped by USD 110K per month. The efficiency gains allowed agents to engage more prospects, leading the client to renew the partnership for further phases.

As competition grows, businesses seek an edge in delivering products and services to crowded marketplaces. Data-driven predictive models can assist these companies in resolving long-standing issues in unusual ways.

While there are numerous programming languages to pick from in today’s world, there are many reasons why Python has evolved as one of the top competitors. Python’s foundations are rooted in versatility since it can be used to construct applications ranging from Raspberry Pi to web servers and desktop applications.

This article discusses Python as a popular programming language and framework that can efficiently solve business-level problems. Out of the extensive collection of Python frameworks, Scikit-learn is one of the commonly used Python libraries, which contains many algorithms, including machine learning capabilities, to help your company leverage the power of automation and future possibilities.

The team of data scientists at Maruti Techlabs takes on the preliminary work of evaluating your business objectives and determining the relevant solutions to the posed problems. For instance, using the power of predictive analytics algorithms in machine learning, we developed a sales prediction tool for an auto parts manufacturer. The goal was to have a more accurate prediction of sales, and to date, our team is helping the company improve the tool and, thus, predict sales more accurately.

As a part of the agreement, we broke down the entire project into three stages, each consisting of a distinctive set of responsibilities. The first stage included a comprehensive understanding of our client’s business values and data points. Subsequently, our data scientists refined and organized the dataset to pull out patterns and insights as needed. In the final stage, our data engineers used the refined data and developed a predictive analytics machine learning model to accurately predict upcoming sales cycles. It helped our client prepare better for the upcoming trends in the market and, resultantly, outdo their competitors.

Here’s what the VP of Product had to say about working with us –

“The quality of their service is great. There hasn’t been a time when I’ve sent an email and they don’t respond. We expect the model to improve the accuracy of our predictions by 100%. Our predictions above a thousand pieces of parts are going to be 30% accurate, which is pretty decent for us. Everything under a thousand pieces will be 80% accurate.

Maruti Techlabs is attentive to customer service. Their communication is great even after the development. They always make sure that we’re happy with what we have. I’m sure that they’ll continue doing that based on the experience I’ve had with them in the past 8–9 months”.

With the help of our machine learning services, we conduct a thorough analysis of your business and deliver insights to help you identify market trends, predict user behavior, personalize your products and services, and make overall improved business decisions.

Get in touch with us today and uncover the hidden patterns in your business data.