How Multi-Agent LLM Architectures Support Better Business Decisions

Enterprises are moving quickly toward multi-agent Large Language Model (LLM) systems to manage their most critical workloads. Gartner predicts that by 2026, more than 80% of enterprises will have used generative AI APIs or models, or deployed GenAI-enabled applications in production, and multi-agent LLMs are set to play a significant role in this shift.

A single model often struggles to handle every task well. Businesses are now adopting groups of models that work together like a team: one gathers data, another analyzes patterns, and a third recommends next steps. This collaboration can make processes faster, safer, and more reliable.

From managing compliance to streamlining decision-making, multi-agent LLMs are helping enterprises stay efficient and competitive. In this blog, we’ll cover what multi-agent LLM systems are, how they work, their enterprise applications, the key benefits and challenges, and best practices for managing them.

A multi-agent LLM system is like a team of AI helpers, each with its own job. Instead of one big model trying to do everything, different models focus on what they do best. They talk to each other, share results, and work together to solve bigger problems. This setup makes the system smarter, faster, and more reliable for enterprises.

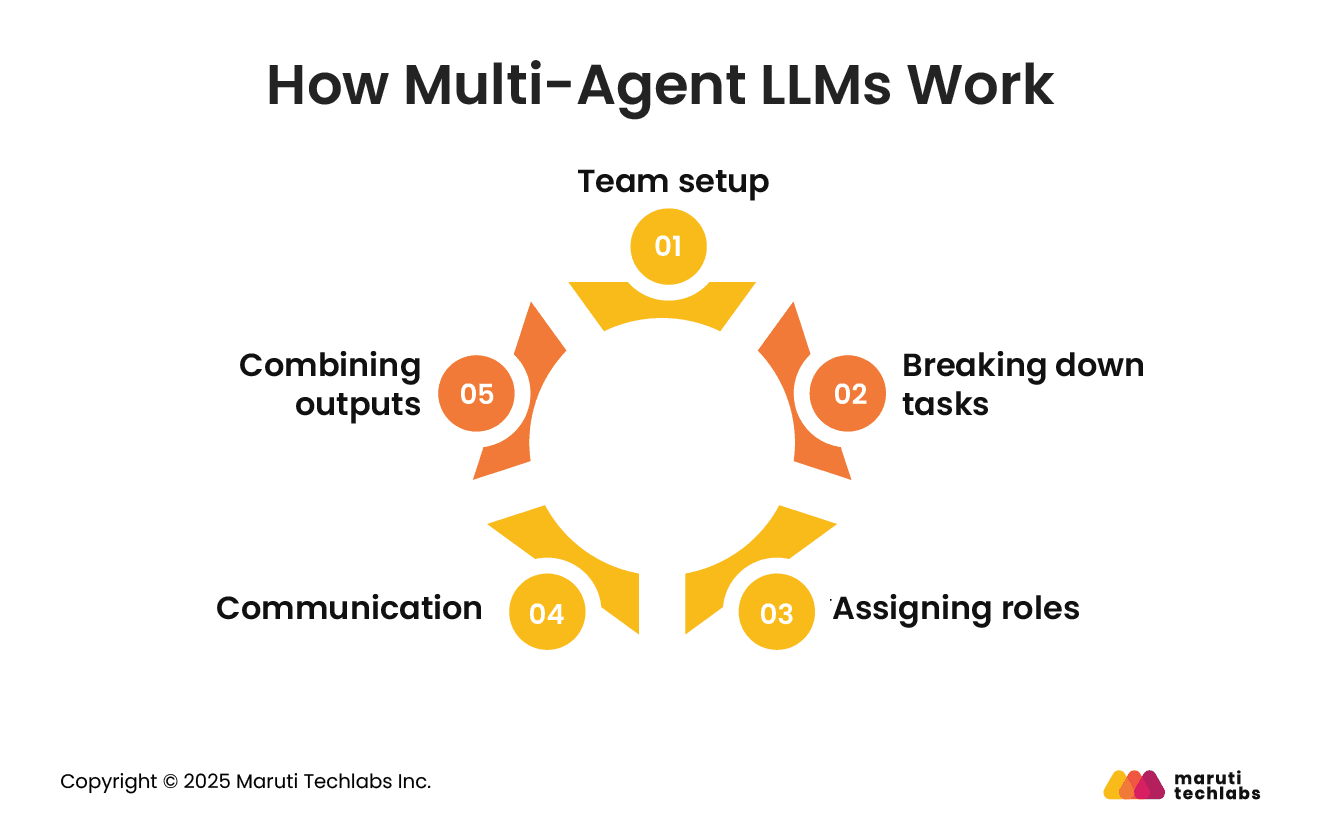

Here is how multi-agent LLMs work:

This teamwork model enables companies to manage complex workloads, such as compliance checks, customer service, or risk analysis, without placing all the pressure on a single model. By dividing the work, multi-agent LLMs improve efficiency and accuracy and keep operations running smoothly.
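To make the gather, analyze, recommend split described earlier more concrete, here is a minimal sketch of a sequential agent pipeline. Each "agent" is a plain Python function standing in for an LLM call; the function names, the shared `state` dictionary, and the sample sales figures are hypothetical assumptions for illustration, not a specific framework's API.

```python
from typing import Callable

# Hypothetical: each agent takes the shared state, adds its contribution, and returns it.
Agent = Callable[[dict], dict]

def gather_agent(state: dict) -> dict:
    # Stand-in for fetching data; a real agent might query a database or a search tool.
    state["data"] = [120, 135, 90, 160]
    return state

def analyze_agent(state: dict) -> dict:
    # Stand-in for pattern analysis: compute an average and a simple trend.
    data = state["data"]
    state["average"] = sum(data) / len(data)
    state["trend"] = "up" if data[-1] > data[0] else "down"
    return state

def recommend_agent(state: dict) -> dict:
    # Stand-in for recommending a next step based on the analysis.
    if state["trend"] == "up":
        state["recommendation"] = "Increase inventory for next month"
    else:
        state["recommendation"] = "Hold inventory at current levels"
    return state

def run_pipeline(agents: list[Agent], task: str) -> dict:
    # Pass the shared state through each agent in order.
    state = {"task": task}
    for agent in agents:
        state = agent(state)
    return state

result = run_pipeline([gather_agent, analyze_agent, recommend_agent], "Plan Q3 inventory")
print(result["recommendation"])  # Increase inventory for next month
```

A sequential pipeline is the simplest arrangement; real systems may add branching, retries, or a coordinating agent that decides which specialist to call next.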

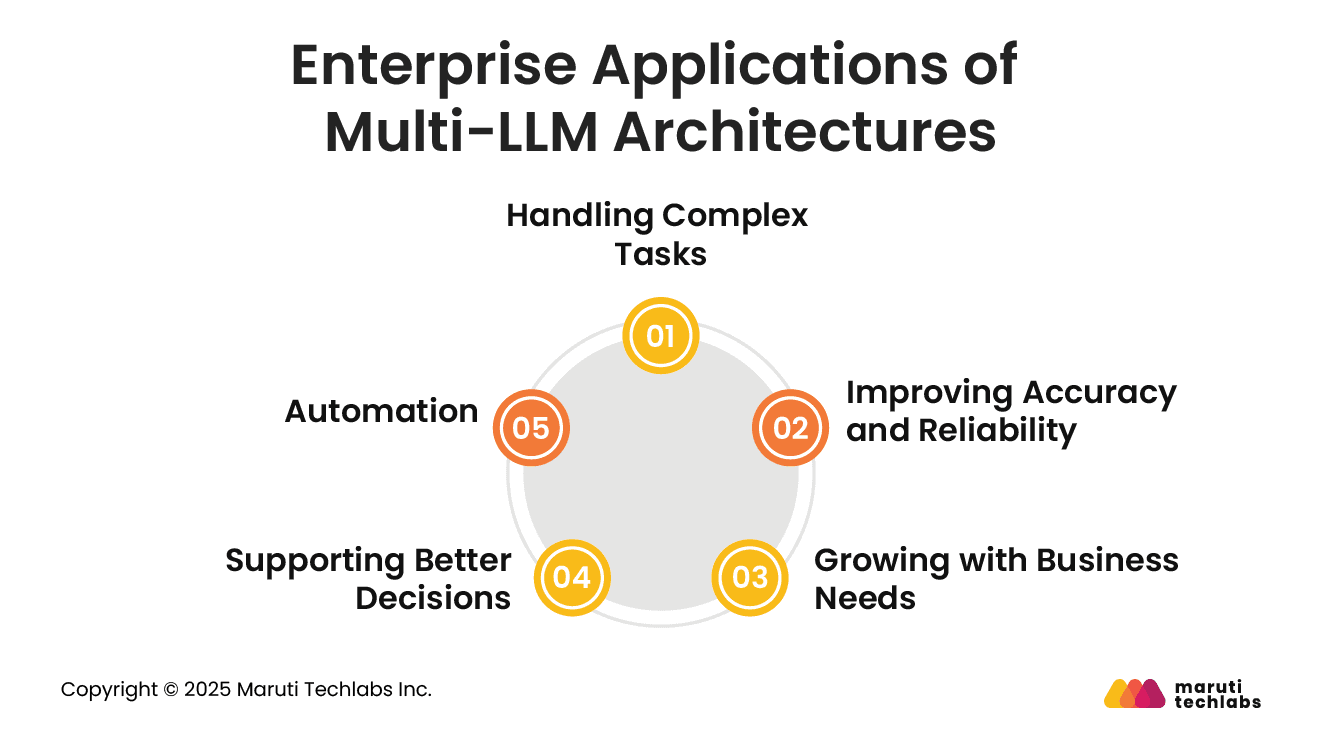

Multi-LLM architectures can support businesses in many practical ways. Instead of relying on one big system, companies use several smaller ones, each focusing on a different job. By working together, they make everyday work smoother and more dependable.

Here’s how businesses use them:

Many business tasks involve multiple steps, such as reviewing contracts, analyzing sales data, or managing supplies. With multiple systems working together, each takes care of a part of the job. This teamwork makes the whole process quicker and easier.

Errors can be costly. In this setup, one system produces an answer, and another checks it. This reduces mistakes and gives businesses more confidence in the results, enabling them to make stronger decisions.
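A minimal sketch of the produce-then-check pattern, using a simple numeric task so the check is easy to follow. `draft_agent` and `review_agent` are hypothetical stand-ins for LLM calls: the draft agent deliberately rounds each line item to simulate a model error, and the review agent recomputes the total from the source data before approving it.

```python
def draft_agent(line_items: list[float]) -> dict:
    # Hypothetical drafting agent; rounding each item simulates a subtle model mistake.
    total = sum(round(x) for x in line_items)
    return {"claimed_total": total}

def review_agent(line_items: list[float], draft: dict, tolerance: float = 0.01) -> dict:
    # Hypothetical checking agent: independently recompute the answer from the source data.
    actual = round(sum(line_items), 2)
    approved = abs(draft["claimed_total"] - actual) <= tolerance
    return {"approved": approved, "expected_total": actual, "claimed_total": draft["claimed_total"]}

def generate_and_check(line_items: list[float]) -> dict:
    draft = draft_agent(line_items)
    verdict = review_agent(line_items, draft)
    if not verdict["approved"]:
        # Rejected drafts are routed back for revision or to a person.
        verdict["action"] = "send back for revision or human review"
    return verdict

print(generate_and_check([10.4, 20.4, 5.2]))
print(generate_and_check([10.0, 20.0]))
```

The value of the pattern comes from the checker using a different method (here, direct recomputation) than the producer; two agents making the same mistake the same way would not catch it.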

As businesses grow, so does their data. Multi-LLM setups can grow with it by adding more systems to handle the additional work. Whether the load is customer questions, reports, or records, the system can keep pace as volume rises.
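One way to let the system grow by adding agents is a router that maps each task type to a registered agent, so a new kind of work is handled by registering another agent rather than changing the existing ones. `AgentRouter` and the lambda agents below are hypothetical placeholders for real LLM-backed agents.

```python
from typing import Callable

class AgentRouter:
    """Hypothetical router: each task type maps to one registered agent function."""

    def __init__(self) -> None:
        self._agents: dict[str, Callable[[str], str]] = {}

    def register(self, task_type: str, agent: Callable[[str], str]) -> None:
        self._agents[task_type] = agent

    def dispatch(self, task_type: str, payload: str) -> str:
        agent = self._agents.get(task_type)
        if agent is None:
            raise KeyError(f"No agent registered for task type: {task_type}")
        return agent(payload)

router = AgentRouter()
router.register("customer_question", lambda q: f"Answer drafted for: {q}")
router.register("report", lambda r: f"Report generated: {r}")
# Handling a new kind of work later means registering another agent.
router.register("records", lambda r: f"Record filed: {r}")

print(router.dispatch("records", "invoice #1042"))  # Record filed: invoice #1042
```

Scaling for volume (rather than for new task types) would typically mean running several instances of the same agent behind the router, which this sketch leaves out.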

Good choices come from clear insights. These systems look at data from different angles and share useful options with managers. For example, they can help plan inventory, identify financial risks, or design marketing campaigns, providing leaders with the support they need to make informed decisions more quickly and effectively.

Repetitive work like answering customer questions, preparing reports, or sorting documents can take up valuable time. Multi-LLM systems automate these tasks, reducing manual effort. This gives employees more time to focus on strategic or creative work that adds higher value.

In short, multi-LLM architectures offer efficiency, reliability, and scalability, enabling enterprises to perform better across multiple areas.

Multi-agent LLM systems are proving useful for businesses because they make daily work faster, more accurate, and better organized. Instead of depending on one big model, companies use several smaller ones, each handling a specific job. Working together, they bring practical benefits that help companies run more smoothly:

Specialized agents focus on defined jobs like compliance, customer support, or reporting. Narrowing each agent's scope reduces errors, including manual human errors, and helps keep results consistent across the organization.

These systems can retain context across long conversations and keep track of complex datasets. In customer support, for example, agents can recall earlier issues and give more complete, helpful answers.
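One common way to give agents this kind of recall is a shared memory store keyed by customer, which any agent can read before responding. The sketch below assumes a simple in-process store; `ConversationMemory`, `support_agent`, and `max_turns` are hypothetical names, and real systems often persist history in a database and pass a trimmed or summarized version to the model.

```python
from collections import defaultdict

class ConversationMemory:
    """Hypothetical shared memory: stores recent turns per customer so any agent can recall them."""

    def __init__(self, max_turns: int = 50) -> None:
        self.max_turns = max_turns
        self._history: dict[str, list[str]] = defaultdict(list)

    def remember(self, customer_id: str, message: str) -> None:
        turns = self._history[customer_id]
        turns.append(message)
        # Keep only the most recent turns so the context passed to a model stays bounded.
        del turns[:-self.max_turns]

    def recall(self, customer_id: str) -> list[str]:
        return list(self._history.get(customer_id, []))

def support_agent(memory: ConversationMemory, customer_id: str, question: str) -> str:
    # Look up earlier turns before answering, then store the new one.
    past = memory.recall(customer_id)
    memory.remember(customer_id, question)
    if past:
        return f"Following up on your earlier issue ('{past[-1]}'): {question}"
    return f"New request: {question}"

memory = ConversationMemory(max_turns=2)
print(support_agent(memory, "c1", "Router keeps restarting"))
print(support_agent(memory, "c1", "Still restarting after update"))
```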

Multiple agents can operate in parallel. One might handle customer questions, another analyze market data, and a third monitor compliance. This saves time and increases productivity.
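A minimal sketch of running independent agents concurrently with Python's `asyncio.gather`. The three agent functions are hypothetical, and `asyncio.sleep` stands in for waiting on a model or API response; because the waits overlap, the total time is close to one agent's latency rather than the sum of all three.

```python
import asyncio
import time

# Hypothetical agents: asyncio.sleep simulates waiting on a model or API call.
async def answer_customer(question: str) -> str:
    await asyncio.sleep(0.2)
    return f"support: answered '{question}'"

async def analyze_market(topic: str) -> str:
    await asyncio.sleep(0.2)
    return f"market: analyzed {topic}"

async def check_compliance(document: str) -> str:
    await asyncio.sleep(0.2)
    return f"compliance: checked {document}"

async def run_agents_in_parallel() -> list[str]:
    # gather() runs the coroutines concurrently and returns results in call order.
    return await asyncio.gather(
        answer_customer("refund status"),
        analyze_market("Q3 sales"),
        check_compliance("vendor contract"),
    )

start = time.perf_counter()
results = asyncio.run(run_agents_in_parallel())
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f}s")  # roughly 0.2s, versus about 0.6s if run one after another
```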

Agents share information instantly. A sales trend identified by one agent can be passed to the marketing and sales teams in real time, so they can act quickly and in sync.
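Instant sharing between agents is often implemented with a publish/subscribe pattern: one agent publishes a finding to a topic, and every agent subscribed to that topic receives it. The in-process `MessageBus` below is a hypothetical minimal sketch; production deployments would more likely use a dedicated message broker, and the topic name and payload are illustrative.

```python
from collections import defaultdict
from typing import Callable

class MessageBus:
    """Hypothetical in-process publish/subscribe bus."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Deliver the message synchronously to every handler registered for the topic.
        for handler in self._subscribers[topic]:
            handler(message)

bus = MessageBus()
marketing_inbox: list[dict] = []
sales_inbox: list[dict] = []
bus.subscribe("sales_trend", marketing_inbox.append)
bus.subscribe("sales_trend", sales_inbox.append)

# An analysis agent publishes a finding once; every subscriber receives the same message.
bus.publish("sales_trend", {"product": "smart thermostat", "change_pct": 18})
print(marketing_inbox, sales_inbox)
```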

Multi-agent systems can spot problems quickly and act right away. For example, if a shipment is delayed, one agent can flag the delay and alert the team while another looks for an alternative option, so work continues smoothly.
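The shipment example can be sketched as two cooperating agents: a monitoring agent that measures the delay against the promised date, and a logistics agent that picks an alternative carrier. The field names, carriers, dates, and the rule "cheapest carrier that still meets the promised date" are hypothetical assumptions for illustration.

```python
from datetime import date
from typing import Optional

def monitor_agent(shipment: dict) -> int:
    # Number of days the current ETA is past the promised date (0 if on time).
    delay = (shipment["eta"] - shipment["promised"]).days
    return max(delay, 0)

def logistics_agent(shipment: dict, carriers: list[dict]) -> Optional[dict]:
    # Hypothetical rule: cheapest carrier that can still deliver by the promised date.
    options = [c for c in carriers if c["delivery"] <= shipment["promised"]]
    return min(options, key=lambda c: c["cost"]) if options else None

def handle_shipment(shipment: dict, carriers: list[dict]) -> dict:
    delay_days = monitor_agent(shipment)
    if delay_days == 0:
        return {"status": "on_time"}
    alternative = logistics_agent(shipment, carriers)
    if alternative is None:
        return {"status": "delayed", "delay_days": delay_days, "action": "escalate to operations team"}
    return {"status": "delayed", "delay_days": delay_days, "action": f"reroute via {alternative['name']}"}

shipment = {"id": "SHP-1", "promised": date(2025, 3, 10), "eta": date(2025, 3, 14)}
carriers = [
    {"name": "Carrier A", "delivery": date(2025, 3, 9), "cost": 420.0},
    {"name": "Carrier B", "delivery": date(2025, 3, 10), "cost": 380.0},
    {"name": "Carrier C", "delivery": date(2025, 3, 12), "cost": 250.0},
]
print(handle_shipment(shipment, carriers))
```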

But along with these benefits, businesses also face some challenges they need to handle carefully:

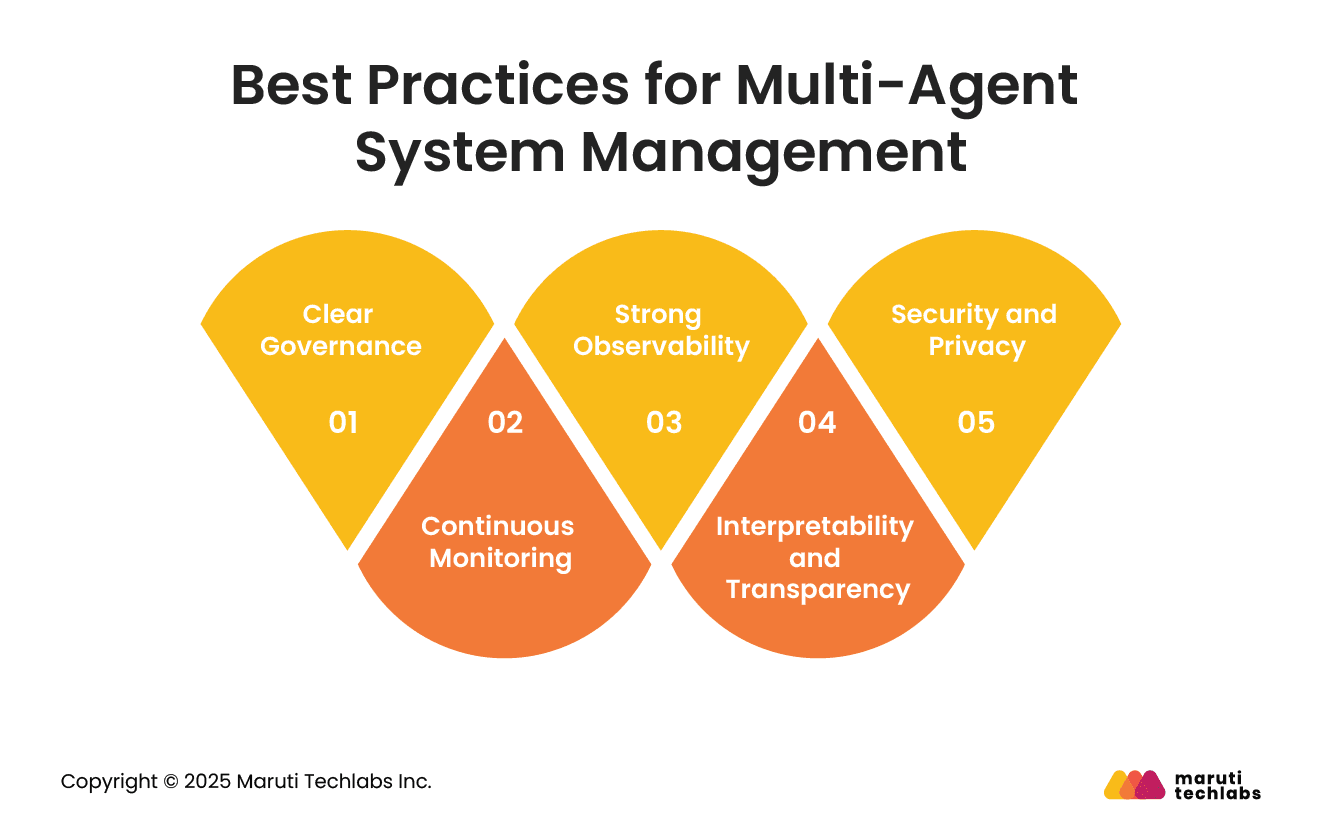

Managing a multi-agent LLM system effectively helps it work smoothly, stay secure, and deliver tangible benefits to the business. Here are some simple best practices to keep LLM agent architectures effective:

1. Clear Governance: Define roles and responsibilities for each agent and the humans involved. Set clear rules for when people should step in, and build fairness and safety into the LLM architecture.

2. Continuous Monitoring: Keep track of agent activity in real time. Watch for wrong outputs, failed steps, or breakdowns in coordination so issues can be fixed quickly.

3. Strong Observability: Use observability platforms to see how agents reason, interact, and complete tasks. Full visibility enables teams to catch problems early, before they impact business operations.

4. Interpretability and Transparency: Log inputs, outputs, and reasoning steps. This makes it easier to review decisions, audit workflows, and understand why agents acted a certain way.

5. Security and Privacy: Ensure agents follow company policies and regulations when handling sensitive data. Protect the system from risks like prompt injection, unauthorized access, or tool misuse.
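Several of these practices (per-agent permissions, logging of inputs and outputs, and a clear rule for when a human steps in) can be combined in a thin wrapper around agent tool calls. The sketch below is a minimal illustration under stated assumptions: the agent names, tool names, the `ALLOWED_TOOLS` table, and the 0.7 `REVIEW_THRESHOLD` are hypothetical, and a production system would write to a durable log or observability platform rather than an in-memory list.

```python
import json
import time
from typing import Callable

AUDIT_LOG: list[str] = []

# Hypothetical least-privilege table: which tools each agent may call.
ALLOWED_TOOLS = {
    "support_agent": {"search_kb", "create_ticket"},
    "finance_agent": {"read_ledger"},
}

# Hypothetical governance rule: results below this confidence go to a human.
REVIEW_THRESHOLD = 0.7

def call_tool(agent: str, tool: str, args: dict, fn: Callable[[dict], dict]) -> dict:
    if tool not in ALLOWED_TOOLS.get(agent, set()):
        # Security: block and log any tool the agent is not permitted to use.
        AUDIT_LOG.append(json.dumps({"ts": time.time(), "agent": agent, "tool": tool,
                                     "args": args, "status": "blocked"}))
        raise PermissionError(f"{agent} is not allowed to use {tool}")
    result = fn(args)
    needs_review = result.get("confidence", 0.0) < REVIEW_THRESHOLD
    # Interpretability: record inputs, outputs, and the review decision for later audits.
    AUDIT_LOG.append(json.dumps({"ts": time.time(), "agent": agent, "tool": tool,
                                 "args": args, "result": result,
                                 "status": "needs_human_review" if needs_review else "ok"}))
    return {**result, "needs_human_review": needs_review}

response = call_tool("support_agent", "search_kb", {"query": "refund policy"},
                     lambda a: {"answer": "30-day refunds", "confidence": 0.55})
print(response["needs_human_review"])  # True: confidence is below the threshold
```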

Following these practices helps businesses run multi-agent LLM systems smoothly and realize the benefits of LLM agent architectures in their day-to-day work.

Multi-agent LLMs help businesses work better by connecting data and making daily tasks easier to manage. With different agents working together, companies can handle complex jobs faster and with fewer mistakes.

Of course, challenges exist, but with the proper practices, such as transparent governance, continuous monitoring, and robust security, enterprises can manage these systems effectively. Doing so not only reduces risks but also gives them a real competitive edge in their industry.

At Maruti Techlabs, we help businesses unlock the full potential of GenAI with practical solutions tailored to their needs. To learn more, explore our GenAI services or contact us to discuss how we can support your goals.

Want to know how prepared your organization is for multi-agent AI adoption? Try our AI Readiness Calculator to evaluate your current maturity and identify the right next steps for success.

LLM architectures need vast amounts of data and computing power, which can be costly. The models can still make mistakes, give biased answers, or state incorrect facts. They are also hard to interpret, which makes it difficult to understand why they give a particular response in a specific situation.

An LLM works by training on massive amounts of text and learning patterns in language. It breaks text into tokens and generates output by predicting the next token, one step at a time. Stacked neural network layers (in most modern LLMs, transformer layers) process these tokens, which lets the model produce human-like answers, summaries, or translations.
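To make the "predict the next token step by step" idea concrete, here is a toy sketch. It is not how an LLM is built: it replaces the neural network with simple bigram counts and splits text on whitespace instead of using a subword tokenizer, keeping only the autoregressive loop (predict a token, append it, repeat). All names and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    # Simplification: real LLMs use subword tokenizers (e.g. byte-pair encoding).
    return text.lower().split()

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    # Count which token follows which; a stand-in for what a neural network learns.
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], start: str, max_tokens: int = 5) -> str:
    tokens = [start]
    for _ in range(max_tokens):
        candidates = counts.get(tokens[-1])
        if not candidates:
            break  # no known continuation
        # Greedy decoding: append the most frequent next token, then repeat.
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)

corpus = [
    "agents share results with the team",
    "agents share results with the manager",
    "the team reviews results",
]
model = train_bigram(corpus)
print(generate(model, "agents"))  # agents share results with the team
```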

LLM architecture makes it easy to generate human-like text, answer questions, translate languages, and summarize long content. It helps businesses save time and effort by automating repetitive tasks. These models can also be applied to various industries, such as healthcare, education, and customer service, making them highly versatile and functional in real-world applications.

LLM architecture is used in chatbots for customer support, virtual assistants like Siri or Alexa, and tools like Grammarly for writing help. Businesses also use it to analyze documents, create marketing content, translate languages, and assist doctors and lawyers with research by quickly processing large amounts of information.