What is the Working of Image Recognition and How is it Used?

When it comes to identifying and analyzing the images, humans recognize and distinguish different features of objects. It is because human brains are trained unconsciously to differentiate between objects and images effortlessly.

In contrast, a computer sees an image as an array of numbers and analyzes the patterns in a digital image or video frame to distinguish its critical features. Thanks to AI approaches such as deep learning, along with the rise of smartphones and cheaper cameras, a new era of image recognition has opened.

Industry sectors such as gaming, automotive, and e-commerce are increasingly adopting image recognition. The global image recognition market is projected to reach $42.2 billion by 2022.

While choosing an image recognition solution, its accuracy plays an important role. However, continuous learning, flexibility, and speed are also considered essential criteria depending on the applications.

Image recognition is a technology that enables us to identify objects, people, entities, and several other variables in images. Today, users share a massive amount of data through apps, social networks, and websites. Moreover, the rise of smartphones equipped with high-resolution cameras generates many digital images and videos. Hence, industries use this vast volume of digital data to deliver better and more innovative services.

Image recognition is a sub-category of computer vision and a process that helps to identify an object or attribute in digital images or video. Computer vision, however, is a broader term that covers different methods of gathering, processing, and analyzing data from the real world. Because this data is high-dimensional, computer vision turns it into numerical or symbolic information in the form of decisions. Apart from image recognition, computer vision also includes object recognition, image reconstruction, event detection, and video tracking.

Depending on the type of information required, you can perform image recognition at various levels of accuracy. An algorithm or model can identify the specific element, just as it can simply assign an image to a large category.

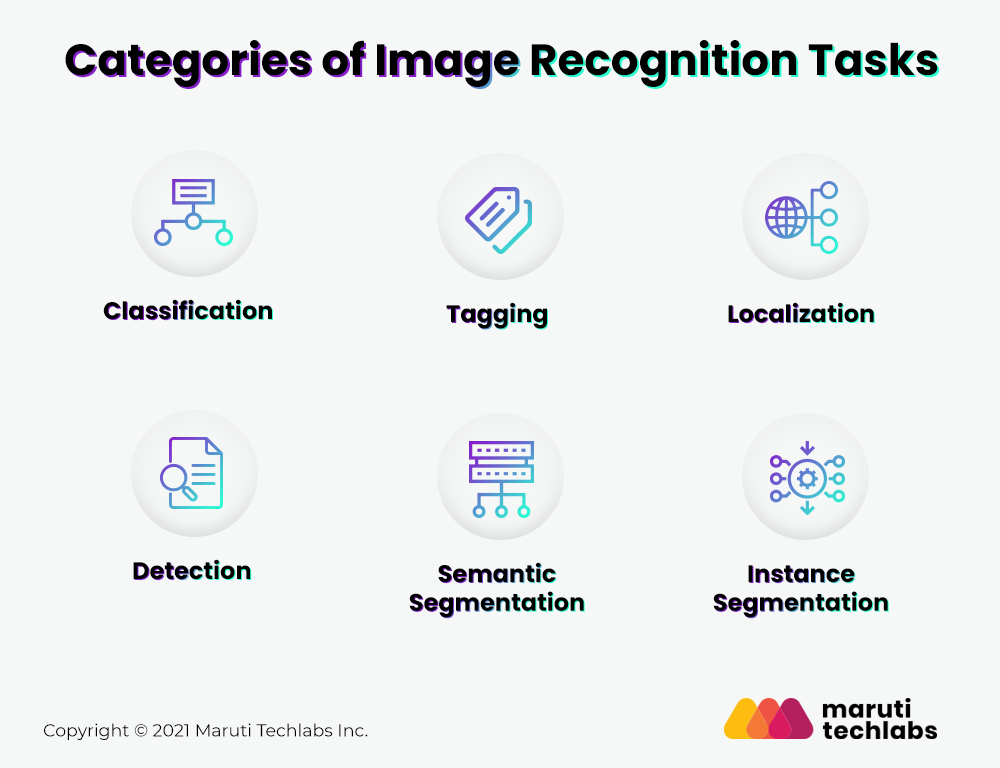

So, you can categorize the image recognition tasks into the following parts:

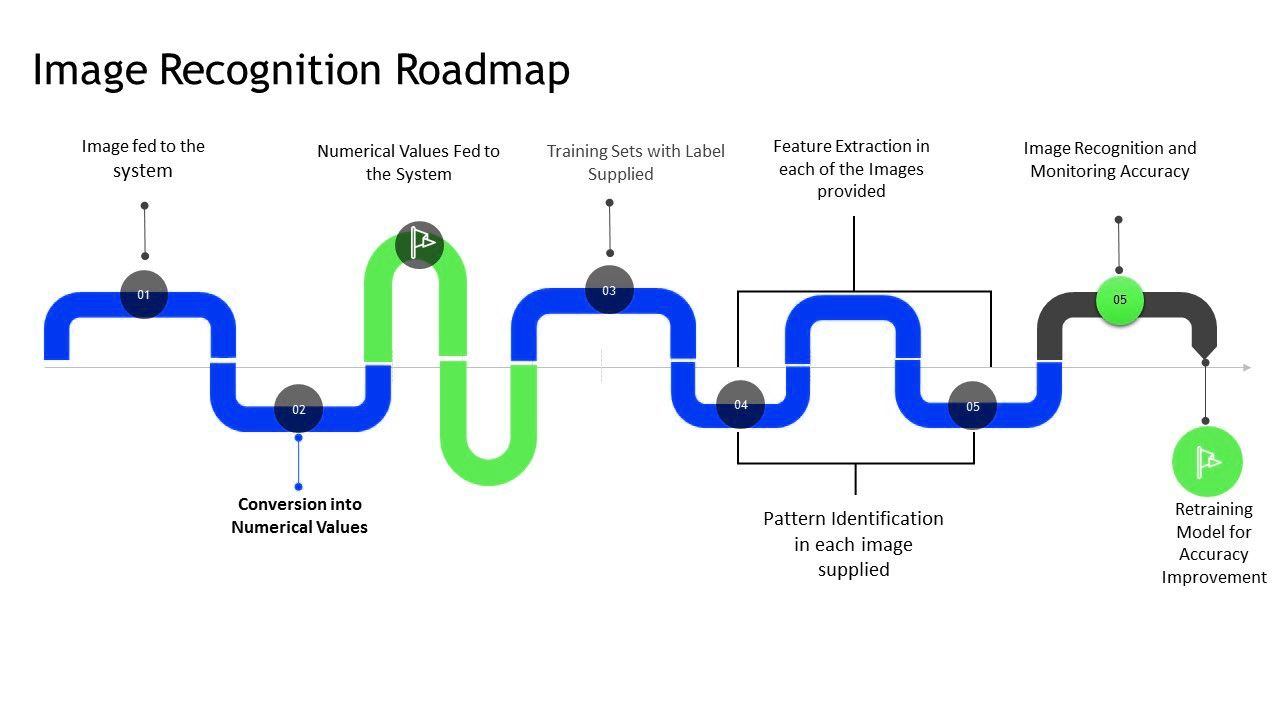

As mentioned above, a digital image is represented as a matrix of numbers. Each number represents the data associated with one image pixel. The intensity of each pixel is expressed as a single value (for a color image, for example, an average over its channels), and these values are arranged in matrix format.

The data fed to the recognition system is basically the location and intensity of various pixels in the image. You can train the system to map out the patterns and relations between different images using this information.
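To make the "matrix of numbers" idea concrete, here is a minimal sketch using NumPy. The pixel values are made up for illustration, and averaging the color channels is only one simple way to get a single intensity per pixel.

```python
import numpy as np

# A hypothetical 4x4 grayscale image: each entry is a pixel intensity (0-255).
gray = np.array([
    [0, 50, 100, 150],
    [10, 60, 110, 160],
    [20, 70, 120, 170],
    [30, 80, 130, 180],
], dtype=np.uint8)

# A hypothetical 2x2 RGB image: the shape is (height, width, channels).
rgb = np.array([
    [[255, 0, 0], [0, 255, 0]],
    [[0, 0, 255], [90, 120, 150]],
], dtype=np.uint8)

# The location of a pixel is its (row, column) index; the value is its intensity.
row, col = 2, 1
intensity = int(gray[row, col])

# Averaging the three color channels gives one intensity value per pixel.
rgb_as_gray = rgb.mean(axis=2)

print(gray.shape, rgb.shape)   # 2D array vs. 3D array
print(intensity)
print(rgb_as_gray)
```

The recognition system never sees anything other than arrays like these, so every later step is arithmetic on pixel locations and intensities.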

After finishing the training process, you can analyze the system’s performance on test data. During training, the weights of the neural network are updated repeatedly to increase the accuracy of the system and get precise results when recognizing images. In this way, neural networks process the numerical values using a deep learning algorithm and compare them with learned parameters to get the desired output.

Scale-Invariant Feature Transform (SIFT), Speeded Up Robust Features (SURF), and Principal Component Analysis (PCA) are some of the commonly used algorithms in the image recognition process. The image below displays the roadmap of image recognition in detail.

There are numerous types of neural networks, and many of them are useful for image recognition. However, convolutional neural networks (CNNs) deliver the best results in deep learning image recognition because of their unique working principle. Several variants of the CNN architecture exist, so let us consider a traditional variant to understand what is happening under the hood.

Most CNN architectures start with an input layer, which serves as the entrance to the neural network. It passes numerical data to the machine learning algorithm in a form that depends on the input type. Inputs can have different representations: for instance, an RGB image is represented as a three-dimensional array (height, width, and color channels), and a monochrome image as a two-dimensional array.

Hidden CNN layers consist of a convolution layer, normalization, activation function, and pooling layer. Let us understand what happens in these layers:

The convolution layer works quite differently from a traditional fully connected layer, where each value serves as an input to every neuron of the layer. Instead, a CNN uses filters, also called kernels, to generate feature maps. Depending on the input image, a kernel is a 2D or 3D matrix whose elements are trainable weights.
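The way a kernel produces a feature map can be sketched in a few lines of NumPy. This is a simplified illustration, assuming a single 2D input, no padding, and a stride of 1. The kernel values are hand-picked here, whereas in a real CNN they would be learned during training.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output value depends only on a small patch of the input.
            patch = image[i:i + kh, j:j + kw]
            feature_map[i, j] = np.sum(patch * kernel)
    return feature_map

# Hypothetical image: dark on the left, bright on the right.
image = np.array([
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
], dtype=float)

# Hand-picked kernel that responds to vertical dark-to-bright edges.
kernel = np.array([
    [-1, 1],
    [-1, 1],
], dtype=float)

feature_map = conv2d(image, kernel)
print(feature_map)
```

The feature map is non-zero only in the column where the brightness changes, which is the sense in which a kernel "detects" a feature. The same four weights are reused at every position, so the layer needs far fewer parameters than a fully connected one.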

Normalization is a mathematical function with two parameters: expectation (mean) and variance. Its role is to normalize the values and bring them into a range convenient for the activation function. Remember that normalization is carried out before the activation function.

The primary purpose of normalization is to reduce the training time and increase the system’s performance. It also makes it possible to configure each layer separately, with minimal dependency on the others.
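As a rough sketch of the normalization step, the function below shifts values by their mean and scales them by their standard deviation. It is a simplification: batch normalization as used in practice computes these statistics over a batch of inputs and also applies a learnable scale and shift, which are omitted here. The `eps` term is a small constant assumed for numerical safety.

```python
import numpy as np

def normalize(x, eps=1e-5):
    """Shift to zero mean and scale to (approximately) unit variance."""
    mean = x.mean()
    var = x.var()
    return (x - mean) / np.sqrt(var + eps)

# Hypothetical feature-map values before the activation function.
values = np.array([[2.0, 4.0], [6.0, 8.0]])
normalized = normalize(values)

print(normalized)
print(normalized.mean(), normalized.std())
```

After this step the values are centered around zero with a spread close to one, which keeps the inputs of the activation function in a predictable range.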

The activation function acts as a kind of barrier that blocks certain values. Many mathematical functions are used for this purpose in neural networks for computer vision. However, a popular choice for image recognition tasks is the Rectified Linear Unit (ReLU) activation function. It checks each array element and, if the value is negative, replaces it with zero.
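ReLU is simple enough to write in one line. The sketch below applies it to a small made-up array:

```python
import numpy as np

def relu(x):
    """Replace every negative element with zero and keep the rest unchanged."""
    return np.maximum(x, 0)

activations = relu(np.array([-2.0, 0.0, 3.5]))
print(activations)
```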

The pooling layer decreases the size of its input by taking the average value in the area defined by the kernel. The pooling layer is a vital stage. Without it, the input and output would have the same dimensions, which increases the number of adjustable parameters, requires much more computer processing, and decreases the algorithm’s efficiency.
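Here is a minimal pooling sketch, assuming non-overlapping square windows. It supports both the average described in this paragraph and the maximum, which the article mentions later. The function name and the `mode` parameter are illustrative choices, not a library API.

```python
import numpy as np

def pool2d(feature_map, size=2, mode="avg"):
    """Downsample a 2D feature map using non-overlapping size x size windows."""
    h_out = feature_map.shape[0] // size
    w_out = feature_map.shape[1] // size
    # Drop any rows/columns that do not fill a complete window.
    trimmed = feature_map[:h_out * size, :w_out * size]
    # Group the pixels of each window together, then reduce over each window.
    blocks = trimmed.reshape(h_out, size, w_out, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    return blocks.mean(axis=(1, 3))

feature_map = np.array([
    [1, 2, 5, 6],
    [3, 4, 7, 8],
    [9, 10, 13, 14],
    [11, 12, 15, 16],
], dtype=float)

avg_pooled = pool2d(feature_map, size=2, mode="avg")
max_pooled = pool2d(feature_map, size=2, mode="max")
print(avg_pooled)
print(max_pooled)
```

In both cases a 4x4 input becomes a 2x2 output, so every following layer has a quarter as many values to process.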

The output layer consists of several neurons, each of which represents one of the classes the algorithm can predict. The output values are adjusted with a softmax function so that they sum to 1. The largest value becomes the network’s answer to which class the input image belongs.
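The softmax step can be sketched as follows. The class names and raw scores are invented for illustration. Subtracting the maximum before exponentiating is a common numerical-stability trick and does not change the result.

```python
import numpy as np

def softmax(scores):
    """Turn raw output scores into positive values that sum to 1."""
    shifted = scores - np.max(scores)  # avoids overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum()

# Hypothetical classes and raw output-layer values for one image.
class_names = ["cat", "dog", "car"]
scores = np.array([2.0, 1.0, 0.1])

probabilities = softmax(scores)
predicted = class_names[int(np.argmax(probabilities))]

print(probabilities)
print(predicted)
```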

Here are some common challenges faced by image recognition models:

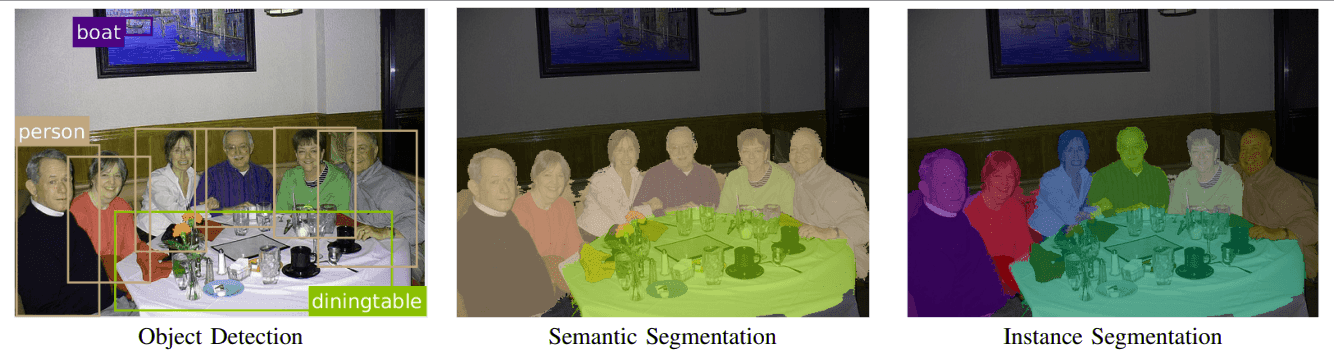

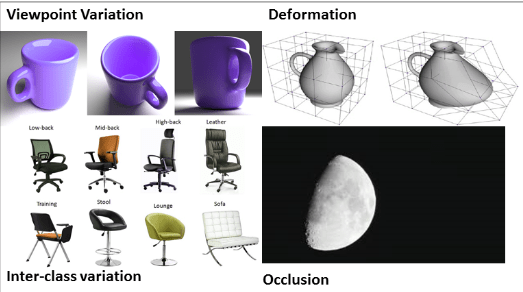

In real-life cases, the objects within an image are aligned in different directions. When such images are given as input, the image recognition system may predict inaccurate values. The system fails to account for the change in alignment, which is one of the biggest image recognition challenges.

Size variation strongly affects the classification of the objects in the image. An object looks bigger as you come closer to it and smaller as you move away. This changes the dimensions of the object in the image and leads to inaccurate results.

As you know, an object remains the same object even when it is deformed. The system, however, learns from the training images and may conclude that a particular object can only appear in a specific shape. In the real world, the shape of an object changes from image to image, which leads to inaccuracy in the results presented by the system.

Objects also differ within a class. They can be of different sizes and shapes but still represent the same class. For instance, chairs, bottles, and buttons all come in different appearances.

Sometimes, another object blocks the full view of the object in the image, which results in incomplete information being fed to the system. It is necessary to develop an algorithm that is robust to these variations and is trained on a wide range of sample data.

The training data should include variety within a single class and across multiple classes. This variety helps ensure that the model predicts accurate results when tested on sample data. It is tedious to confirm whether the sample data is sufficient, as most of the samples are in random order.
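One common way to widen the variety of training samples is to generate rotated and mirrored copies of each image, a practice usually called data augmentation. The article does not describe this technique, so treat the sketch below as an illustrative assumption rather than the method used here. It only covers right-angle rotations and flips; changes in scale or deformation would need additional transforms.

```python
import numpy as np

def simple_variants(image):
    """Return the original image plus rotated and mirrored copies."""
    return [
        image,
        np.rot90(image, k=1),   # rotated by 90 degrees
        np.rot90(image, k=2),   # rotated by 180 degrees
        np.rot90(image, k=3),   # rotated by 270 degrees
        np.fliplr(image),       # mirrored left to right
        np.flipud(image),       # mirrored top to bottom
    ]

# Hypothetical 2x3 grayscale image.
image = np.arange(6).reshape(2, 3)
variants = simple_variants(image)

print(len(variants))
for variant in variants:
    print(variant.shape)
```

Each variant keeps the label of the original image, so one labeled sample yields several training examples with different alignments.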

Neural networks follow some common yet challenging limitations while undergoing an image recognition process. Some of those are:

The Convolutional Neural Network (CNN) is an essential factor in solving the challenges that we discussed above. A CNN changes how the input is processed. Its inputs are not the absolute numerical values of all image pixels taken at once. Instead, the complete image is divided into small sets, where each set acts as a new image. The small size of the filter thus separates the entire image into smaller sections, and each set of neurons is connected to one small section of the image.

Now, these smaller images are processed in a way similar to a regular neural network. The computer collects the patterns and relations in each section and saves the results in matrix format.

The process keeps repeating until the complete image has been given to the system. The output is a large matrix representing the different patterns that the system has captured from the input image. This matrix is then reduced in size using max pooling, which extracts the maximum value from each smaller sub-matrix.
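To see what "dividing the image into small sections" looks like in practice, the sketch below uses NumPy's `sliding_window_view` (available in NumPy 1.20 and later) to list every 2x2 section of a small made-up image. The window size is an arbitrary choice for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hypothetical 4x4 image whose pixel values are simply 0..15.
image = np.arange(16).reshape(4, 4)

# Every overlapping 2x2 section of the image, indexed by its top-left corner.
patches = sliding_window_view(image, (2, 2))

print(patches.shape)      # (rows of sections, columns of sections, 2, 2)
print(patches[0, 0])      # the section in the top-left corner
print(patches[2, 2])      # the section in the bottom-right corner
```

Each of these sections is what a small group of neurons sees. The network processes them one by one with the same filter weights and collects the results into the output matrix described above.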

During the training phase, different levels of features are analyzed and classified into low level, mid-level, and high level. The low level consists of color, lines, and contrast. Mid-level consists of edges and corners, whereas the high level consists of class and specific forms or sections.

Hence, a CNN helps to reduce the required computation power and allows the processing of large images. It also copes better with variations in the image and provides results with higher precision than traditional neural networks.

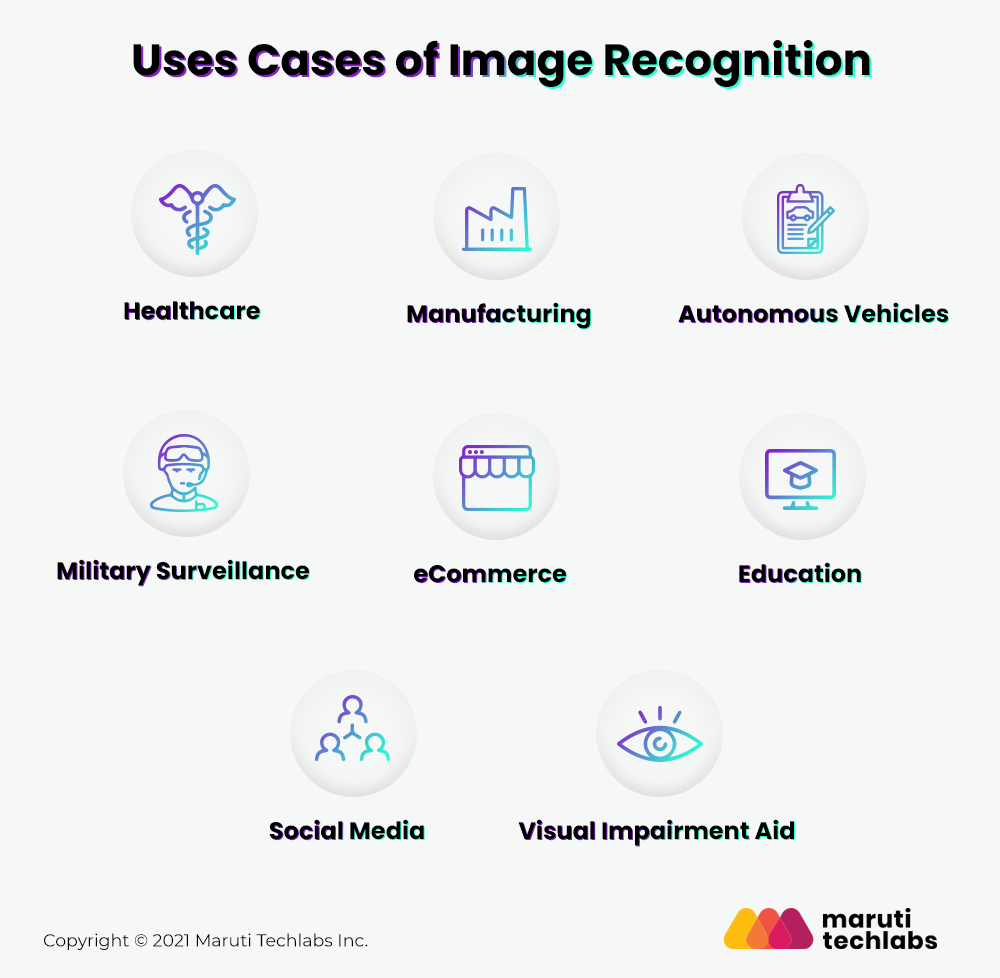

Deep learning image recognition is a broadly used technology that significantly impacts various business areas and our everyday lives. As the list of image recognition applications is practically endless, let us discuss some of the most compelling use cases in various business domains.

Despite years of practice and experience, doctors make mistakes like any other human being, especially when dealing with a large number of patients. Therefore, many healthcare facilities have already implemented image recognition systems to provide experts with AI assistance in numerous medical disciplines.

MRI, CT, and X-ray are well-known use cases in which a deep learning algorithm helps analyze the patient’s radiology results. The neural network model helps doctors find deviations and make accurate diagnoses, increasing the overall efficiency of result processing.

Analyzing the production lines includes evaluating the critical points daily within the premises. Image recognition is highly used to identify the quality of the final product to decrease the defects. Assessing the condition of workers will help manufacturing industries to have control of various activities in the system.

Image recognition helps autonomous vehicles analyze the activities on the road and take necessary actions. Mini robots with image recognition can help logistic industries identify and transfer objects from one place to another. It enables you to maintain the database of the product movement history and prevent it from being stolen.

Modern vehicles include numerous driver-assistance systems that help you avoid car accidents, prevent loss of control, and drive safely. ML algorithms allow the car to recognize the real-time environment, road signs, and other objects on the road. In the future, self-driving vehicles are predicted to be the advanced version of this technology.

Image recognition helps identify the unusual activities at the border areas and take automated decisions that can prevent infiltration and save the precious lives of soldiers.

eCommerce is one of the fastest-developing industries today. One of the eCommerce trends in 2021 is visual search based on deep learning algorithms. Nowadays, customers want to take photos of trendy items and check where they can purchase them, for instance with Google Lens.

eCommerce also makes use of image recognition technology to recognize brands and logos in images on social media, so that companies can accurately identify their target audience and understand its personality, habits, and preferences.

Different aspects of the education industry are improved using deep learning solutions. Currently, online education is common, and in these scenarios, it isn’t easy to track students’ reactions through their webcams. Neural network models help analyze student engagement, facial expressions, and body language.

Image recognition also enables automated proctoring during examinations, digitization of teaching materials, attendance monitoring, handwriting recognition, and campus security.

Social media platforms have to work with thousands of images and videos daily. Image recognition enables large photo collections to be classified through image cataloging and also automates content moderation to keep prohibited content off the social networks.

Moreover, monitoring social media text posts that mention a brand lets a company learn how consumers perceive and interact with the brand and what they say about it.

Visual impairment, also known as vision impairment, is a decreased ability to see, to a degree that causes problems not fixable by usual means. In the early days, social media was predominantly text-based, but now the technology has started to adapt to impaired vision.

Image recognition helps in designing and navigating social media to give visually impaired people a better experience. Aipoly is one such app used to detect and identify objects. The user points their phone’s camera at what they want to analyze, and the app tells them what they are seeing. The app uses deep learning algorithms to identify the specific object.

The most crucial factor for any image recognition solution is its precision in results, i.e., how well it can identify the images. Aspects like speed and flexibility come in later for most of the applications.

The company can compare the different solutions after labeling data as a test data set. In most cases, solutions trained on the company’s own data are superior to pre-trained solutions. If pre-trained solutions can reach the required level of precision, the company may avoid the cost of building a custom model.

Users should avoid generalizations based on a single test. A vendor who performs well for face recognition may not be good at vehicle identification because the effectiveness of an image recognition algorithm depends on the given application.
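A comparison of this kind can be sketched with a few lines of plain Python. The vendors, labels, and predictions below are entirely made up, and the fraction of correct predictions is used as a simple stand-in for whatever quality metric suits your application.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of test images whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)

# Hypothetical labeled test sets for two different applications.
test_sets = {
    "faces": ["alice", "bob", "alice", "bob"],
    "vehicles": ["sedan", "suv", "truck", "suv"],
}

# Hypothetical predictions returned by two vendors on those test sets.
predictions = {
    "vendor_a": {
        "faces": ["alice", "bob", "alice", "bob"],
        "vehicles": ["sedan", "sedan", "suv", "suv"],
    },
    "vendor_b": {
        "faces": ["alice", "alice", "alice", "bob"],
        "vehicles": ["sedan", "suv", "truck", "suv"],
    },
}

results = {
    vendor: {task: accuracy(test_sets[task], preds[task]) for task in test_sets}
    for vendor, preds in predictions.items()
}

for vendor, scores in results.items():
    print(vendor, scores)
```

In this invented example, one vendor is perfect on faces but poor on vehicles and the other is the reverse, which is why each solution should be scored on a test set drawn from the application you actually care about.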

Other such criteria include:

As you already know, many tech giants like Google, IBM, and AWS offer ready-made solutions for image recognition and machine learning. Suppose your task is a large-scale one, such as scanning and recognizing handwritten text, translating, or identifying animals or plants; in that case, you can use the ready-made neural algorithms these companies provide. Tech giants offer APIs that enable you to integrate image recognition into your software.

There are various advantages for the same:

Along with these ready-made products, there are many software environments, libraries, and frameworks that help you to build and deploy machine learning and deep learning algorithms efficiently. There are also industry-specific vendors. For instance, Visenze provides solutions for product tagging, visual search, and recommendation. Other than Visenze, some of the well-known ones are:

We, at Maruti Techlabs, have developed and deployed a series of computer vision models for our clients, targeting a myriad of use cases. One such implementation was for our client in the automotive eCommerce space. They offer a platform for the buying and selling of used cars, where car sellers need to upload their car images and details to get listed.

The Challenge:

Users upload close to 120,000 images per month on the client’s platform to sell their cars. Some of these uploaded images would contain racy or adult content instead of relevant vehicle images.

Manual approval of these massive volumes of images daily involved a team of 15 human agents and a lot of time. Such excessive levels of manual processing gave way to serious time sinks and errors in approved images. This led to poor customer experience and tarnished brand image.

The Solution:

As a solution, we built an image recognition model using Google Vision to eliminate irrelevant images from the platform. The model worked in two steps:

Step 1 – Detect car images and flag the rest

Step 2 – Verify car models against the details provided

The Computer Vision model automated two steps of the verification process. We used ~1500 images for training the model. With training datasets, the model could classify pictures with an accuracy of 85% at the time of deploying in production.
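The control flow of such a two-step check could look roughly like the sketch below. This is not the production implementation and does not call the Google Vision API: `detect_labels` is a hypothetical stand-in for whatever labeling service is used, and the image IDs, labels, and model names are invented.

```python
def review_upload(image_id, declared_model, detect_labels):
    """Run the two verification steps on one uploaded image."""
    labels = [label.lower() for label in detect_labels(image_id)]

    # Step 1: detect car images and flag the rest.
    if "car" not in labels:
        return "flagged: not a car image"

    # Step 2: verify the car model against the details provided by the seller.
    if declared_model.lower() not in labels:
        return "flagged: model does not match listing"

    return "approved"

def fake_detect_labels(image_id):
    """Hypothetical labeler that returns canned labels for the demo."""
    canned = {
        "img-1": ["Car", "Sedan", "Model-A"],
        "img-2": ["Beach", "Person"],
        "img-3": ["Car", "SUV", "Model-B"],
    }
    return canned.get(image_id, [])

print(review_upload("img-1", "Model-A", fake_detect_labels))
print(review_upload("img-2", "Model-A", fake_detect_labels))
print(review_upload("img-3", "Model-A", fake_detect_labels))
```

Passing the labeler in as a function keeps the two-step logic independent of any particular vision service, so it can be tested with canned labels like these.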

Building computer vision capabilities from scratch with an in-house team is no easy feat. This is where our computer vision services can help you define a roadmap for incorporating image recognition and related computer vision technologies. Our solutions are mostly managed in the cloud, and we can integrate image recognition with your existing app or use it to build a specific feature for your business. To get more out of your visual data, connect with our team here.