Why Is Multimodal AI Better than Single-Modal AI?

The importance of versatility in AI systems cannot be overstated. Traditional single-modal AI systems typically process one type of data, such as text, images, or audio, which limits their ability to comprehend complex, real-world scenarios.

Multimodal AI addresses these limitations by integrating multiple data types, enabling a more comprehensive understanding.

For instance, combining text, images, and audio enables AI systems to interpret context more accurately, leading to more nuanced interactions and informed decisions.

This article explores the evolution of multimodal AI, its key differences from single-modal AI, and its applications. It gives an overview of how multimodal AI surpasses single-modal AI and is shaping the future of artificial intelligence.

The term single-modal refers to the data used to develop an AI model: a single-modal model is built from, and works with, just one type of data.

Although relying on one data type limits what single-modal AI can do, it was the standard approach until the advent of multimodal machine learning.

Multimodal AI refers to machine learning models that can process and integrate data from multiple sources, or modalities. These data types include audio, images, video, text, and other sensory inputs.

Traditional AI models are designed to handle a single type of data. Multimodal AI, by contrast, can process and analyze different data inputs together to gain a more comprehensive understanding and produce more robust outputs.

For instance, given a landscape photo as input, a multimodal model can generate a written description of it. Conversely, it can take a written description of a landscape and generate a matching image.

Multimodal AI models can process and understand multiple types of data simultaneously, unlocking capabilities that single-modal AI cannot. Let’s understand the benefits a multimodal AI model offers over a single-modal AI.

Multimodal AI systems use Natural Language Processing (NLP) to interpret words and phrases, relating them to associated keywords and concepts.

This enables the model to grasp the context and provide an appropriate response. When integrated with multimodal AI, NLP models can draw on both visual and linguistic data to understand context.

Advanced multimodal models combine text, video, and images to enhance accuracy. Examining the same input through several modalities improves prediction accuracy across tasks.

For example, these models draw on several modalities to improve image captioning. They can also gauge a speaker's emotions more reliably by combining speech with facial features, which strengthens NLP capabilities.

Earlier AI models were limited to text or speech input, which was a barrier to a natural user experience. In contrast, multimodal AI understands user intent better by using text, visual, and voice cues together.

When integrated with virtual assistants, it uses text and audio recognition to work out the meaning of commands.

Image, text, and audio recognition capabilities make multimodal AI a unique technology. They add to the effectiveness and accuracy of task accomplishment.

Multimodal LLMs that can interpret both faces and audio can identify people efficiently.

By assessing audio and visual signals together, the model can tell apart people or objects that look or sound alike.

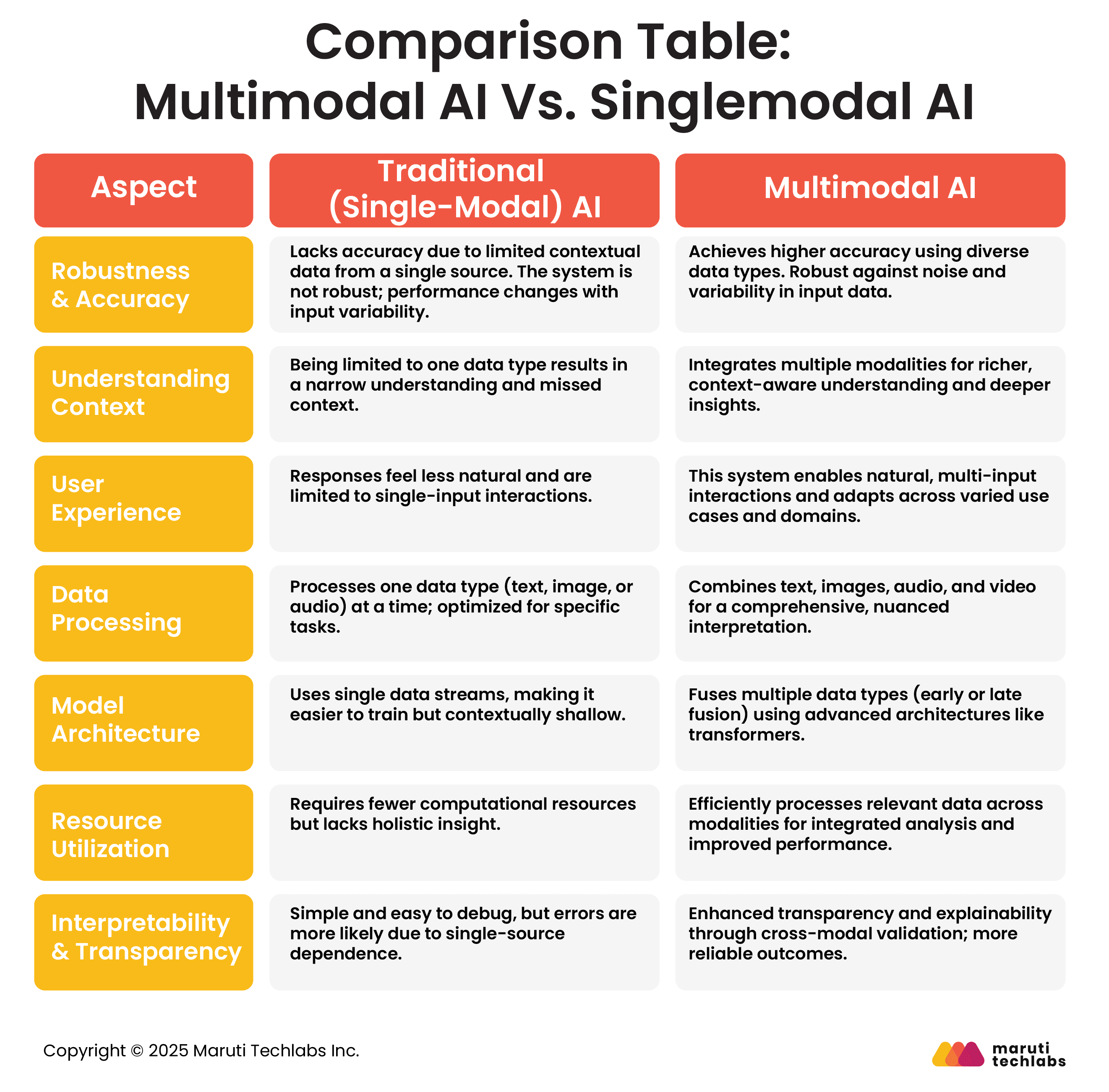

While traditional AI focuses on one type of data at a time, multimodal AI can integrate insights from multiple data sources for a comprehensive understanding. Here’s how multimodal AI compares with traditional AI in creating accurate models.

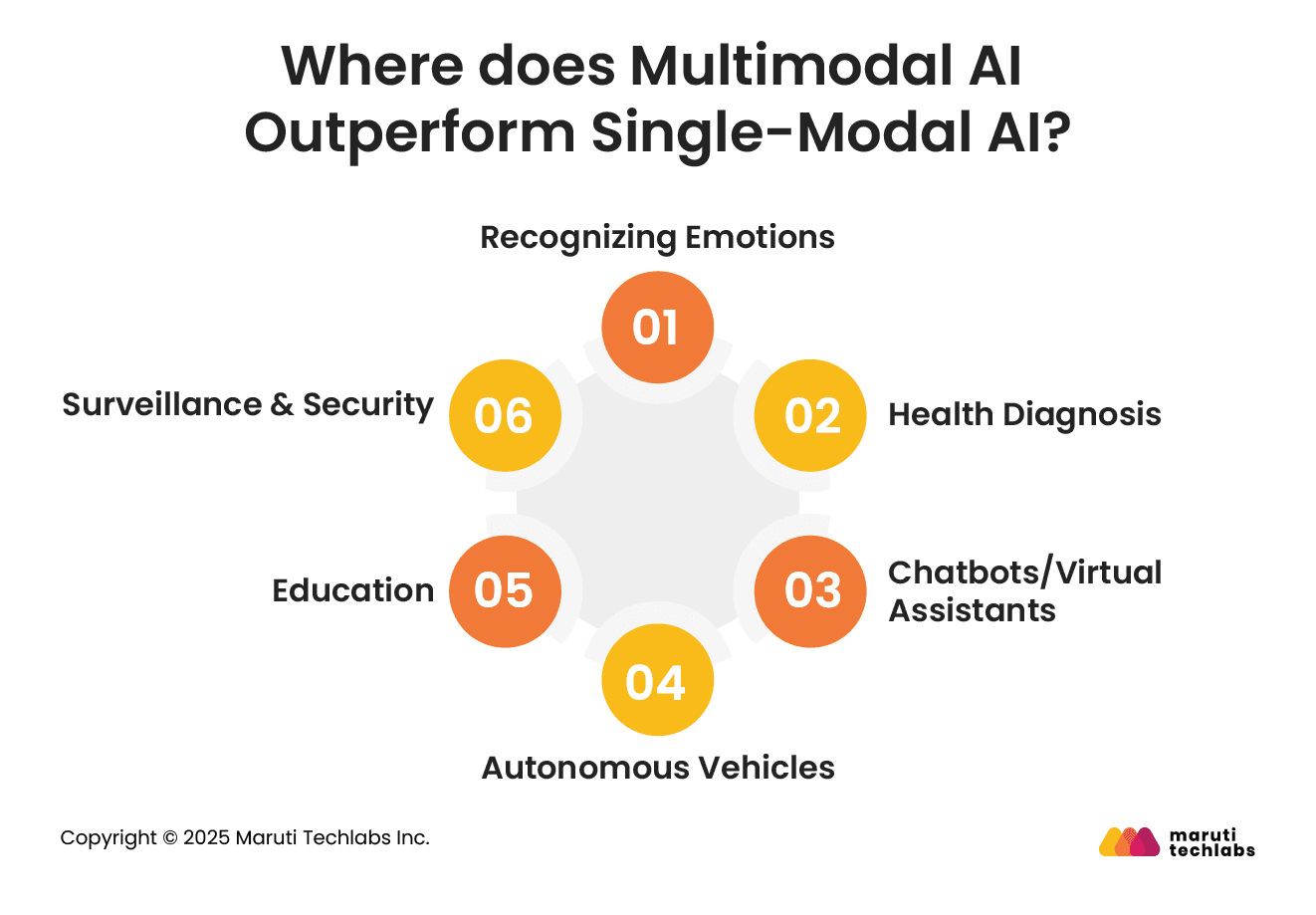

By combining information from different data types, multimodal AI can tackle complex problems that single-modal AI struggles with. Here are some aspects or areas where multimodal AI outperforms single-modal AI.

Let’s explore the top multimodal AI models used globally.

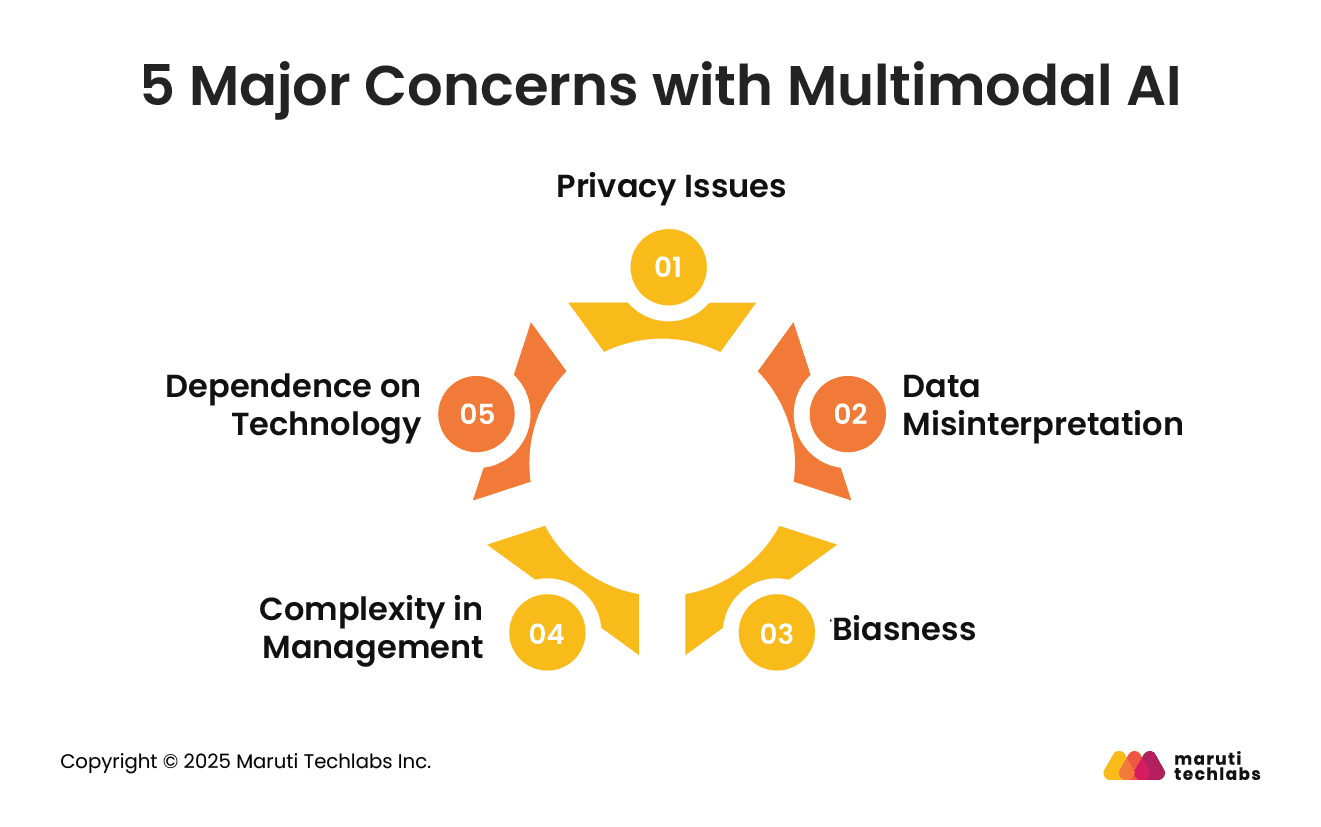

While multimodal AI offers powerful capabilities, it also introduces unique challenges that organizations need to address. Here are the most evident challenges or concerns observed with multimodal AI.

Multimodal AI systems handle large amounts of personal data, including images, voices, and text. Without strong protections, this access can expose sensitive information and pose serious privacy risks.

Although these systems can combine insights from different sources, they sometimes misread complex contexts, which can lead to inaccurate or even harmful decisions.

Multimodal AI can inherit and spread biases from the data it learns from. Because it processes diverse information, these biases can appear in many ways, affecting fairness and equality.

Managing and maintaining multimodal AI is more difficult than managing simpler systems. Its complexity can increase operational costs and make it harder to ensure consistent results.

A heavy reliance on multimodal AI may lead to a reduction in human judgment and critical thinking, as people become increasingly dependent on technology for decision-making.

Multimodal AI has emerged as a transformative force in artificial intelligence, offering capabilities that far surpass those of traditional single-modal systems. By integrating text, images, audio, and other data types, it provides a richer and more context-aware understanding, making interactions more intelligent and more human-like.

Unlike conventional AI, which is limited to a single data source, multimodal AI excels in complex scenarios such as customer service, healthcare diagnostics, autonomous driving, and personalized learning, enabling more accurate solutions.

Popular models like GPT-4o, Gemini, and DALL-E 3 showcase how versatile and powerful this technology has become. At the same time, open-source and research-focused systems like LLaVA and ImageBind continue to expand the technology's potential.

However, challenges such as privacy concerns, bias, complexity in management, and over-reliance on technology remain essential considerations.

If you’re planning to maximize the potential of AI, you can leverage Maruti Techlabs’ specialized Artificial Intelligence Services to develop customized, intelligent, and reliable solutions tailored to your unique needs.

Get in touch with us today and explore how multimodal AI can work wonders for your business applications.

Want to assess how ready your organization is to adopt advanced AI systems like multimodal models? Try our AI Readiness Assessment Tool to evaluate your current AI maturity and uncover opportunities for growth.

Multimodal AI is an artificial intelligence system that processes and integrates multiple types of data, such as text, images, audio, and video.

By combining these data streams, it can understand context more deeply, provide richer insights, and perform tasks that require cross-modal reasoning, unlike traditional single-modal AI.

Multimodal AI uses specialized models and fusion techniques to process different data types. Early fusion combines raw inputs or low-level features before a single model processes them, while late fusion merges the outputs of separate models, one per modality.
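To make the difference between the two fusion styles concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how any particular production model works: the feature values, the weights, the two-class setup, and the function names (`early_fusion`, `late_fusion`) are all made up for this example, and a simple linear scorer stands in for a real neural network.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear(features, weights):
    """One score per class: dot product of the features with each weight row."""
    return [sum(f * w for f, w in zip(features, row)) for row in weights]

def early_fusion(text_feats, image_feats, joint_weights):
    """Concatenate the modalities first, then run a single joint model."""
    fused = text_feats + image_feats  # list concatenation, not addition
    return softmax(linear(fused, joint_weights))

def late_fusion(text_feats, image_feats, text_weights, image_weights):
    """Run one model per modality, then average their predictions."""
    text_probs = softmax(linear(text_feats, text_weights))
    image_probs = softmax(linear(image_feats, image_weights))
    return [(t + i) / 2 for t, i in zip(text_probs, image_probs)]

# Toy inputs (assumed values): 2 text features, 3 image features, 2 classes.
text_feats = [0.2, 0.9]
image_feats = [0.5, 0.1, 0.7]
text_weights = [[1.0, -1.0], [-1.0, 1.0]]
image_weights = [[0.5, 0.0, -0.5], [-0.5, 0.0, 0.5]]
# For the joint model, reuse the same weights side by side (one row per class).
joint_weights = [tw + iw for tw, iw in zip(text_weights, image_weights)]

early_probs = early_fusion(text_feats, image_feats, joint_weights)
late_probs = late_fusion(text_feats, image_feats, text_weights, image_weights)
print("early fusion:", early_probs)
print("late fusion: ", late_probs)
```

In this toy setup both approaches favor the second class, but they get there differently: early fusion lets one model see every feature at once, while late fusion keeps the per-modality models independent and only combines their final probabilities.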

Advanced architectures, such as transformers, align and correlate information across modalities, enabling AI to understand complex relationships and make context-aware decisions across text, vision, audio, and more.
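One common mechanism transformers use to relate modalities is cross-attention, where each text token scores every image region and builds a weighted mix of the most relevant ones. The sketch below shows only that core step in plain Python. The two-dimensional embeddings, the words, and the `cross_attention` helper are hypothetical, and the learned projection matrices of a real transformer are deliberately left out.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """For each query, return (attention weights over keys, weighted mix of values)."""
    scale = math.sqrt(len(keys[0]))  # scaled dot-product attention
    results = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        results.append((weights, mixed))
    return results

# Hypothetical embeddings: text tokens act as queries, image patches as keys and values.
text_tokens = {"dog": [1.0, 0.0], "grass": [0.0, 1.0]}
image_patches = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]

results = cross_attention(list(text_tokens.values()), image_patches, image_patches)
for word, (weights, _) in zip(text_tokens, results):
    print(word, [round(w, 2) for w in weights])
```

With these assumed embeddings, "dog" puts the most weight on the first patch and "grass" on the second, which is the kind of alignment between words and image regions that the paragraph above describes.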

Yes, ChatGPT is multimodal when it runs on models such as GPT-4o. These models can process text alongside images and audio, allowing users to interact naturally through multiple inputs. This enhances understanding, context awareness, and response quality, making the AI more versatile than single-modal versions that rely on text alone.

Generative AI focuses on creating new content, such as text, images, or music, based on learned patterns. Multimodal AI, on the other hand, integrates and interprets multiple data types to understand context or make decisions.

While generative AI can be multimodal, its primary goal is content generation, not comprehensive understanding.

Multimodal AI faces challenges including privacy concerns, potential bias, high computational requirements, and complex management. Misinterpretation of combined data can lead to errors.

A heavy reliance on technology may reduce human judgment, and developing robust, accurate systems requires extensive data, advanced models, and meticulous oversight.