A Practical Guide to Domain-Adaptive Pretraining for Custom Models

Large Language Models (LLMs) like GPT, LLaMA, and Mistral excel at everyday tasks such as answering questions or writing summaries. However, in specialized fields like healthcare, finance, law, or research, they often fall short.

The challenge is that fine-tuning on a small labeled dataset often can’t supply the deep domain knowledge, vocabulary, and context these models need. Without that foundation, they risk overlooking critical details or producing inaccurate results in sensitive settings.

This is where Domain-Adaptive Pretraining (DAPT) comes in. Instead of relying only on fine-tuning, DAPT continues the pretraining process with large volumes of unlabeled, domain-specific text. This helps models adapt better to the specialized language, structure, and patterns of the target domain.

In this blog, we’ll cover what DAPT is, its key benefits, the step-by-step approach, real-world use cases, and practical tips for implementing domain adaptation in LLMs.

Domain-Adaptive Pretraining (DAPT) is a method for adapting a pretrained language model to a specialized field. Instead of going straight to fine-tuning, DAPT continues the pretraining stage, with the same self-supervised objective, on a large set of unlabeled, domain-specific text. For example, a healthcare model might be trained further on biomedical papers, while one for law could use legal documents.

This extra step helps the model pick up the jargon, structure, and context of the domain. When you later fine-tune it on smaller labeled datasets, it typically performs better than a model fine-tuned without DAPT. In simple terms, DAPT gives the model domain knowledge first, and fine-tuning then refines it for the actual task. This two-stage recipe is a widely used approach to domain adaptation for LLMs.
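For an encoder such as RoBERTa, “continuing the pretraining stage” means running masked language modeling on domain text. The sketch below shows the BERT-style masking scheme RoBERTa uses (select about 15% of tokens; replace 80% of those with a mask token, 10% with a random token, and leave 10% unchanged) applied to one clinical sentence. It is a minimal illustration, assuming whitespace tokenization and a hypothetical `<mask>` string; real pipelines use the model’s subword tokenizer and a library data collator.

```python
import random

MASK_TOKEN = "<mask>"  # hypothetical placeholder; real tokenizers define their own mask token


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Apply BERT/RoBERTa-style masking to a list of tokens.

    Returns (inputs, labels): labels[i] holds the original token the model must
    predict at position i, or None where no prediction is required.
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_TOKEN  # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token in the input
    return inputs, labels


sentence = "the patient presented with acute myocardial infarction and elevated troponin".split()
inputs, labels = mask_tokens(sentence, vocab=sentence, seed=3)
print(inputs)
print(labels)
```

Because the labels come from the text itself, any unlabeled domain corpus can feed this objective. RoBERTa also re-samples the mask each time a sequence is seen (dynamic masking), which a fresh seed per pass would mimic here.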

A related method is Task-Adaptive Pretraining (TAPT), which utilizes unlabeled text from the specific task itself, such as customer reviews for sentiment analysis. While TAPT sharpens task focus, DAPT builds broad domain expertise.

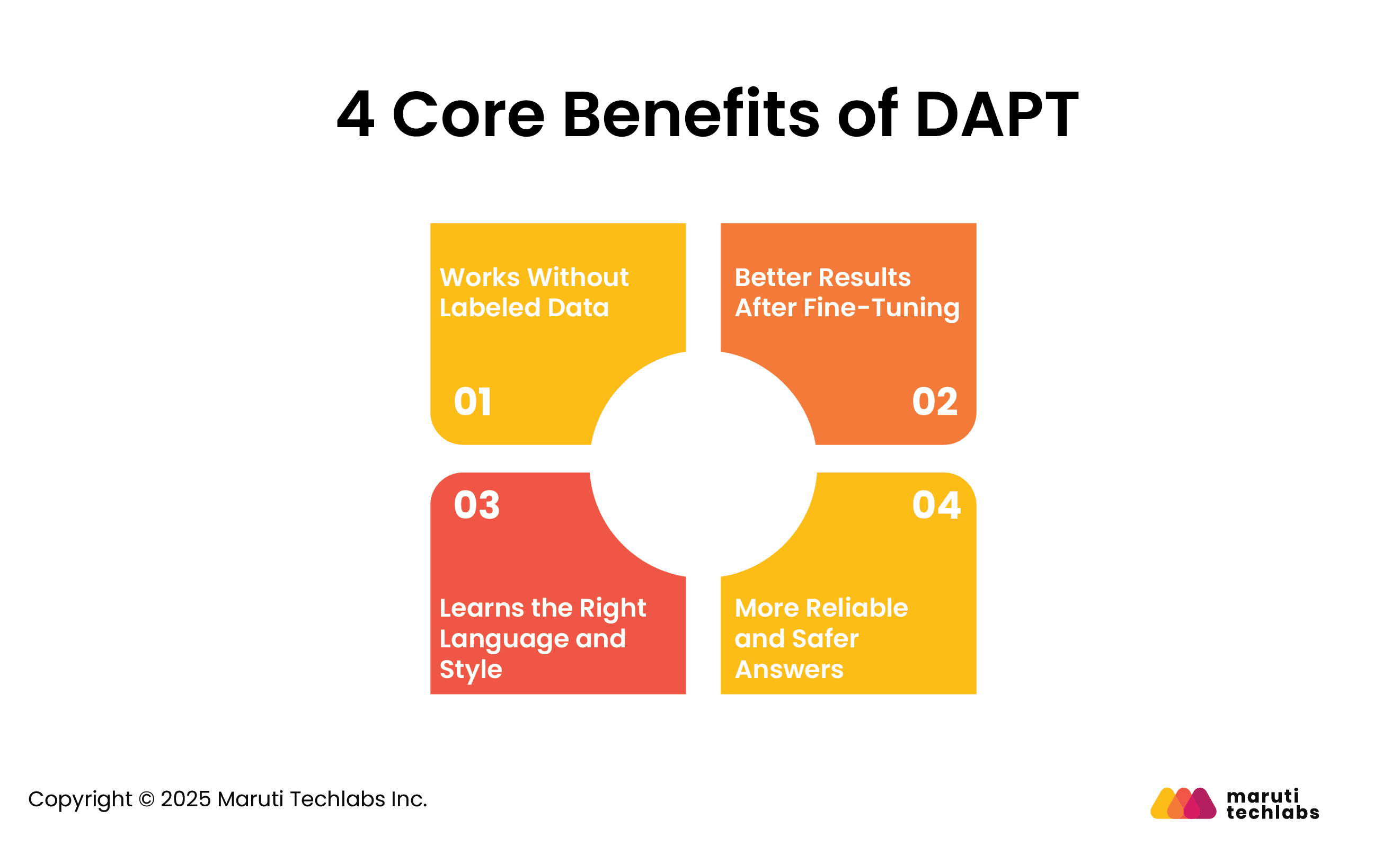

Domain-Adaptive Pretraining (DAPT) brings several clear advantages when building models for specific fields. Here are four key benefits:

One of the biggest advantages of DAPT is that it doesn’t need annotated or labeled data. You can use raw documents like reports, research papers, or even web pages from your domain. This makes it much easier to train models in areas where labeled data is scarce or expensive to create.
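Labels aren’t needed because the pretraining objective builds its own targets from raw text. For decoder-only LLMs such as GPT, LLaMA, or Mistral, that objective is next-token prediction: text is tokenized, concatenated, and cut into fixed-length blocks, and each token’s label is simply the token that follows it. The sketch below assumes the text is already converted to integer token IDs (the tiny word-to-ID mapping is hypothetical) and performs the one-position shift explicitly; some training libraries instead copy the inputs as labels and apply the shift inside the model.

```python
def make_causal_lm_examples(token_ids, block_size):
    """Cut a token stream into (input, target) pairs for next-token prediction.

    Each block takes block_size + 1 tokens so the target (the input shifted left
    by one) is complete; a trailing remainder that cannot fill a block is dropped.
    """
    examples = []
    for start in range(0, len(token_ids) - block_size, block_size):
        chunk = token_ids[start:start + block_size + 1]
        examples.append((chunk[:-1], chunk[1:]))
    return examples


# Hypothetical word-level "tokenizer" for a snippet of raw, unlabeled contract text
text = "the lessee shall indemnify the lessor against all claims arising from use of the premises"
vocab = {word: idx for idx, word in enumerate(sorted(set(text.split())))}
token_ids = [vocab[word] for word in text.split()]

for inputs, targets in make_causal_lm_examples(token_ids, block_size=4):
    print(inputs, "->", targets)
```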

Models that go through DAPT generally perform better when you fine-tune them later for specific tasks. Since the model already understands the domain’s language and context, fine-tuning typically takes less effort and can deliver higher accuracy in the final output.

Every industry has its own way of writing, whether it’s medical notes, legal contracts, or financial reports. DAPT helps models identify these patterns, resulting in output that feels more natural, professional, and aligned with the domain.

By grounding the model in real-world domain text, DAPT can reduce the chances of hallucinations or made-up information, though it doesn’t eliminate them. This is especially important in sensitive fields such as healthcare or law, where inaccurate outputs can have severe consequences.

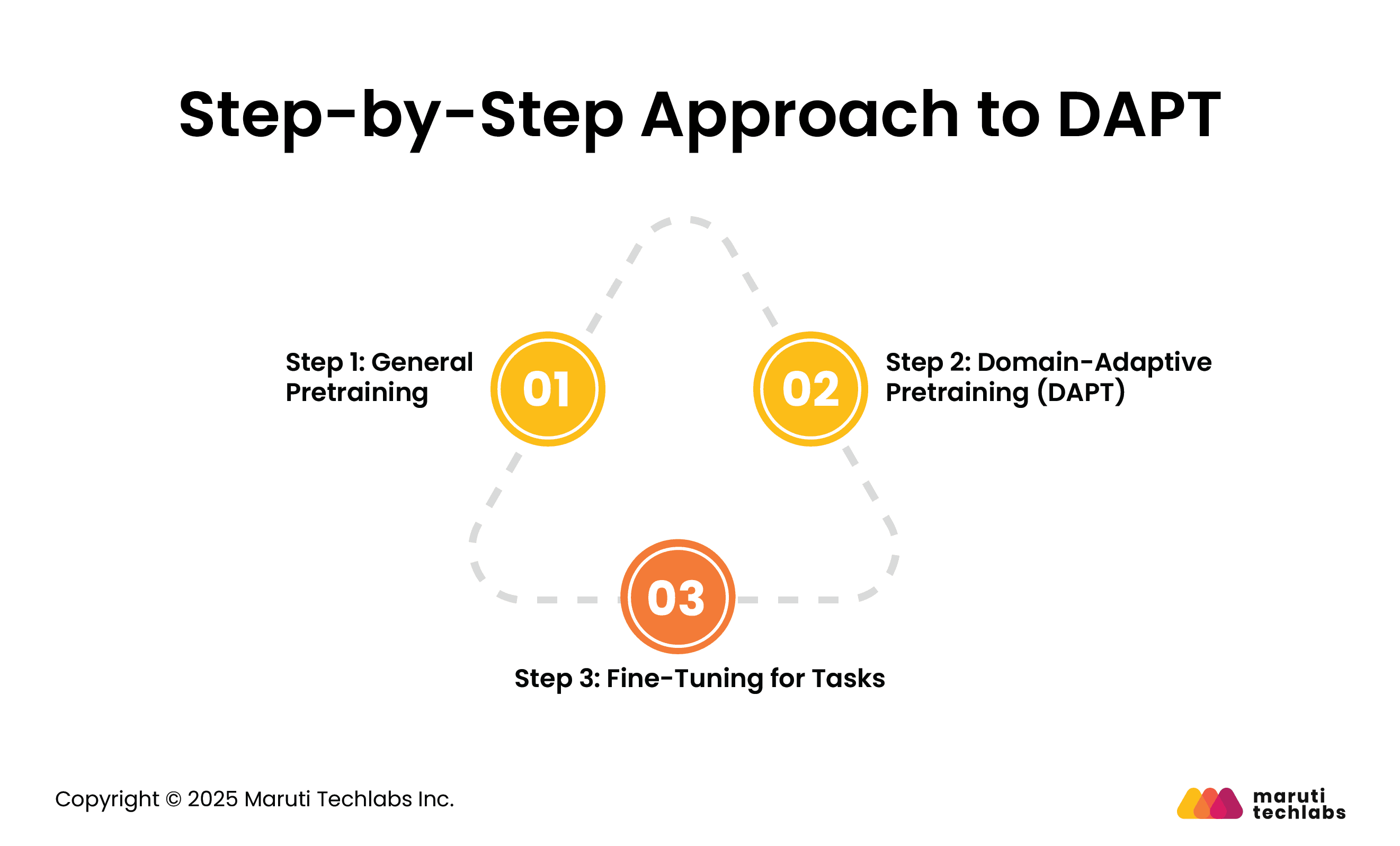

The process of Domain-Adaptive Pretraining (DAPT) builds on a model that has already gone through general pretraining and then follows two main steps: extra pretraining on domain data, followed by fine-tuning for specific tasks. Let’s break it down:

Every language model begins by learning from vast, diverse datasets, such as books, articles, and websites. This provides a solid foundation in grammar, context, and general knowledge.

Once the base is ready, the model continues training on text from a specific field, such as medical journals, legal documents, or research papers. This step helps the model absorb the vocabulary, style, and structure of the domain so it “speaks the same language.”

After DAPT, the model is fine-tuned on labeled datasets for specific tasks, such as analyzing contracts or classifying medical records. Since the model already knows the domain language, fine-tuning becomes easier and typically produces better results.
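The domain step is the same training loop run again on new data, starting from the state the general stage produced. A real LLM learns through gradient updates to a neural network, not word counts, so treat the toy below only as an illustration under that simplification: a count-based bigram model is first trained on general sentences, then training continues on a few hypothetical clinical sentences, and its prediction for the word after “acute” shifts toward the medical sense. The task fine-tuning step is not shown.

```python
from collections import Counter, defaultdict


class BigramLM:
    """Toy count-based bigram model, used only to illustrate continued training."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, corpus):
        # Counts accumulate across calls, so a second call continues training
        # from the current state instead of starting over.
        for sentence in corpus:
            words = sentence.lower().split()
            for prev, nxt in zip(words, words[1:]):
                self.counts[prev][nxt] += 1

    def prob(self, prev, nxt):
        """Estimated P(nxt | prev) from the counts seen so far."""
        total = sum(self.counts[prev].values())
        return self.counts[prev][nxt] / total if total else 0.0


general_text = ["an acute angle is less than ninety degrees", "draw the acute angle first"]
clinical_text = [
    "acute myocardial infarction requires urgent care",
    "acute kidney injury was noted on admission",
    "signs of acute myocardial ischemia",
]

model = BigramLM()
model.train(general_text)   # general pretraining
before = model.prob("acute", "myocardial")
model.train(clinical_text)  # continued (domain-adaptive) training
after = model.prob("acute", "myocardial")
print(f"P(myocardial | acute): {before:.2f} -> {after:.2f}")  # P(myocardial | acute): 0.00 -> 0.40
```

The general-text counts are still there after the second round, so the model keeps some probability on “angle”; in a neural model, how much general knowledge survives depends on the learning rate, the amount of domain data, and how long training runs.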

A key research paper supports this two-step approach. In “Don’t Stop Pretraining” (Gururangan et al., 2020), researchers continued pretraining the RoBERTa model on domain-specific data for approximately 12,500 steps, which improved its performance on tasks in specialized domains such as biomedical and computer science papers.

They then tested Task-Adaptive Pretraining (TAPT), where the model was trained further on unlabeled text directly related to the task: for example, customer reviews for sentiment analysis. TAPT yielded consistent improvements and, when combined with DAPT, produced the best results by integrating both domain knowledge and task-specific focus.

Based on these findings, a sensible default is to start with DAPT when sufficient domain text is available. For even stronger results, add TAPT on top so the model gains both domain knowledge and task-specific focus.
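A step count alone, like the roughly 12,500 DAPT steps in the study above, doesn’t say how much domain text the model processed; that also depends on batch size and sequence length. The arithmetic below is a sketch: the batch size, sequence length, and corpus size are hypothetical placeholders, not settings reported in the paper, and the result is an upper bound because it assumes every sequence is full length.

```python
def tokens_seen(steps, batch_size, seq_len):
    """Upper bound on training tokens: every sequence in every batch is full length."""
    return steps * batch_size * seq_len


def epochs_over_corpus(total_tokens, corpus_tokens):
    """How many passes over the domain corpus those tokens represent."""
    return total_tokens / corpus_tokens


# Hypothetical settings, chosen only to illustrate the calculation
total = tokens_seen(steps=12_500, batch_size=256, seq_len=512)
print(f"{total:,} tokens")  # 1,638,400,000 tokens
print(f"{epochs_over_corpus(total, corpus_tokens=500_000_000):.2f} epochs")  # 3.28 epochs
```

If the number of epochs comes out high, the model may start memorizing a small corpus; if it is well below one, part of the domain text is never seen.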

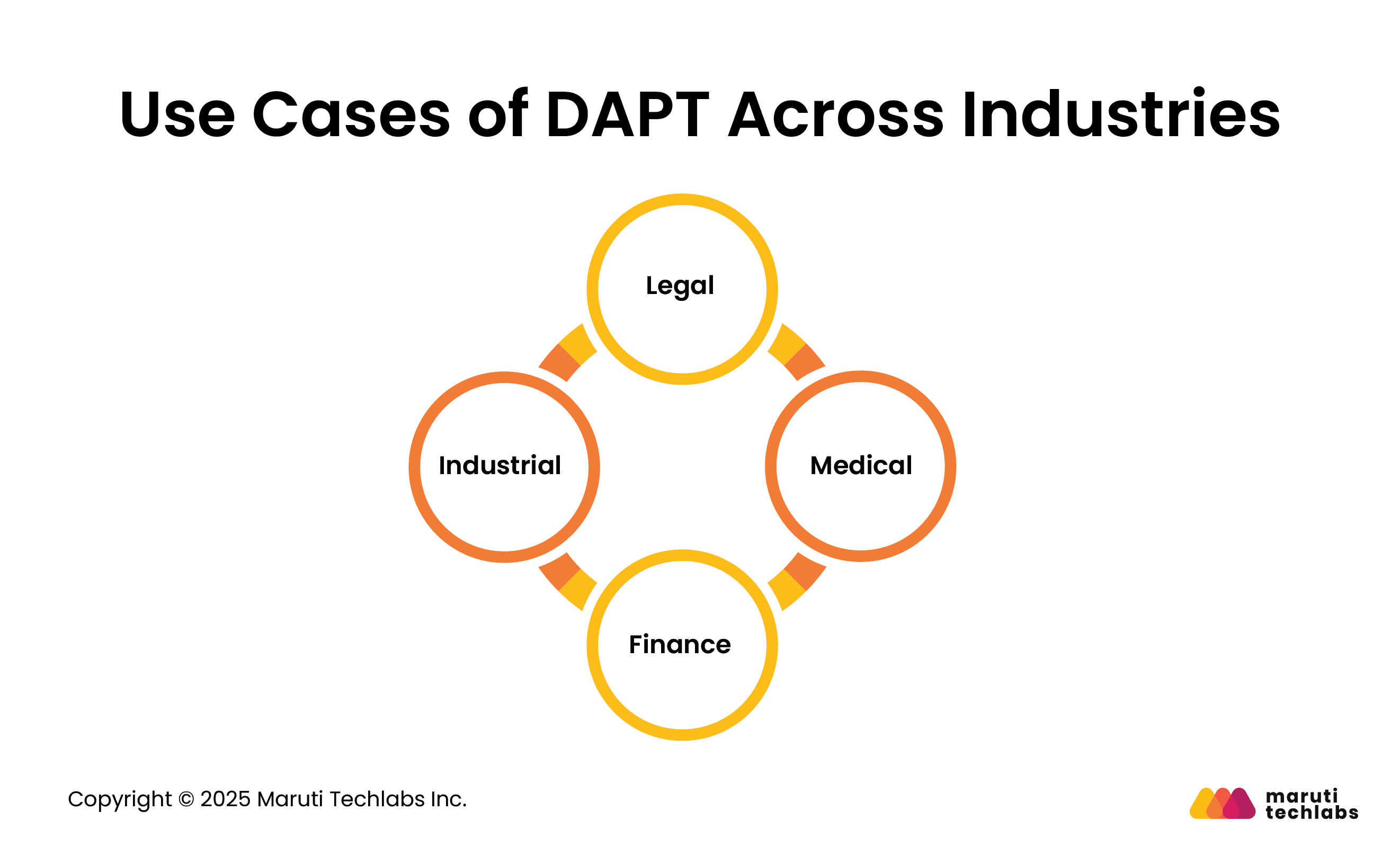

Domain-Adaptive Pretraining (DAPT) shines wherever language models need to handle specialized knowledge instead of everyday text. By training on the right kind of data, the model picks up the unique words, tone, and structure of a field. Here’s how it helps in different industries:

Legal documents are packed with jargon and written in a very formal style. A general model often struggles with this. With DAPT, the model can be trained on contracts, case law, and compliance documents, enabling it to understand the language lawyers actually use. This means faster legal research, better compliance checks, and fewer chances of misinterpreting essential clauses.

Physicians and researchers rely on terms and formats rarely found in general text. By training on clinical notes, medical reports, and research papers, DAPT enables models to understand this specialized language. The outcome is clearer patient record summaries, more accurate diagnostic support, and faster access to the latest research, making it a valuable tool for sensitive healthcare decisions.

Financial documents are full of technical terms, numbers, and strict formats. By training on annual reports, statements, and regulatory filings, a DAPT-enhanced model can interpret them more accurately. This can support better risk assessment, fraud detection, and forecasting, all areas where errors are costly.

In manufacturing and industrial sectors, workers rely on manuals, standard operating procedures (SOPs), and safety guidelines. DAPT trains models on this technical documentation, enabling them to provide more precise instructions, troubleshoot issues, and support maintenance activities. This can improve safety and efficiency in industrial operations.

Across these industries, DAPT helps models grasp not only general language but also domain-specific terminology, producing outputs that are more accurate, reliable, and useful in real-world applications.

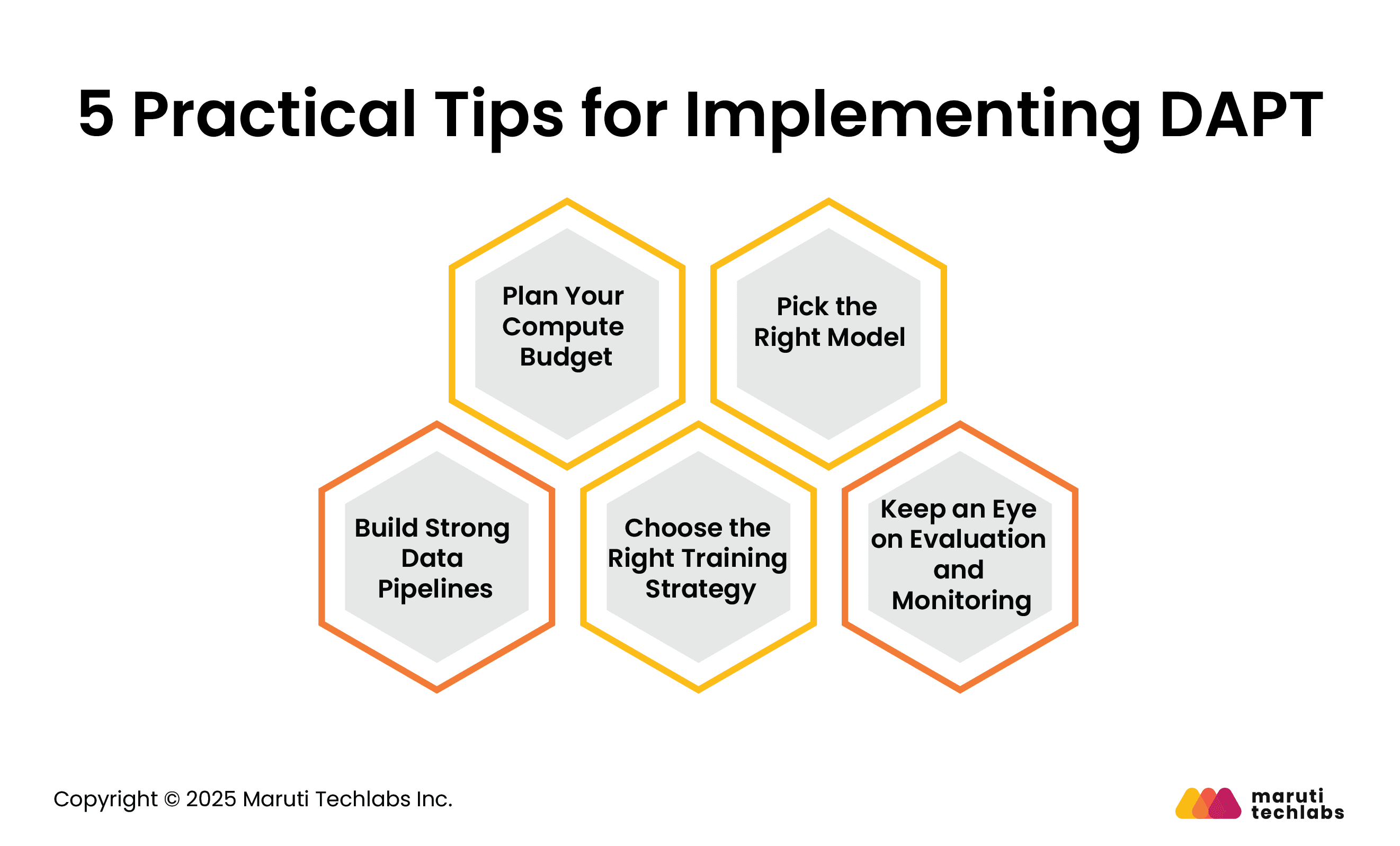

Starting with Domain-Adaptive Pretraining (DAPT) can feel daunting at first. But if you break it into simple steps, the process becomes much easier to manage. Here are five tips to help you get going:

Since DAPT involves extra training, you’ll need to think ahead about the resources it requires. Decide how many GPUs or TPUs you can use, how long the training should run, and what costs are reasonable. A little planning up front saves you from unexpected hurdles later.
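A quick way to size the compute is the widely used rule of thumb that training a dense transformer costs about 6 FLOPs per parameter per token (roughly 2 for the forward pass and 4 for the backward pass). The sketch below turns that into GPU-hours. Every input is an assumption to replace with your own numbers: the model size and token count are hypothetical, 312 TFLOPS is the NVIDIA A100’s published dense BF16 peak, and 40% utilization is a guess. The estimate ignores evaluation, data loading, and restarts.

```python
def training_flops(n_params, n_tokens):
    # Rule of thumb for dense transformers: ~6 FLOPs per parameter per training token
    return 6 * n_params * n_tokens


def gpu_hours(n_params, n_tokens, peak_flops_per_gpu, utilization):
    """Convert total FLOPs into GPU-hours at a given fraction of peak throughput."""
    effective_flops_per_second = peak_flops_per_gpu * utilization
    return training_flops(n_params, n_tokens) / effective_flops_per_second / 3600


# Hypothetical run: 7B-parameter model, 1B domain tokens, A100-class GPUs at 40% utilization
hours = gpu_hours(
    n_params=7_000_000_000,
    n_tokens=1_000_000_000,
    peak_flops_per_gpu=312e12,
    utilization=0.4,
)
print(f"~{hours:.0f} GPU-hours")  # ~93 GPU-hours
```

Dividing by the number of GPUs gives a rough wall-clock time, and multiplying by your hourly rate gives a first cost estimate.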

Bigger isn’t always better. Instead of going for the largest model, choose a base model size and architecture that fits your domain and available resources. A smaller, well-adapted model often performs better than a large one that’s poorly tuned.
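Before picking a model size, it helps to check whether it fits in memory. A common rule of thumb for full training with Adam in mixed precision is about 16 bytes per parameter: 2 for half-precision weights, 2 for gradients, 4 for an FP32 master copy of the weights, and 4 each for Adam’s two moment estimates. The sketch below applies that rule; it excludes activations, which grow with batch size and sequence length, so treat the results as a lower bound. The model sizes are illustrative.

```python
# Approximate bytes per parameter; activations and framework overhead are excluded
BYTES_PER_PARAM = {
    # fp16/bf16 weights (2) + grads (2) + fp32 master weights (4) + Adam m (4) + Adam v (4)
    "full_training_mixed_precision_adam": 16,
    # half-precision weights only, e.g. for inference
    "inference_half_precision": 2,
}


def memory_gb(n_params, regime):
    """Rough memory footprint in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[regime] / 1e9


for name, n_params in [("125M", 125_000_000), ("1.3B", 1_300_000_000), ("7B", 7_000_000_000)]:
    train = memory_gb(n_params, "full_training_mixed_precision_adam")
    infer = memory_gb(n_params, "inference_half_precision")
    print(f"{name}: ~{train:.1f} GB to train, ~{infer:.1f} GB to serve")
```

By this estimate a 7B model needs over 100 GB for weights and optimizer state alone, which usually means multiple GPUs or a sharded optimizer for full training, and is one reason parameter-efficient methods are attractive.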

Your results are only as good as your data. Set up reliable pipelines to collect, clean, and organize domain-specific text — whether it’s reports, research papers, or manuals. High-quality, domain-relevant data makes all the difference.
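A minimal cleaning pass might normalize whitespace, drop fragments too short to carry much domain signal, and remove exact duplicates so the model doesn’t over-learn repeated boilerplate. The sketch below assumes documents are already extracted as plain strings; the 20-word default threshold is an arbitrary placeholder, and production pipelines usually add steps not shown here, such as near-duplicate detection, language identification, and removal of personal data.

```python
import hashlib
import re


def clean_corpus(docs, min_words=20):
    """Normalize whitespace, drop short documents, and remove exact duplicates."""
    seen_hashes = set()
    cleaned = []
    for doc in docs:
        text = re.sub(r"\s+", " ", doc).strip()  # collapse runs of spaces, tabs, newlines
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        # Hash a case-folded copy so documents differing only in case count as duplicates
        digest = hashlib.sha256(text.casefold().encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue
        seen_hashes.add(digest)
        cleaned.append(text)
    return cleaned


raw_docs = [
    "Page 3",
    "The  borrower shall\nrepay the principal in full.",
    "the borrower shall repay the principal in full.",
]
print(clean_corpus(raw_docs, min_words=4))  # ['The borrower shall repay the principal in full.']
```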

Updating every parameter isn’t always necessary. Parameter-efficient techniques such as LoRA or adapters train only a small set of added parameters, which cuts memory and compute while often achieving results close to full training. Choose the approach that aligns best with your goals and resources.
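LoRA (Low-Rank Adaptation) keeps a pretrained weight matrix W frozen and learns a low-rank update, so the layer effectively uses W + (alpha / r) · B · A, where B is d×r, A is r×k, and the rank r is much smaller than d or k. Because B starts at zero, the adapted model initially behaves exactly like the base model. The pure-Python sketch below shows that arithmetic and the parameter savings for one layer; the 4096×4096 shape and rank 8 are illustrative assumptions, and in practice you would use a maintained library such as Hugging Face PEFT rather than hand-rolled matrices. Adapters, which insert small bottleneck layers instead, are not shown.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def lora_effective_weight(W, A, B, alpha):
    """Return W + (alpha / r) * B @ A, where W is d x k (frozen), B is d x r, A is r x k."""
    r = len(A)
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


def trainable_params(d, k, r):
    """Parameters updated by full training of one d x k matrix vs. its LoRA factors."""
    return {"full": d * k, "lora": r * (d + k)}


W = [[1.0, 2.0], [3.0, 4.0]]  # frozen pretrained weights (toy 2 x 2)
A = [[0.5, -0.5]]             # r x k with r = 1 (normally small random values)
B = [[0.0], [0.0]]            # d x r, initialized to zero
print(lora_effective_weight(W, A, B, alpha=1.0))  # [[1.0, 2.0], [3.0, 4.0]]

counts = trainable_params(d=4096, k=4096, r=8)
print(counts, f"{counts['lora'] / counts['full']:.2%}")  # {'full': 16777216, 'lora': 65536} 0.39%
```

During training only A and B receive gradient updates, so the optimizer state shrinks along with the trainable parameter count.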

Monitoring model performance is crucial. Test it on domain-specific tasks, ensure it doesn’t overfit, and evaluate how it performs in real-world scenarios. With these steps, DAPT becomes less of a heavy process and more of a practical tool that delivers real impact in your domain.
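One simple check is to compare perplexity (the exponential of the average per-token negative log-likelihood) on held-out domain text and on held-out general text, before and after DAPT: domain perplexity should fall, while a large rise in general perplexity suggests the model is forgetting what it knew. The sketch below assumes you already have per-token log-probabilities from your model; the perplexity values and the 10% drift tolerance are hypothetical. Perplexity is only a proxy, so downstream task metrics still matter.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative natural-log probability per token."""
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


def adaptation_report(before, after, max_general_drift=0.10):
    """Compare perplexities (lower is better) measured before and after DAPT."""
    domain_gain = (before["domain"] - after["domain"]) / before["domain"]
    general_drift = (after["general"] - before["general"]) / before["general"]
    return {
        "domain_gain": round(domain_gain, 3),
        "general_drift": round(general_drift, 3),
        "possible_forgetting": general_drift > max_general_drift,
    }


# A model assigning probability 0.25 to every token has perplexity 4 (up to rounding)
print(perplexity([math.log(0.25)] * 4))

# Hypothetical held-out perplexities
print(adaptation_report(before={"domain": 20.0, "general": 10.0},
                        after={"domain": 12.0, "general": 11.5}))
```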

Domain-Adaptive Pretraining (DAPT) is a practical approach to enhancing the performance of general AI models for specific domains. Instead of building a model from scratch, DAPT continues training a pre-trained model on domain-specific text. This helps it understand specialized words, styles, and context, making it more accurate for tasks in healthcare, legal, finance, or industrial domains.

Compared with training a custom model from scratch, DAPT needs no labeled data for its pretraining stage and can save a great deal of time and compute. It also makes later fine-tuning on specific tasks more effective. Simply put, DAPT is a practical and scalable way to adapt language models to specialized domains, improving reliability and usefulness without a complete overhaul.

Maruti Techlabs collaborates with organizations to implement domain-adapted AI solutions, enabling them to leverage the benefits of DAPT for real-world applications. With a focus on Custom AI Development, we help businesses build intelligent systems tailored to their unique needs. To learn more, get in touch via our contact page.


Domain adaptation is a method for adjusting a pre-trained model to make it work well in a specific domain. It helps the model learn domain-specific terms, patterns, and structures, thereby improving accuracy and reliability without requiring the construction of a new model from scratch for each specialized area.

In voice and video AI, domain adaptation fine-tunes models to handle specialized sounds, accents, or visual patterns. For example, it improves speech recognition for medical terms or video analysis for industrial safety. This ensures AI delivers accurate and reliable results in real-world, domain-specific scenarios.

Key challenges include limited labeled data, differences between the source and target domains, and the need to avoid overfitting. Collecting high-quality domain-specific datasets and managing computational resources are also critical. Proper planning is crucial to ensure the model adapts effectively while maintaining robust performance.
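To guard against overfitting during adaptation, a common technique is early stopping: evaluate on a held-out validation set at regular intervals and stop once the loss has failed to improve for a set number of evaluations. The sketch below is a minimal version; the patience value and the loss sequence are hypothetical.

```python
import math


class EarlyStopper:
    """Signal a stop after `patience` evaluations without a meaningful improvement."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest decrease that counts as an improvement
        self.best_loss = math.inf
        self.evals_without_improvement = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.evals_without_improvement = 0
        else:
            self.evals_without_improvement += 1
        return self.evals_without_improvement >= self.patience


stopper = EarlyStopper(patience=3)
for step, loss in enumerate([2.10, 1.85, 1.72, 1.74, 1.76, 1.79, 1.70]):
    if stopper.should_stop(loss):
        print(f"stopping at evaluation {step}; best validation loss {stopper.best_loss}")
        break
# stopping at evaluation 5; best validation loss 1.72
```

In practice you would also keep the checkpoint from the best evaluation rather than the last one.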