Containerization for Microservices: A Path to Agility and Growth

As a developer or business owner, you are familiar with the ongoing demands of growing a business, addressing technical challenges, and driving innovation—all while ensuring that your systems remain reliable and efficient. Balancing all these needs can be challenging, especially as applications grow more complex. This is where containerized services can make a real difference.

By allowing different parts of an application to run independently, containerized services help ensure that a problem in one component does not disrupt the entire system. This structure keeps your systems running smoothly and makes them easier to manage and scale.

In this blog, we’ll dive deep into the essential concepts behind containerized services, break down their key benefits, and explore how they can reshape the way you build, manage, and scale applications.

Containerized microservices are a contemporary approach to developing and deploying applications. In this approach, each service runs autonomously in its own container. A container bundles the service's code, libraries, and configuration, so the service runs consistently on any host with a compatible container runtime, OS kernel, and CPU architecture, without the usual compatibility problems.

Unlike virtual machines, containers share the host operating system's kernel, which makes them lighter and faster. This efficiency lets you run many containers on a single server, reducing resource usage and costs. And because containers do not boot a full guest operating system, they start quickly, allowing rapid deployment and minimal downtime.

One key advantage of containerized services is fault isolation: if one microservice encounters an issue, the others typically keep running. You can update, scale, or repair individual microservices without interrupting the entire system, which makes it resilient and adaptable.

Whether running a large enterprise or a growing startup, containerized services offer a cost-effective, scalable solution for managing applications. They enable you to adapt, innovate, and grow with ease.

Let’s look at how containerized microservices work behind the scenes to provide flexibility, scalability, and efficiency for modern applications.

To understand how containerized services work, it’s useful to first look at some older strategies and their drawbacks.

The traditional approach of running each microservice on its own dedicated physical server isolates services but makes poor use of today's high-performance servers. Modern servers have far more computing power than a typical microservice requires, so dedicating an entire server to one service wastes most of its capacity.

Other strategies aim to use server resources more efficiently, but each comes with its own trade-offs.

At first glance, hosting multiple microservices on a single operating system may seem like an efficient approach. However, this method carries significant risks. Since all microservices share the same OS, they can easily run into conflicts—especially when using different versions of libraries or dependencies. These clashes can cause system errors. If one microservice fails, it can trigger a chain reaction, potentially disrupting the operation of other services and leading to system-wide issues.
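To make this kind of clash concrete, here is a minimal Python sketch that scans the library versions each service pins and reports any library required at more than one version. The service names, libraries, and versions are hypothetical. On a single shared OS, each reported conflict means at least one service would run against a version it was not built for; in containers, each service ships its own copy instead.

```python
from collections import defaultdict

# Hypothetical pinned dependencies for three services that would share one OS.
SERVICES = {
    "orders": {"requests": "2.31", "protobuf": "3.20"},
    "billing": {"requests": "2.31", "protobuf": "4.25"},
    "search": {"numpy": "1.26"},
}


def find_conflicts(services: dict[str, dict[str, str]]) -> dict[str, dict[str, list[str]]]:
    """Return libraries that different services pin to different versions."""
    # library -> version -> services that require that version
    versions = defaultdict(lambda: defaultdict(list))
    for service, deps in services.items():
        for lib, version in deps.items():
            versions[lib][version].append(service)
    # A library conflicts when more than one distinct version is required.
    return {lib: dict(by_version) for lib, by_version in versions.items() if len(by_version) > 1}


if __name__ == "__main__":
    for lib, by_version in find_conflicts(SERVICES).items():
        print(f"conflict on {lib}: {by_version}")
```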

Virtual machines give every microservice an isolated environment, which sounds like an ideal strategy. However, virtual machines are resource-hungry because each one runs its own full guest operating system. This adds licensing costs and consumes CPU, memory, and storage that the services themselves never use. As a result, managing microservices at scale on virtual machines is expensive and complex.

Containerized services avoid these drawbacks: they isolate microservices from one another while sharing the host kernel, which makes managing microservices easier and more cost-effective for growing, innovative businesses.

Now, let’s uncover the full range of benefits and how they can transform application management.

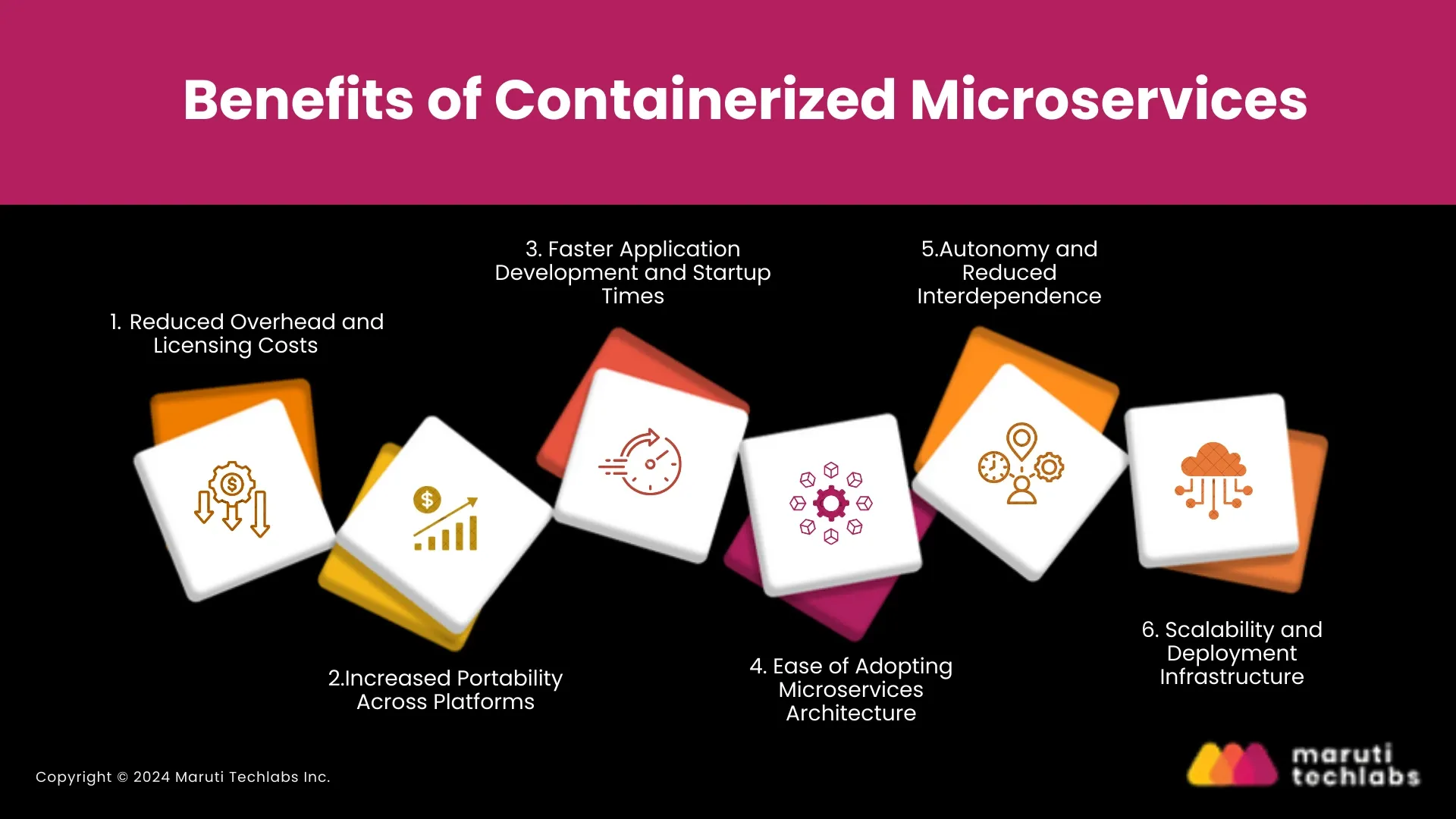

Containerized microservices provide numerous advantages that enable businesses to accelerate innovation, improve scalability, and optimize their operations.

Below are some key benefits of adopting containerized microservices:

One of the biggest benefits of containerized services is reduced overhead. Containers share the host machine's OS kernel, so they use system resources more efficiently than virtual machines. This efficiency reduces infrastructure needs, lowering hardware and licensing costs. By consuming fewer resources, containers help businesses scale applications without a proportional rise in operational costs.

In addition, because containers do not need a separate operating system per service, there are fewer operating system instances to patch and maintain. This streamlines operations, letting companies focus on growth instead of complex infrastructure challenges.

Perhaps the most important advantage of containerized services is portability across environments. Containers package application code together with its dependencies, so the application behaves consistently from development through testing to production. This packaging makes it easy to deploy apps across several platforms while reducing compatibility problems.

As a result, containers simplify migrating applications between environments and typically shorten troubleshooting, since fewer issues stem from differences between environments, which leads to smoother deployments.

When speed matters, containerized services provide a significant advantage. With just a few clicks, developers can quickly spin up environments for testing, debugging, or deploying applications. The rapid setup allows teams to release updates more frequently, leading to faster iterations and product launches.

Additionally, because containers start quickly, they scale well: an orchestrator can add instances rapidly to absorb traffic surges or growing usage and remove them when demand falls. This flexibility helps businesses scale efficiently without long delays or paying for idle capacity.

When a company adopts microservices, using containerized services is an excellent choice. Each container creates a distinct environment for individual microservices, allowing for modular application development.

This modularity lets teams divide the application into smaller, more manageable components that can be developed, tested, and deployed independently, making the system as a whole easier to manage and more versatile.

Adopting a microservices architecture with containerized services improves both scalability and team autonomy. Since each service runs independently in its own container, services depend less on one another. As a result, an issue or update in one service is less likely to disrupt the others, which improves system stability and overall uptime.

Combined with supporting services, emerging platform components, and PaaS abstractions, containers become crucial tools for scaling infrastructure. On cloud platforms, organizations can automate their scaling policies and build applications that adjust automatically as workloads change.

For instance, as traffic rises and falls, an orchestrator can automatically add container instances during periods of high demand and remove them when traffic is low, without anyone manually reconfiguring resources. This flexibility is essential for controlling costs and maintaining service quality for clients, whether for new and growing ventures or well-established organizations.
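The scaling decision itself can be simple. The Python sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current_replicas * current_metric / target_metric), and clamps the result to minimum and maximum bounds. It is a simplification: the real autoscaler also applies a tolerance, stabilization windows, and pod-readiness handling, and the function and parameter names here are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so the service stays within its allowed size.
    return max(min_replicas, min(max_replicas, raw))
```

For example, a service running 3 replicas at 90% average CPU against a 60% target would be scaled to ceil(4.5) = 5 replicas, while 4 replicas at 20% would shrink to 2.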

While the benefits of containerized microservices are clear, it's also important to understand the challenges they bring. Let’s explore the key obstacles to consider.

Containerized microservices have numerous benefits, but they also create real difficulties for an enterprise. Being aware of these issues will help you make informed decisions about adoption and give you better insight into the process.

Managing multiple containers and coordinating their deployment, scaling, and networking can be complex. Tools like Kubernetes streamline these processes, but they require a substantial investment in learning and infrastructure setup.
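At the heart of orchestrators such as Kubernetes is a control loop that repeatedly compares the desired state with what is actually running and acts on the difference. The following Python sketch shows one such reconciliation pass for replica counts only. The `reconcile` function, the `Action` type, and the service names are hypothetical, and a real orchestrator also handles scheduling, health checks, and networking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str      # "start" or "stop"
    service: str
    count: int


def reconcile(desired: dict[str, int], running: dict[str, int]) -> list[Action]:
    """Compute the actions that move running replica counts toward the desired ones."""
    actions: list[Action] = []
    # Consider services that are desired, running, or both (sorted for stable output).
    for service in sorted(desired.keys() | running.keys()):
        diff = desired.get(service, 0) - running.get(service, 0)
        if diff > 0:
            actions.append(Action("start", service, diff))
        elif diff < 0:
            actions.append(Action("stop", service, -diff))
    return actions


if __name__ == "__main__":
    # "legacy" is running but no longer desired, so it gets stopped.
    print(reconcile({"api": 3, "worker": 2}, {"api": 1, "worker": 2, "legacy": 1}))
```

An orchestrator would run a pass like this continuously, so the system converges back to the desired state after crashes or manual changes.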

As the number of microservices grows, service discovery becomes critical, because each service must locate the instances of the other services it needs to call. Load balancing is equally important for spreading incoming traffic across multiple instances of the same service. One of the main challenges in a containerized environment is developing effective strategies for both.
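As an illustration of both ideas, here is a minimal in-memory Python sketch: instances register their addresses in a registry, and a `resolve` call hands out instances in round-robin order. The `ServiceRegistry` class and the addresses are hypothetical; in Kubernetes, Services and cluster DNS typically provide discovery and basic load balancing instead.

```python
import itertools
from collections.abc import Iterator


class ServiceRegistry:
    """Toy in-memory registry mapping a service name to its instance addresses."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = {}
        self._cursors: dict[str, Iterator[str]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)
        # Rebuild the rotation so the new instance is included immediately.
        self._cursors[service] = itertools.cycle(list(self._instances[service]))

    def resolve(self, service: str) -> str:
        """Return the next instance for `service` in round-robin order."""
        if service not in self._cursors:
            raise LookupError(f"no instances registered for {service!r}")
        return next(self._cursors[service])


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("payments", "10.0.0.5:8080")
    registry.register("payments", "10.0.0.6:8080")
    for _ in range(3):
        print(registry.resolve("payments"))
```

A production registry would also deregister instances that fail health checks, so traffic is never routed to a dead container.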

Microservices depend heavily on the network, which makes communication between them challenging. Because services run in different containers, often on different hosts, the network between them must be properly configured and secured so that information flows reliably.

Maintaining consistency and data integrity across distributed services can be difficult. Because each microservice may own its own data store, the same data can fall out of sync between services. Synchronizing data efficiently, for example through an event-driven architecture in which services publish changes that other services consume, is key to keeping data consistent and accessible.
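The event-driven pattern can be sketched in a few lines of Python. Below, a hypothetical `customers` service publishes an address change, and a hypothetical `shipping` service updates its own copy of the data when it receives the event. The in-process `EventBus` is a stand-in assumption for a real message broker such as Kafka or RabbitMQ; with a broker, delivery is asynchronous, so services become eventually consistent rather than instantly in sync.

```python
from collections import defaultdict
from typing import Callable

Event = dict[str, object]
Handler = Callable[[Event], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (a stand-in for a real message broker)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # Deliver the event synchronously to every subscriber of the topic.
        for handler in self._subscribers[topic]:
            handler(event)


# The hypothetical "shipping" service keeps its own copy of customer addresses.
shipping_addresses: dict[str, str] = {}


def on_address_changed(event: Event) -> None:
    shipping_addresses[str(event["customer_id"])] = str(event["address"])


bus = EventBus()
bus.subscribe("customer.address_changed", on_address_changed)

# The hypothetical "customers" service owns the data and announces every change.
bus.publish("customer.address_changed", {"customer_id": "c-42", "address": "1 Main St"})
```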

With microservices running in containers, monitoring becomes more complex, especially when tracking the health of many services at once. Collecting each service's logs, metrics, and traces is difficult without a systematic approach.

Reliable analysis of these signals requires proper instrumentation of every service. With appropriate tools and methods, however, these hurdles can be overcome to build a reliable, observable system.
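One common building block is structured logging: each service writes log lines as JSON that carry the service name and a trace ID forwarded between services, so a central collector can correlate a single request across many containers. The Python sketch below uses only the standard `logging` module; the field names and the `make_logger` helper are hypothetical, and many teams use a framework such as OpenTelemetry for traces and metrics.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON line so a central collector can parse it."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "service": self.service,
            "level": record.levelname,
            "message": record.getMessage(),
            # trace_id is attached via `extra=`; it links log lines across services.
            "trace_id": getattr(record, "trace_id", None),
        }
        return json.dumps(payload)


def make_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = make_logger("orders")
    trace_id = uuid.uuid4().hex  # generated at the edge, then forwarded to downstream services
    log.info("order received", extra={"trace_id": trace_id})
```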

Containerized microservices present new security risks. It’s crucial to manage access controls, secure inter-service communication, and defend container environments against threats. Strong security mechanisms like encryption and authentication are required to reduce threats.
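As one example of authenticating inter-service calls, the Python sketch below signs each request body with HMAC-SHA256 using a shared secret and a timestamp; the receiving service rejects requests whose signature does not match or whose timestamp is too old. This is an illustrative assumption, not the only approach: many deployments use mutual TLS instead, often through a service mesh. The secret, the message layout, and the five-minute window are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical shared secret; in practice it comes from a secrets manager, never source code.
SHARED_SECRET = b"replace-with-a-real-secret"


def sign(body: bytes, timestamp: int, secret: bytes = SHARED_SECRET) -> str:
    """Return a hex HMAC-SHA256 signature over the timestamp and request body."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(body: bytes, timestamp: int, signature: str, max_age_s: int = 300,
           secret: bytes = SHARED_SECRET, now: Optional[float] = None) -> bool:
    """Accept a request only if the signature matches and the timestamp is recent."""
    current = time.time() if now is None else now
    if abs(current - timestamp) > max_age_s:
        return False  # stale or future-dated: possibly a replayed request
    expected = sign(body, timestamp, secret)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)
```

Including the timestamp in the signed message means an attacker cannot simply reuse an old signature with a fresh timestamp.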

Switching to containerized microservices often requires a DevOps-oriented culture. Building robust continuous integration and delivery (CI/CD) pipelines is essential for automating the development, testing, and deployment of microservices. Teams may find the transition challenging, as they must adopt new technologies and methodologies and modify existing workflows.

Containerized microservices are transforming how businesses develop, manage, and scale their applications. By breaking down monolithic structures into independent services, companies benefit from improved scalability, reduced overhead, faster deployment, and greater flexibility. Unlike traditional methods like physical servers or virtual machines, containerized services are more efficient and adaptable to changing business needs.

Although implementing these services presents challenges such as service discovery, load balancing, and network security, they can be managed effectively with the right tools and practices, including Kubernetes and DevOps.

Maruti Techlabs helps businesses overcome these challenges, offering tailored solutions to optimize operations and drive innovation. With our expertise in containerized services, your business will be well-prepared to thrive in competitive environments and accurately meet customer expectations. Contact us today!

Containerized services reduce infrastructure costs by removing the need for a separate operating system per service and by optimizing resource usage. By using resources more efficiently, businesses can achieve significant savings.

Companies can switch to a microservices design progressively by integrating containerized services with their current applications. This modernizes the application stack with minimal disruption.

Containerized services benefit all types of businesses, including startups and established enterprises. They are particularly valuable for organizations that need to scale rapidly or manage complex applications efficiently.

Maruti Techlabs offers tailored solutions for adopting containerized services, including application development, deployment automation, and microservices architecture design. Our expertise helps businesses leverage these technologies to improve productivity and drive innovation.

The security of containerized services depends on how they are built, just as with any other microservices architecture. Running each service in its own container can limit how far a vulnerability in one service spreads to the others, although containers share the host kernel and are not a complete security boundary on their own. Following established guidelines for using containers safely further strengthens security.