From Free-Form to Structured: A Better Way to Use LLMs

Large Language Models (LLMs) have transformed the way we generate text, but their outputs are often unstructured and inconsistent. Instead of delivering a clear and structured response, they can produce unpredictable results.

This leads to additional effort in correcting errors, cleaning the output, and, in some cases, dealing with applications that fail when the expected format is missing. All of this adds up to wasted time and higher costs.

Structured outputs help fix this. They ensure the model’s response follows a proper format, such as JSON, so that it can be used directly without additional processing. To support this, OpenAI and other providers have started adding the feature, making LLMs more reliable and easier to integrate into real applications.

In this post, we’ll look at what structured output means, why it matters, how it differs from regular output, the main techniques for producing it, common challenges, and real-world examples.

Structured outputs are answers in a fixed format, like JSON, XML, or Markdown. Standard LLM text is free-form, which can be messy and unclear. Structured outputs make the response clean, predictable, and easy for machines to process without requiring additional work.

OpenAI first addressed this in 2023 with JSON mode, which makes the model return syntactically valid JSON on request. However, JSON mode does not guarantee that the JSON matches a particular schema: fields can be missing, misnamed, or of the wrong type. Structured outputs close that gap by constraining the response to a schema you supply.

They strictly enforce a schema, which improves accuracy and makes it much easier to connect LLM responses with APIs, databases, and other tools. According to OpenAI, prompt engineering alone achieved only about 35.9% reliability on its evaluation of complex JSON schema following, while structured outputs reach 100% when strict mode is enabled. That figure measures adherence to the schema, not the correctness of the values inside it.
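To make "enforcing a schema" concrete, here is a minimal sketch of the check a consumer would otherwise have to run on every response. It uses only the standard library; the `INVOICE_FIELDS` mapping and the `validate` helper are hypothetical names for illustration, and real projects would more likely use a library such as `jsonschema` or Pydantic.

```python
import json

# Hypothetical schema: field name -> expected Python type after json.loads.
INVOICE_FIELDS = {"invoice_id": str, "total": float, "paid": bool}


def validate(raw: str, fields: dict) -> dict:
    """Parse raw JSON text and check it against the expected fields and types."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = fields.keys() - data.keys()
    extra = data.keys() - fields.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for name, expected in fields.items():
        value = data[name]
        # Accept ints where floats are expected; JSON has a single number type.
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            continue
        if not isinstance(value, expected):
            raise ValueError(f"{name}: expected {expected.__name__}")
    return data


good = '{"invoice_id": "INV-7", "total": 120.5, "paid": false}'
bad = '{"invoice_id": "INV-7", "total": "120.5"}'  # wrong type, missing field

print(validate(good, INVOICE_FIELDS))
```

With schema enforcement on the provider side, a response like `bad` should not be produced in the first place, so this kind of check becomes a safety net rather than the main line of defense.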

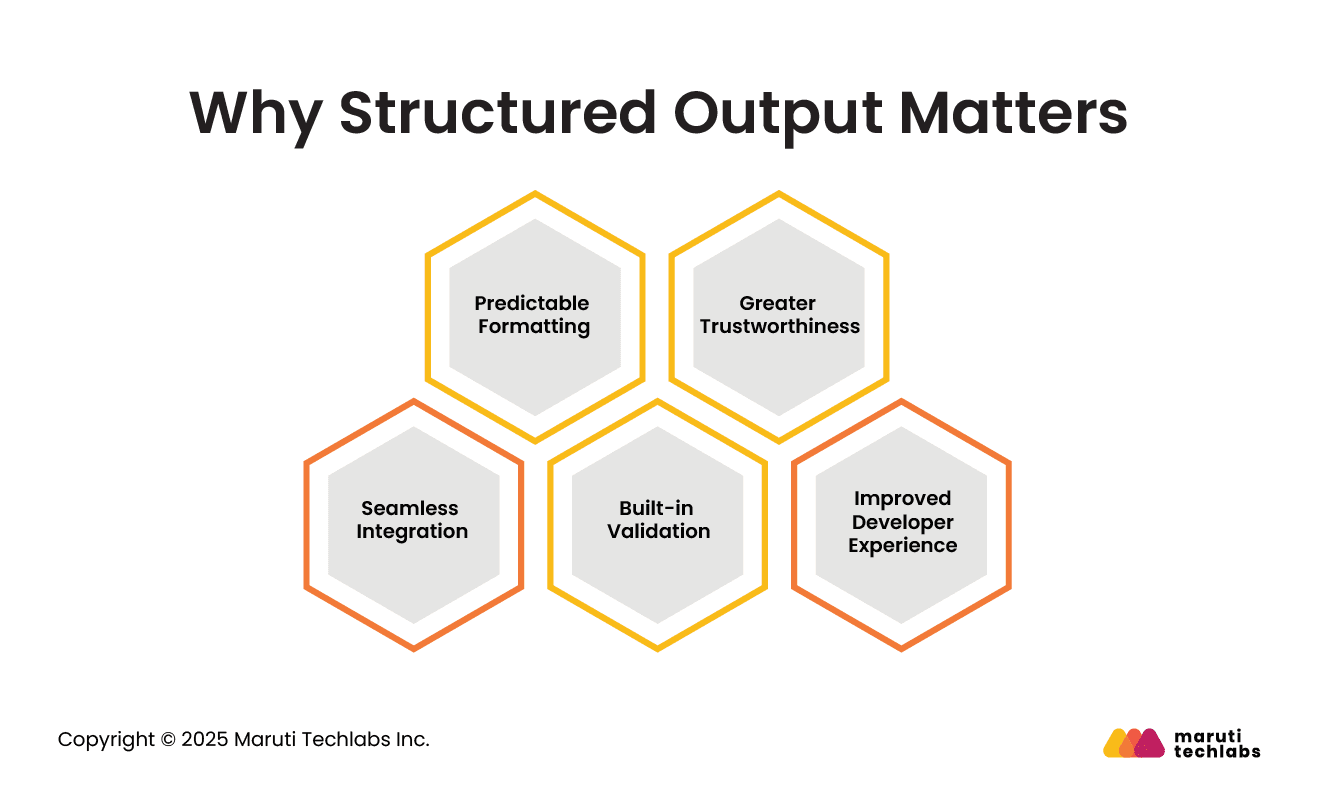

The value of structured outputs can be seen in several ways:

By shifting from free-form answers to structured outputs, LLMs become more than conversational tools; they turn into dependable data providers for real-world applications.

| Comparison Area | Traditional LLM Outputs | Structured Outputs |
| --- | --- | --- |
| Type of Response | Free-flowing text, often messy or inconsistent. | Follows a fixed format like JSON or XML. |
| Consistency | Can change from one response to another, causing errors. | Consistently follows the set format, making results predictable. |
| Handling Mistakes | Mistakes and missing parts are common, needing manual checks. | Reduces errors by sticking to the defined structure. |
| Prompting Effort | Requires lengthy, detailed prompts to elicit the desired response. | Shorter prompts work since the format is already enforced. |
| Checking the Output | Often requires cleaning and fixing after output. | Built-in checks ensure the output meets requirements. |
| Connecting with Systems | Harder to integrate because the format keeps changing. | Easy to plug into apps or APIs that expect structured data. |
| Missing Information | May skip essential details. | Ensures all required fields are included. |
| Wrong Values | Can generate incorrect or unexpected values. | Only produces values allowed by the schema. |
| Safety Signals | Hard to clearly spot refusals or unsafe content. | Safety issues are flagged in a clear, programmatic way. |
| Where It Works Best | Suitable for creative or open-ended tasks. | Best for tasks needing structured, machine-readable responses. |
| Effort to Set Up | Easier to start with, but needs more cleanup later. | Slightly harder to set up, but gives cleaner, consistent results. |
| Working with APIs | Requires additional steps or custom parsing to integrate with APIs. | Directly usable with APIs that accept JSON or XML. |

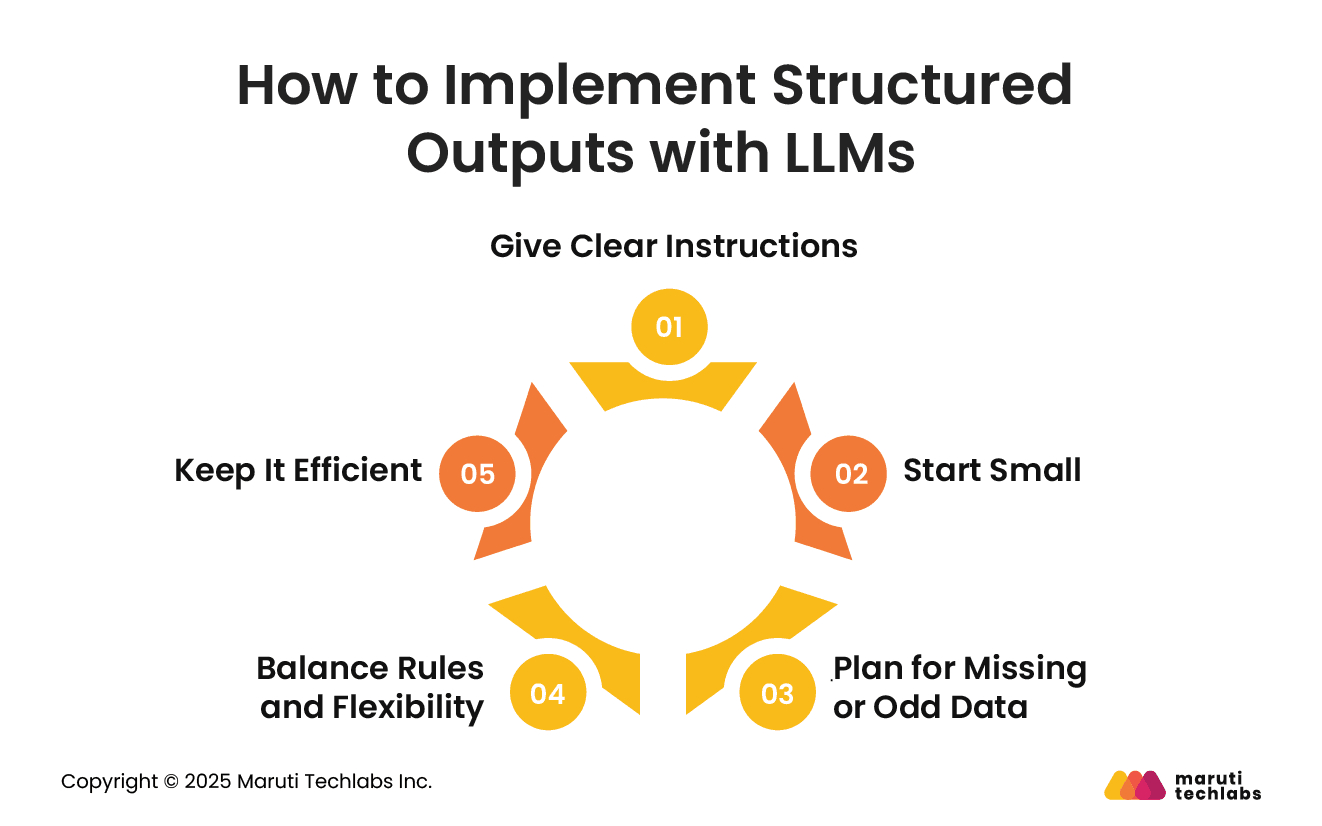

Structured outputs make LLM responses more reliable, but setting them up carefully is key. Here are some simple ways to do it:

Explain each field in a short and specific way. The more precise you are, the easier it is for the model to return the right information.

Begin with a simple structure that covers your primary needs. You can make it more complex later if needed.

Sometimes the information might be missing or unclear. Decide in advance how your system should handle these cases so it doesn’t break.

If the structure is too strict, the model may fail when the data doesn’t fit perfectly. If it’s too loose, you might not get consistent results. Find a middle ground that works.

Bigger, more detailed structures require more tokens, which can slow down the process and increase costs. Try to make your structure simple yet sufficient to convey what you need.

By following these tips, you can set up structured outputs that are easy to use, reliable, and flexible enough for real-world tasks.
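The tips above can be sketched in a small JSON Schema. Everything here is an illustrative assumption: the support-ticket use case, the field names, and the `normalize` helper are hypothetical. The schema keeps each description short and specific, stays flat, and marks a field as nullable so the model has a defined way to say "not stated"; `normalize` then applies the fallback decided in advance.

```python
import json

# Hypothetical schema for a support-ticket extractor: three flat fields only.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "customer_name": {
            "type": ["string", "null"],  # null is the agreed signal for "missing"
            "description": "Full name as written in the message; null if not stated.",
        },
        "priority": {
            "type": "string",
            "enum": ["low", "medium", "high"],  # strict where values must be exact
            "description": "Urgency implied by the message.",
        },
        "summary": {
            "type": "string",  # loose where free text is fine
            "description": "One-sentence description of the problem.",
        },
    },
    "required": ["customer_name", "priority", "summary"],
    "additionalProperties": False,
}


def normalize(ticket: dict) -> dict:
    """Apply the pre-decided fallbacks for missing or unexpected values."""
    allowed = TICKET_SCHEMA["properties"]["priority"]["enum"]
    priority = ticket.get("priority")
    return {
        "customer_name": ticket.get("customer_name") or "unknown",
        "priority": priority if priority in allowed else "medium",
        "summary": (ticket.get("summary") or "").strip(),
    }


reply = json.loads('{"customer_name": null, "priority": "high", "summary": " Login fails "}')
print(normalize(reply))
```

Keeping the schema this small also limits the token cost mentioned above, since both the schema and the generated keys count toward usage.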

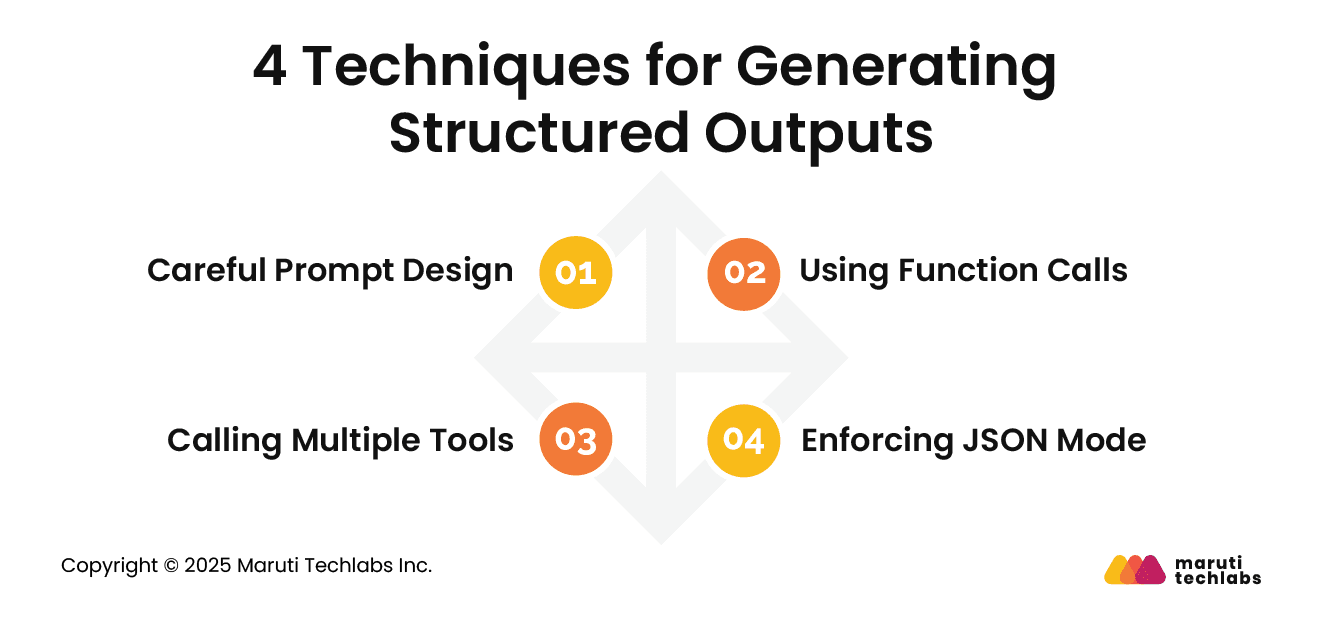

There are various ways to enable LLMs to return structured outputs. Each method has its own strengths and limitations:

You can guide the model by writing prompts that request a specific format, such as JSON or XML. Adding clear instructions (“Give the answer in JSON”) or showing examples makes the output more likely to match what you want. This method works with any LLM, but it’s not always consistent: the model may still return unstructured text, or wrap the JSON in extra prose, at times.
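A minimal sketch of the prompt-based approach, assuming a name-and-date extraction task. The `build_prompt` and `parse_reply` helpers and the sample replies are hypothetical; no model is called here. The parser is deliberately defensive because nothing forces the model to follow the instruction.

```python
import json
import re


def build_prompt(text: str) -> str:
    """Ask for JSON only and show one example of the expected shape."""
    example = {"name": "Ada Lovelace", "date": "1843-09-01"}
    return (
        "Extract the person's name and the date from the text.\n"
        "Reply with a single JSON object and nothing else.\n"
        f"Example: {json.dumps(example)}\n"
        f"Text: {text}"
    )


def parse_reply(reply: str):
    """Return the JSON object embedded in the reply, or None if there is none."""
    # Greedy match: spans from the first '{' to the last '}' in the reply.
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        return None
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError:
        return None


# Hypothetical model replies: one wrapped in chatter, one with no JSON at all.
chatty = 'Sure! Here is the JSON: {"name": "Grace Hopper", "date": "1952-05-01"} Hope that helps.'
refusal = "I could not find a date in the text."

print(parse_reply(chatty))
print(parse_reply(refusal))
```

Returning `None` instead of raising leaves the caller free to retry, fall back, or log the failure, which is the extra handling this technique tends to require.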

Function calling lets the model generate specific function calls based on your input. By providing function names and descriptions, the model can select the right one and return structured data. This is highly effective for targeted tasks, but it requires models that support this feature. It can also increase token usage, which may reduce efficiency in some cases.

Tool calling takes function calling a step further by allowing the model to utilize multiple tools simultaneously. This is particularly helpful when a task is complex and requires more than one function to be solved. It makes the system more flexible and powerful, but also requires specialized support and can lead to higher token usage.
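The sketch below shows the receiving side of function and tool calling: tools are described with a name, a description, and JSON Schema parameters, and the application dispatches whatever calls the model returns. The tool definitions follow the style used by OpenAI's Chat Completions API; the two tools, the `REGISTRY`, and the simplified flat shape of `calls` are assumptions for illustration, and no model is called.

```python
import json

# Tool definitions the model would see (hypothetical tools).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "convert_currency",
            "description": "Convert an amount using a given exchange rate.",
            "parameters": {
                "type": "object",
                "properties": {"amount": {"type": "number"}, "rate": {"type": "number"}},
                "required": ["amount", "rate"],
            },
        },
    },
]


def get_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}  # stub data


def convert_currency(amount: float, rate: float) -> dict:
    return {"converted": round(amount * rate, 2)}


# Maps tool names to the local functions that implement them.
REGISTRY = {"get_order_status": get_order_status, "convert_currency": convert_currency}


def dispatch(tool_calls: list) -> list:
    """Run each requested tool and collect the results in order."""
    results = []
    for call in tool_calls:
        func = REGISTRY.get(call["name"])
        if func is None:
            results.append({"error": f"unknown tool {call['name']}"})
            continue
        args = json.loads(call["arguments"])  # arguments arrive as a JSON string
        results.append(func(**args))
    return results


# Hypothetical model output requesting two tools in one turn (simplified shape).
calls = [
    {"name": "get_order_status", "arguments": '{"order_id": "A-42"}'},
    {"name": "convert_currency", "arguments": '{"amount": 10, "rate": 0.5}'},
]
print(dispatch(calls))
```

The tool descriptions are sent with every request, which is where the extra token usage mentioned above comes from.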

Some providers, such as OpenAI, offer a JSON mode that ensures the output is valid JSON. This is useful for APIs and data workflows that need parseable output, but on its own it does not enforce a particular schema, so field names and types still need checking. It also only works in specific setups and can reduce flexibility for open-ended tasks.
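As a rough illustration of the difference, these are the two `response_format` values one would pass to OpenAI's Chat Completions API: the first asks for JSON mode, the second for schema-constrained structured outputs with strict mode. The calendar-event schema and the `strict_problems` helper are hypothetical, and the helper checks only two of the strict-mode requirements on a top-level object; it is a sketch, not a full validator.

```python
# Hypothetical schema for a calendar event.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "date": {"type": "string"},
        "attendees": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "date", "attendees"],
    "additionalProperties": False,
}

# JSON mode: the reply is valid JSON, but its shape is not constrained.
json_mode = {"type": "json_object"}

# Structured outputs: the reply is constrained to EVENT_SCHEMA.
structured = {
    "type": "json_schema",
    "json_schema": {"name": "calendar_event", "strict": True, "schema": EVENT_SCHEMA},
}


def strict_problems(schema: dict) -> list:
    """List violations of two strict-mode rules on a top-level object schema."""
    problems = []
    if schema.get("additionalProperties") is not False:
        problems.append("additionalProperties must be false")
    missing = set(schema.get("properties", {})) - set(schema.get("required", []))
    if missing:
        problems.append(f"not listed in required: {sorted(missing)}")
    return problems


print(strict_problems(EVENT_SCHEMA))
```

Running a check like this before sending a request can catch schema mistakes locally instead of as an API error.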

While structured outputs bring a lot of value, they also come with some challenges you’ll need to keep in mind:

Creating schemas can be challenging, especially when working with nested or complex structures. For example, pulling structured information from legal documents often requires deeply nested schemas that take time to plan and test.

LLMs cap the number of tokens they can generate in a single response (16,384 output tokens in many cases). If the output is too long, such as an extensive list of transaction records, the response may be cut off mid-structure, leaving you with incomplete and often unparseable data.
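One way to guard against truncated output, sketched under the assumption that the API reports why generation stopped (OpenAI's Chat Completions API uses `finish_reason == "length"` for a token-limit stop). The `parse_complete` and `chunks` helpers are hypothetical names; the second shows the usual workaround of splitting the input into batches so each response stays small.

```python
import json


def parse_complete(content: str, finish_reason: str) -> dict:
    """Parse the reply only if the model finished on its own."""
    if finish_reason == "length":
        # The model ran out of output tokens, so the JSON is probably cut off.
        raise RuntimeError("output hit the token limit; JSON is likely truncated")
    return json.loads(content)


def chunks(items: list, size: int) -> list:
    """Split items into batches of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


# Hypothetical truncated reply: the closing brackets never arrived.
truncated = '{"transactions": [{"id": 1, "amount": 20.0}, {"id": 2, "amo'

try:
    parse_complete(truncated, "length")
except RuntimeError as err:
    print(err)

# Process 5 records in batches of 2 rather than in a single request.
print(chunks([1, 2, 3, 4, 5], 2))
```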

Structured formats can make responses more reliable, but they can sometimes reduce the quality of the model’s reasoning. In other words, while you get fewer hallucinations, the model may not reason as deeply as it would in free-form text.

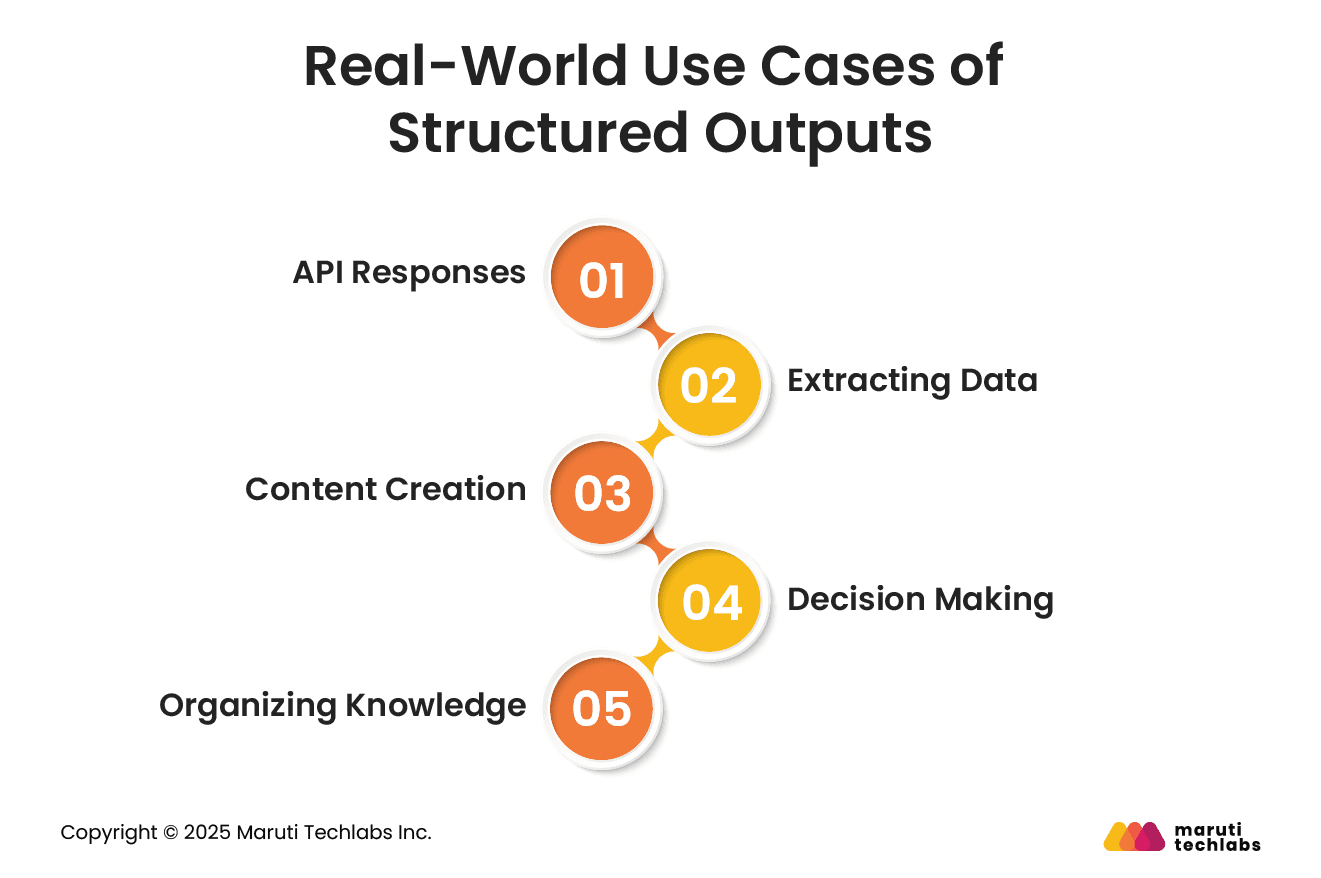

Structured outputs make LLMs far more helpful in real systems by ensuring their data is reliable and reusable without extra cleanup. Here are some common applications:

LLMs can turn natural language queries into consistent API outputs. This means developers can rely on predictable responses that slot right into their systems.

When working with unstructured text, LLMs can be guided to extract only the fields you need, such as names, dates, or transaction details, without requiring manual parsing.

Structured formats help generate clear and uniform content, such as product descriptions, reports, or marketing snippets, making them ready for direct use across platforms.

LLMs can output their analysis in structured formats, such as tables or JSON, that can be directly fed into dashboards or other tools to support business decisions.

From large document sets, LLMs can extract, group, and format important information in a way that makes it easy to manage and retrieve later.
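As a small illustration of the analysis use case above, structured JSON can be turned into rows for a dashboard or spreadsheet with no custom text parsing. The `records_to_csv` helper, the `records` key, and the sample reply are assumptions for this sketch.

```python
import csv
import io
import json


def records_to_csv(raw: str, columns: list) -> str:
    """Convert a JSON reply of the form {"records": [...]} into CSV text."""
    records = json.loads(raw)["records"]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for record in records:
        # Keep only the requested columns; fill gaps with an empty cell.
        writer.writerow({col: record.get(col, "") for col in columns})
    return buffer.getvalue()


# Hypothetical structured reply from a model.
reply = (
    '{"records": ['
    '{"region": "EU", "revenue": 1200}, '
    '{"region": "US", "revenue": 3400, "note": "preliminary"}]}'
)
print(records_to_csv(reply, ["region", "revenue"]))
```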

Structured outputs provide a powerful way to enhance the reliability and predictability of LLMs. Instead of producing free-flowing text, they ensure results follow a clear format that machines can understand. This makes it easier to manage complex workflows, extract valuable insights, and connect AI with real business systems.

Whether you’re working with models from OpenAI or with Gemini, structured outputs help keep data consistent across applications. They serve as the bridge between the creative aspects of language models and the structured, rule-based requirements of production environments. As LLMs become increasingly advanced, these techniques will become even more critical.

At Maruti Techlabs, we help teams design and implement structured outputs as part of their Generative AI solutions. If you’re looking to make your AI systems more predictable and production-ready, we’d be glad to help.

Structured outputs in machine learning are results that follow a fixed format, such as tables, JSON, or labeled data. Unlike plain text, these outputs are predictable, machine-readable, and easier to use in applications such as APIs, data pipelines, or decision-making systems, without requiring heavy cleanup or reformatting.

In LLMs, structured outputs are responses that stick to a defined format, such as JSON or Markdown. This makes the model’s answers reliable and predictable, enabling developers to use them directly in software systems, rather than having to fix messy, free-form text that may cause errors or inconsistencies in applications.

In LangChain, structured outputs refer to the model’s responses following a specific schema that you define, such as JSON fields or key-value pairs. LangChain ensures that the LLM produces predictable, machine-readable results, making it easier to connect the model’s output with other tools, applications, or workflows in real-world use cases.

In OpenAI, structured outputs are model responses that follow a fixed structure, such as JSON objects. OpenAI introduced features such as JSON mode and function calling to make this possible. These help developers get consistent, schema-based results, reducing errors and making outputs directly usable in production systems.