Managing Machine Learning Models Made Easy with Registries

Enterprises increasingly rely on ML models to tackle everyday business challenges, such as forecasting demand and tailoring customer experiences. But as teams create more models, keeping track of each one gets harder. Without a proper model registry, important information about each model, such as its version, performance metrics, or creator, can be lost. This leads to duplicate work, confusion, and slower deployments.

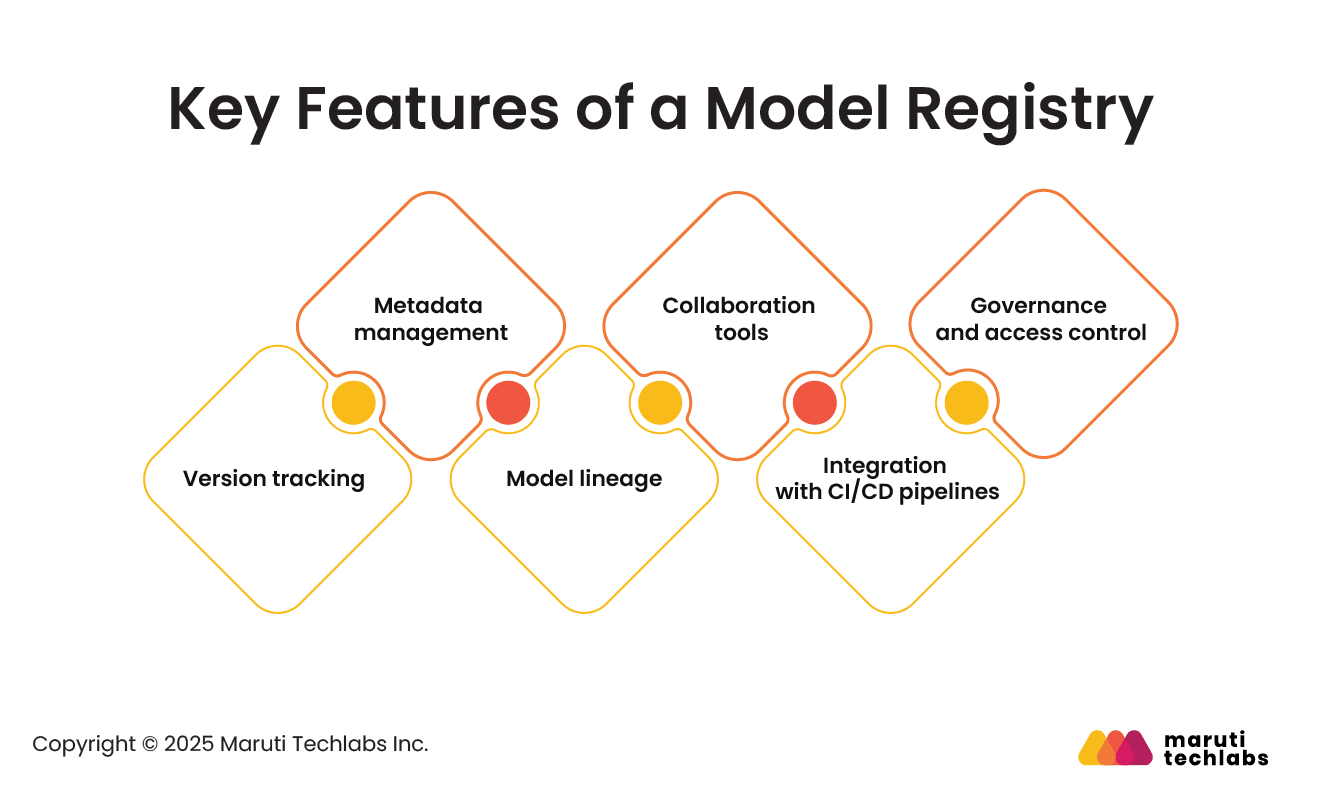

A model registry, or ML model registry, is critical for solving this problem. It is a central system that stores all models, tracks versions, records metadata, and maintains model lineage. It connects with CI/CD pipelines and helps data science teams collaborate more effectively. With a model registry, teams can easily reproduce results, enforce governance rules, and reuse existing models rather than creating duplicates.

At scale, managing ML models becomes difficult. Many organizations struggle with unclear model ownership, difficulty tracking updates, and limited visibility into model performance. Without a proper registry, deployments slow down and operational risk increases.

This blog covers what a model registry is, why it is crucial in MLOps, the challenges of managing one at scale, best practices for enterprises, and some popular tools that make model registry management easier and more efficient.

A model registry is a central repository for storing and managing machine learning models. It tracks models from training through deployment or retirement. An ML model registry is like a version control system for models. It helps teams see which version works best and which one is ready to use.
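To make the version-control analogy concrete, here is a minimal in-memory sketch. The class names, stage names, and metric values are hypothetical and not taken from any particular tool; a real registry would persist its records to a database or object store.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str
    version: int
    stage: str = "staging"  # hypothetical stage name
    metrics: dict = field(default_factory=dict)


class ModelRegistry:
    """Toy in-memory registry used only for illustration."""

    def __init__(self):
        self._models = {}  # model name -> list of ModelVersion, oldest first

    def register(self, name, metrics):
        # Each new registration gets the next sequential version number.
        versions = self._models.setdefault(name, [])
        entry = ModelVersion(name=name, version=len(versions) + 1, metrics=dict(metrics))
        versions.append(entry)
        return entry

    def best(self, name, metric):
        # Return the version with the highest value for the given metric.
        return max(self._models[name], key=lambda v: v.metrics[metric])

    def promote(self, name, version, stage="production"):
        self._models[name][version - 1].stage = stage

    def in_stage(self, name, stage):
        return [v.version for v in self._models[name] if v.stage == stage]


registry = ModelRegistry()
registry.register("demand_forecast", {"accuracy": 0.81})
registry.register("demand_forecast", {"accuracy": 0.86})
best = registry.best("demand_forecast", "accuracy")
registry.promote("demand_forecast", best.version)
```

Here the second version scores higher, so it is the one promoted, while the first stays in its initial stage.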

A good model registry usually includes centralized model storage, version tracking, metadata records, and model lineage.

In MLOps, a model registry serves as a centralized hub that links experiments to models deployed in real-world applications. Teams no longer need to search through files or guess which version to use, as they can easily view all models, their history, and their current stage. This streamlines workflows, reduces errors, and facilitates the smooth transition of models from testing to production.

The registry also provides insights into model performance, highlighting which models are performing well, which require adjustments, and which are ready for deployment. This visibility is especially valuable in environments with multiple models and large teams, ensuring efficient collaboration and effective model management.

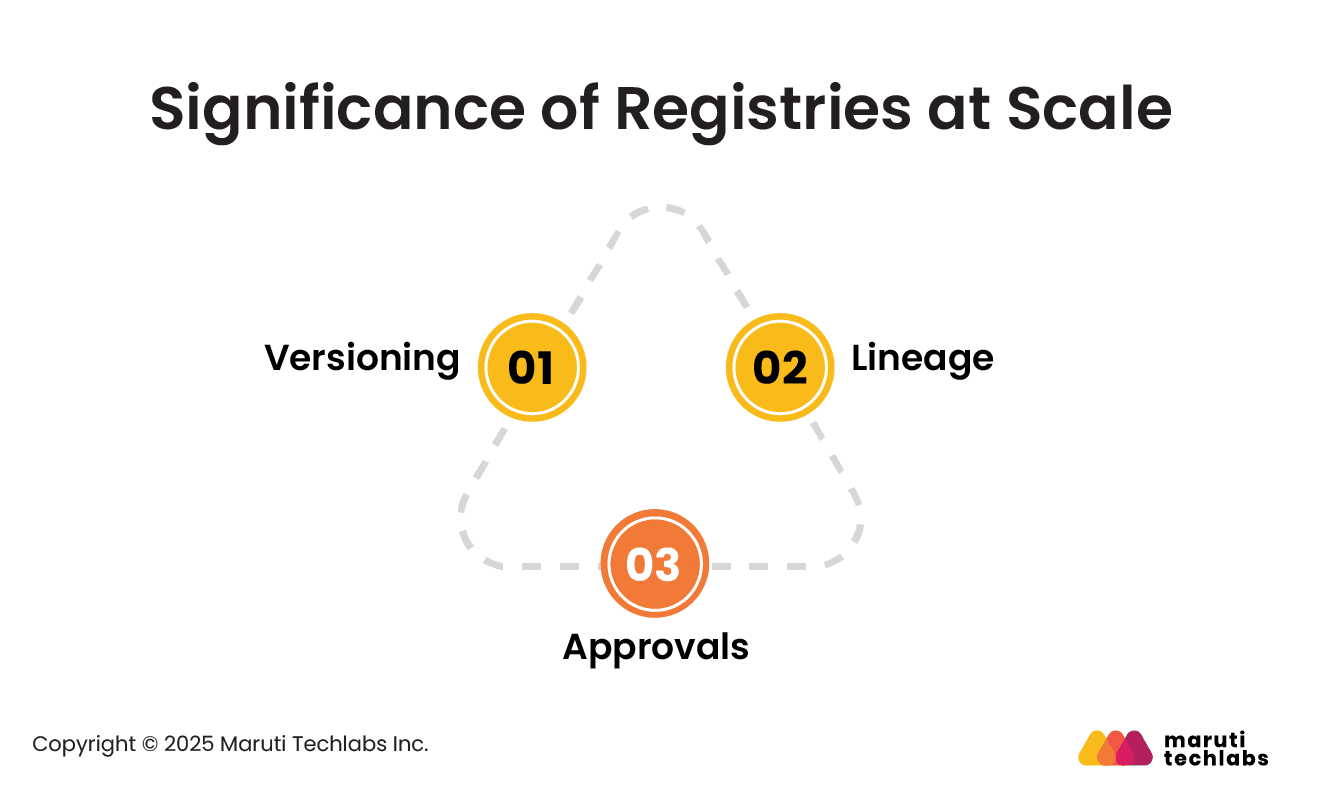

Managing machine learning models at scale can be complex. As teams create more models and work with larger datasets, tracking changes, understanding model histories, and ensuring the correct versions are used in production becomes challenging. A model registry addresses these issues by providing a central place to manage models, streamline collaboration, and reduce errors.

Versioning tracks all model versions and the data used to train them. It allows teams to see changes, revert to earlier versions if needed, repeat experiments, and ensure models remain accurate over time.
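One way to tie a model version to its training data is to store a content hash of both. The sketch below assumes the model and dataset are available as bytes; the function names and sample values are illustrative only.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Short content hash used to tie a model version to its training data."""
    return hashlib.sha256(data).hexdigest()[:12]


history = []  # one dict per registered version, oldest first


def register_version(model_bytes: bytes, training_data: bytes) -> dict:
    record = {
        "version": len(history) + 1,
        "model_hash": fingerprint(model_bytes),
        "data_hash": fingerprint(training_data),
    }
    history.append(record)
    return record


def rollback(to_version: int) -> dict:
    """Return the record for an earlier version so it can be redeployed."""
    return history[to_version - 1]


v1 = register_version(b"weights-v1", b"sales-2023.csv contents")
v2 = register_version(b"weights-v2", b"sales-2024.csv contents")
same_data = v1["data_hash"] == v2["data_hash"]  # False: the training data changed
previous = rollback(1)
```

Comparing the data hashes shows at a glance whether two versions were trained on the same data, which helps when repeating an experiment.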

Lineage records where data came from and how a model was built, including all steps, transformations, and tools used. This makes it easier to troubleshoot, compare versions, and provide explanations for regulatory or business needs.
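Lineage can be pictured as a chain of records, each pointing at the artifacts it was built from. The following is a rough sketch with made-up artifact and step names, not the schema of any specific registry.

```python
from dataclasses import dataclass, field


@dataclass
class LineageRecord:
    artifact: str      # e.g. a dataset, feature table, or model name
    produced_by: str   # the step or tool that created it
    inputs: list = field(default_factory=list)  # names of upstream artifacts


def trace(artifact, records):
    """Walk upstream from an artifact and list everything it depends on."""
    by_name = {r.artifact: r for r in records}
    ordered, stack = [], [artifact]
    while stack:
        name = stack.pop()
        if name in ordered:
            continue  # already visited
        ordered.append(name)
        record = by_name.get(name)
        if record:
            stack.extend(record.inputs)
    return ordered


records = [
    LineageRecord("raw_orders", "warehouse export"),
    LineageRecord("features_v3", "feature pipeline", ["raw_orders"]),
    LineageRecord("churn_model_v2", "training job", ["features_v3"]),
]
upstream = trace("churn_model_v2", records)
```

Tracing from the model back to the raw data is what lets a team answer "where did this prediction come from?" during troubleshooting or an audit.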

Approvals ensure models meet quality standards before deployment. Teams can review models at different stages, reducing errors and building confidence that production models are reliable and safe.
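An approval step can be sketched as a gate on stage transitions. The stage names, the allowed transitions, and the two-approver rule below are assumptions chosen for illustration; actual policies vary by organization.

```python
# Hypothetical stages and the moves permitted between them.
ALLOWED = {
    "development": {"staging"},
    "staging": {"production", "development"},
    "production": {"archived"},
    "archived": set(),
}


def promote(model, target, approvals, required=2):
    """Move a model to a new stage if the transition is allowed and approved."""
    current = model["stage"]
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current} to {target}")
    # Only the move into production requires sign-off in this sketch.
    if target == "production" and len(set(approvals)) < required:
        raise PermissionError(f"{required} distinct approvers required")
    model["stage"] = target
    return model


model = {"name": "fraud_detector", "version": 4, "stage": "staging"}
try:
    promote(model, "production", approvals=["alice"])
except PermissionError:
    blocked = True  # a single approver is not enough
else:
    blocked = False
promote(model, "production", approvals=["alice", "bob"])
```

The first attempt is rejected because only one person approved; the second succeeds once two distinct reviewers have signed off.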

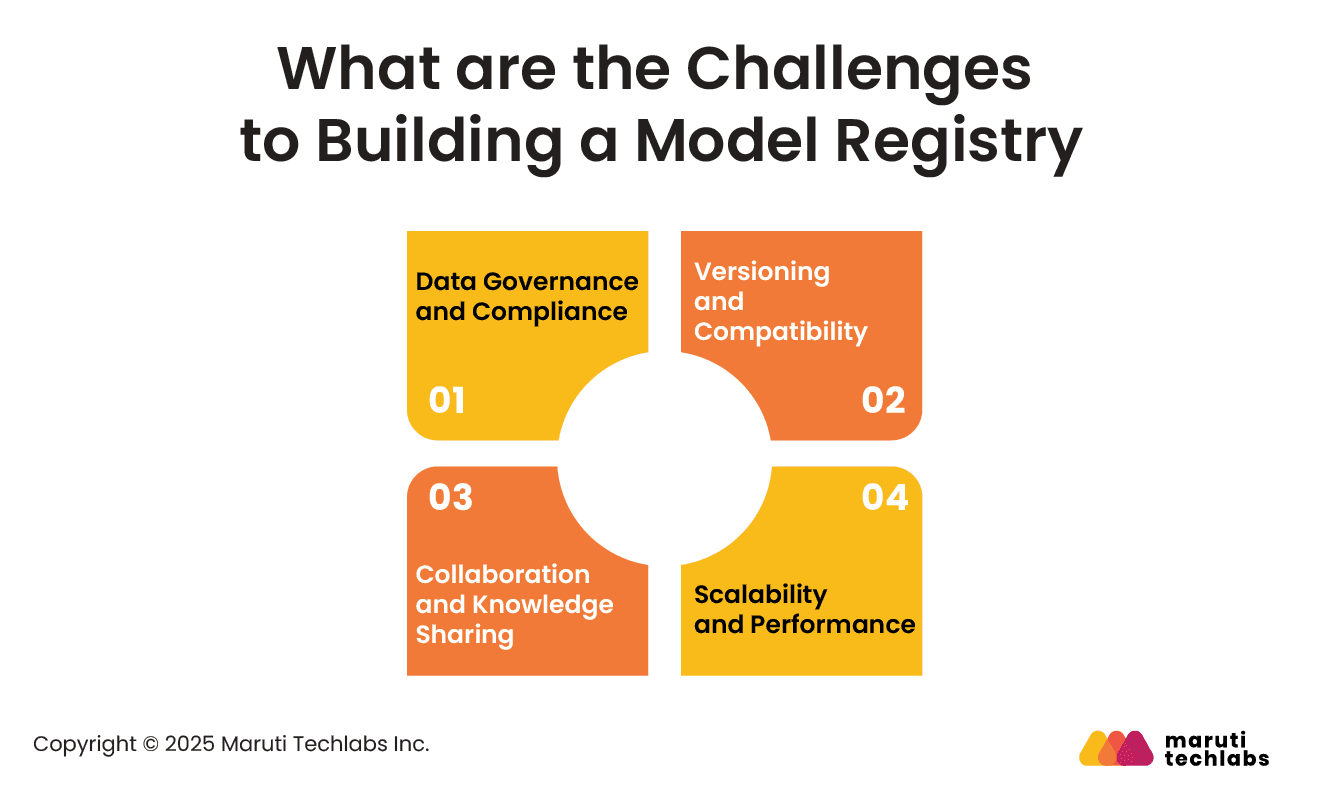

A model registry is valuable, but teams should be aware of several challenges when implementing one.

One of the most complex parts is keeping models and data secure. Teams must control access to models and ensure compliance with privacy regulations. Without proper controls, sensitive information may be exposed, and regulatory requirements could be violated.

It can also be challenging to keep track of different model versions and make sure they work well together. Models often rely on certain tools, libraries, or data formats, and changes in any of these can cause problems when updating or using the models.

As the number of models and users grows, the registry must handle the increased load without slowing down. If performance drops, it can become hard for teams to use the registry efficiently, delaying projects.

Getting multiple teams or departments to work together smoothly is another challenge. Sharing knowledge, reviewing models, and coordinating updates across teams requires good processes and clear communication.

Even with these challenges, many tools are available to build and manage model registries efficiently.

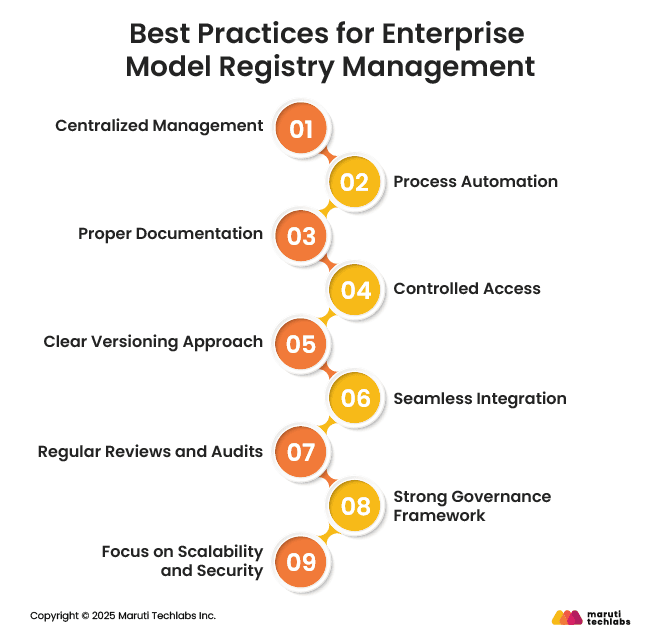

Managing a model registry effectively helps teams stay organized and keep their machine learning work on track. Here are some simple practices that can make a big difference.

Keep all your models in one central place. It helps everyone find what they need easily and avoids duplicate or outdated versions.

Automate steps like model registration, testing, and deployment. This saves time and reduces mistakes that can happen when done manually.

Write down key details about each model, such as what it does, how it performs, and who worked on it. Good documentation helps others understand and use the model later.

Make sure only the right people can view or update models. Setting clear access rules helps protect data and keeps things secure.
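Access rules are often expressed as a mapping from roles to permitted actions. The roles and actions below are hypothetical; a production setup would normally rely on the identity and access controls of the chosen platform.

```python
# Hypothetical roles and the registry actions each one may perform.
PERMISSIONS = {
    "viewer": {"read"},
    "data_scientist": {"read", "register"},
    "ml_admin": {"read", "register", "promote", "delete"},
}


def can(role, action):
    """Return True if the role is allowed to perform the action."""
    return action in PERMISSIONS.get(role, set())


viewer_can_delete = can("viewer", "delete")
admin_can_promote = can("ml_admin", "promote")
unknown_role_can_read = can("contractor", "read")  # unknown roles get no access
```

Defaulting unknown roles to an empty permission set means access is denied unless it has been granted explicitly.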

Use a consistent, straightforward approach to track model versions. This makes it easy to see what changed, compare results, or roll back if needed.

Connect the registry with your other MLOps tools so everything works smoothly together, from training to deployment.

Review models regularly to remove old or unused ones. This keeps the registry clean and ensures everything meets company standards.
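A periodic review can start from a simple report of models that have not been used recently. This sketch assumes each entry records a stage and a last-used date; the field names and the 180-day window are illustrative choices.

```python
from datetime import date, timedelta


def find_stale(models, today, max_idle_days=180):
    """Return names of non-production models not used within the idle window."""
    cutoff = today - timedelta(days=max_idle_days)
    return [
        m["name"]
        for m in models
        if m["stage"] != "production" and m["last_used"] < cutoff
    ]


models = [
    {"name": "churn_v1", "stage": "archived", "last_used": date(2023, 1, 10)},
    {"name": "churn_v2", "stage": "production", "last_used": date(2023, 2, 1)},
    {"name": "churn_v3", "stage": "staging", "last_used": date(2024, 5, 20)},
]
stale = find_stale(models, today=date(2024, 6, 1))
```

Production models are excluded on purpose, so the report only flags candidates that are safe to review for removal.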

Define clear approval steps before models go live. This keeps the process transparent and compliant with governance rules.

Pick a system that can grow with your needs and has strong security to protect your data and models.

To effectively manage models and streamline MLOps workflows, organizations often rely on specialized model registry tools. Here are some popular model registry tools to consider.

MLflow is one of the most widely used tools for managing ML models. It offers a clean interface and API support for registering, tracking, and deploying models. It also helps with version control, experiment tracking, and workflow automation, making it a reliable choice for most teams.

Amazon SageMaker includes a built-in model registry as part of its cloud platform. It helps teams catalog models, manage versions, and connect easily with CI/CD pipelines. It’s great for organizations already using AWS and looking to move models smoothly from training to production.

Neptune.AI provides a flexible, easy-to-use model registry that prioritizes collaboration. Teams can track experiments, store metadata, and leave comments or tags to make teamwork easier. It integrates well with popular ML frameworks but may need some setup effort for large projects.

Weights & Biases offers experiment tracking, model versioning, and strong visualization tools to monitor performance. It’s simple to use and has a large community for support. Many teams use it for its shared dashboards and reporting features.

Verta.ai provides a complete system for managing models from development to deployment. It helps track versions, automate CI/CD workflows, and manage approvals. It also keeps audit logs and access controls to ensure models are secure and compliant.

Managing model registries at scale is not just about storing models; it’s about keeping everything organized, traceable, and ready for production. A well-managed registry helps teams work together, maintain version control, and ensure compliance as models move from testing to deployment.

Organizations investing in custom AI development can design model registries tailored to their workflows, enabling smoother collaboration, faster deployment, and greater trust in AI solutions.

The key takeaway is that as enterprises build more machine learning models, having a strong model registry becomes essential for efficiency, collaboration, and trust. It’s what keeps the entire MLOps process running smoothly.

If your organization is looking to improve how it manages ML models or wants to set up a reliable MLOps pipeline, our team at Maruti Techlabs can help. Explore our AI and ML development services or contact us to learn how we can support your journey toward scalable, well-managed machine learning operations.