Fine-Tuning vs Prompt Engineering: A Guide to Better LLM Performance

Leading AI models such as GPT-4, Claude, and PaLM 2 have made advanced language technology more accessible than ever. These models excel at generating content, answering queries, and interpreting natural language with high accuracy. However, while zero-shot use (plugging in a prompt and taking the answer as-is) works well in general scenarios, it often falls short for business needs that demand domain accuracy, consistency, and compliance.

That’s why organizations are increasingly turning to customization strategies to unlock real value from LLMs. Two of the most effective methods are fine-tuning and prompt engineering. Fine-tuning an LLM involves further training the model on task- or domain-specific data to improve relevance and accuracy. Prompt engineering, on the other hand, shapes the input to guide the output without any additional training.

In this blog, we explore how fine-tuning and prompt engineering transform generic models into powerful business assets, the differences between the two approaches, and why zero-shot use isn’t enough for enterprise-grade AI.

Fine-tuning is the process of taking a pre-trained AI model and adapting it to a specific task or domain. Instead of starting from scratch, you build on what the model already knows, making it more accurate, relevant, and aligned with your business needs while saving time and resources.

Prompt engineering is the art of writing clear, well-structured inputs to guide an AI model’s output. By choosing the right words and context, you help the model understand your intent, reduce errors, and produce more useful, relevant, and accurate responses without retraining the model itself.
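As a minimal sketch of this idea, the snippet below assembles a prompt from labeled sections (task, context, instructions, output format) instead of a bare request. The `build_prompt` helper and the release-notes text are hypothetical illustrations, not part of any specific tool; the resulting string would be sent to whichever model you use.

```python
def build_prompt(task: str, context: str, instructions: list[str], output_format: str) -> str:
    """Assemble a prompt from labeled sections: task, context, instructions, output format."""
    lines = [f"Task: {task}", "", "Context:", context.strip(), "", "Instructions:"]
    lines += [f"- {rule}" for rule in instructions]
    lines += ["", f"Output format: {output_format}"]
    return "\n".join(lines)


# A vague request leaves the model to guess scope, audience, and length.
vague_prompt = "Summarize this"

# The structured version states each of those explicitly (hypothetical example content).
structured_prompt = build_prompt(
    task="Summarize the release notes below for a non-technical audience.",
    context="Version 2.1 adds single sign-on, fixes a CSV export bug, and drops support for Internet Explorer 11.",
    instructions=["Use plain language.", "Do not mention internal ticket numbers."],
    output_format="Exactly three bullet points.",
)
print(structured_prompt)
```

Keeping the sections separate makes it easy to refine one part (say, the output format) without rewriting the whole prompt.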

Fine-tuning and prompt engineering are both powerful ways to make AI models work better for specific needs. But they take very different approaches.

The table below highlights the key differences in simple terms.

| Aspect | Prompt Engineering | Fine-Tuning |
|---|---|---|
| Goal | Shapes AI outputs to be relevant and accurate for a given query. | Improves the model’s overall performance on specific tasks or domains. |
| How it works | You guide the AI by writing clear, detailed prompts with the proper context and instructions. | You train the AI on new, domain-specific data so it learns the patterns and context of that domain. |
| Control | You keep fine-grained control over each response by adjusting your prompts. | Once trained, the model applies the learned behavior by default, without needing detailed instructions in every prompt. |
| Resources needed | Minimal: often just time, creativity, and access to a generative AI tool. Many tools are free or low-cost. | Can demand substantial resources, including computing power, curated domain datasets, and advanced technical expertise. |
| Speed of implementation | Delivers results immediately, and prompts can be refined on the spot. | Training and testing the model can be time-consuming, often taking several days or even weeks. |
| Best for | Quick experiments, varied use cases, or situations that need flexibility. | Consistent, domain-specific outputs at scale for specialized applications. |
| Example | Adjusting the input text to guide the output, e.g., asking "Summarize this in three bullet points" instead of "Summarize this" to get a concise list. | Training the model on domain-specific data to improve a particular task, e.g., feeding it thousands of legal contracts so it can draft new ones in the correct legal format. |

Both techniques can work together: prompt engineering for flexibility, and fine-tuning for deep, domain-specific accuracy.

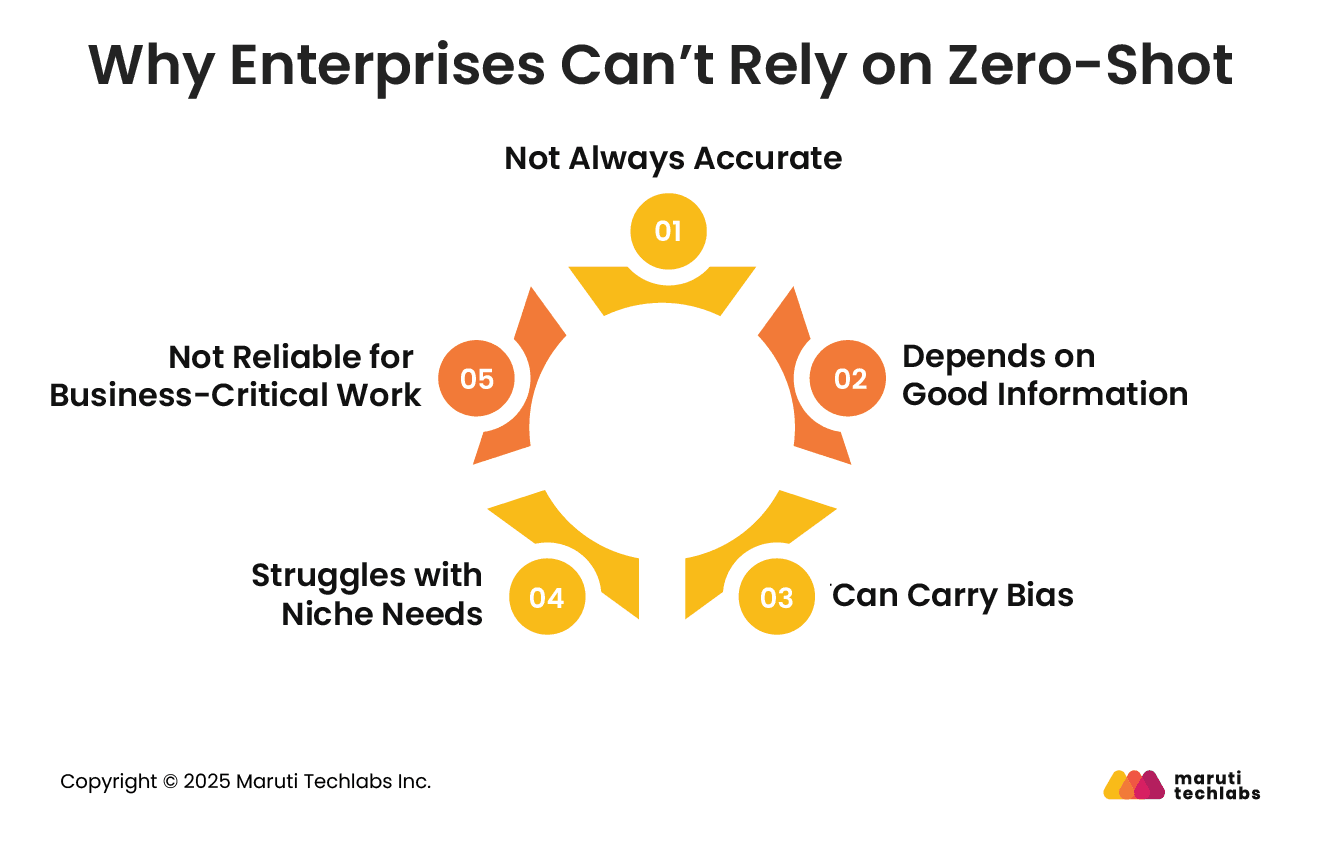

Zero-shot learning is when an AI model handles a task without any task-specific training or examples, relying only on what it learned during pre-training. While useful in certain scenarios, it can have significant limitations for critical business applications.

When a model hasn’t been trained for a specific task, its responses may be inaccurate, particularly for complex or highly technical work.

Poor or incomplete input leads to poor output: with no task-specific training or examples to fall back on, the quality of the results depends directly on the quality of the prompt.

The AI can pick up hidden biases from the data it was trained on, which can lead to unfair results.

Specialized industries like healthcare, law, and finance need precise, compliant answers, but zero-shot use often produces generic responses instead.

When accuracy and trust are critical, zero-shot is insufficient. Companies often rely on fine-tuning or prompt engineering to achieve results tailored to their needs.
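One lightweight prompt-engineering step beyond pure zero-shot use is few-shot prompting: stating the allowed answers and showing a few labeled examples inside the prompt. The sketch below contrasts the two for a support-ticket classifier. The category names and example tickets are hypothetical stand-ins for a company’s own taxonomy, and the model call itself is not shown.

```python
CATEGORIES = ["billing", "outage", "account-access"]  # hypothetical company-specific labels


def zero_shot_prompt(ticket: str) -> str:
    # No categories and no examples: the model has to guess what "category" means here.
    return f"Which category does this support ticket belong to?\n\nTicket: {ticket}\nCategory:"


def few_shot_prompt(ticket: str, examples: list[tuple[str, str]],
                    categories: list[str] = CATEGORIES) -> str:
    # State the allowed labels, then show labeled examples before the real ticket.
    lines = [f"Classify each support ticket into exactly one of: {', '.join(categories)}.", ""]
    for text, label in examples:
        if label not in categories:
            raise ValueError(f"example label {label!r} is not an allowed category")
        lines += [f"Ticket: {text}", f"Category: {label}", ""]
    lines += [f"Ticket: {ticket}", "Category:"]
    return "\n".join(lines)


labeled_tickets = [
    ("I was charged twice this month.", "billing"),
    ("The dashboard won't load for anyone on our team.", "outage"),
]
print(zero_shot_prompt("I can't reset my password."))
print(few_shot_prompt("I can't reset my password.", labeled_tickets))
```

The few-shot version constrains the answer space to the business’s own labels, which is exactly the kind of domain context a bare zero-shot request lacks.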

In short, zero-shot can be a quick start, but it’s no substitute for customization. To achieve dependable, business-ready results, enterprises require AI that’s tailored to their specific needs, rather than relying on general knowledge.

Customizing large language models through fine-tuning or prompt engineering can make them far more effective for business than using them in their default form.

When fine-tuning is combined with prompt engineering, businesses get AI that is accurate, consistent, and aligned with their goals, transforming generic models into valuable business tools.

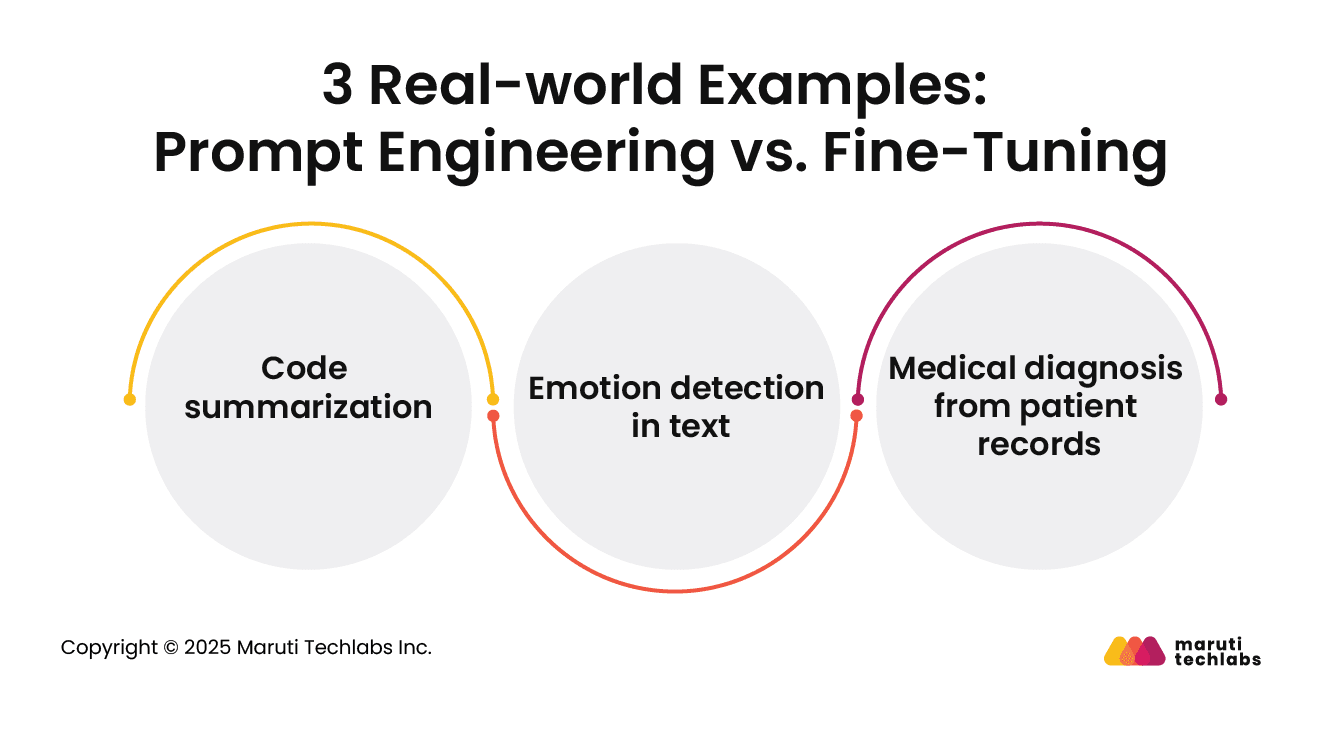

To better understand how each approach works, let’s look at a few practical examples and see where prompt engineering or fine-tuning makes more sense.

For code summarization, fine-tuning trains the model on large sets of code paired with matching summaries, helping it learn programming patterns. Prompt engineering can simply guide the model with clear instructions such as “Summarize the key functions in this code.” In many cases, prompt engineering works well here because it quickly taps into the model’s existing knowledge.

For emotion detection, fine-tuning trains the model on diverse, labeled emotion datasets so it can pick up subtle cues in language. Prompt engineering uses targeted instructions that tell the model to look for keywords or phrases that signal emotion. Fine-tuning is usually the better fit for this task, since emotions can be complex and vary by context.
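The prompt-engineering route for emotion detection can be sketched as a prompt that restricts the label set and asks the model to quote its cue phrases, plus a parser that checks the reply. This is a minimal illustration under stated assumptions: the label set, the JSON reply shape, and the sample reply are hypothetical, and the reply is hard-coded in place of a real model call.

```python
import json

ALLOWED = {"joy", "anger", "sadness", "fear", "surprise", "neutral"}  # hypothetical label set


def emotion_prompt(text: str) -> str:
    # Targeted instructions: one label from a fixed set, plus the phrases that signal it.
    return (
        "Identify the dominant emotion in the text below.\n"
        f"Choose exactly one of: {', '.join(sorted(ALLOWED))}.\n"
        "Quote the words or phrases that signal it.\n"
        'Reply only with JSON: {"emotion": "<label>", "cues": ["<phrase>", ...]}\n\n'
        f"Text: {text}"
    )


def parse_emotion_reply(reply: str, text: str) -> dict:
    data = json.loads(reply)
    if data.get("emotion") not in ALLOWED:
        raise ValueError(f"unexpected label: {data.get('emotion')!r}")
    # Keep only cue phrases that actually occur in the input (case-insensitive).
    cues = [c for c in data.get("cues", []) if c.lower() in text.lower()]
    return {"emotion": data["emotion"], "cues": cues}


text = "Honestly, I was thrilled the refund came through so fast."
reply = '{"emotion": "joy", "cues": ["thrilled", "so fast", "delighted"]}'  # stand-in model reply
print(parse_emotion_reply(reply, text))  # {'emotion': 'joy', 'cues': ['thrilled', 'so fast']}
```

The validation step drops "delighted" because it never appears in the text. Checks like this catch some prompt-only mistakes, but they cannot supply the contextual judgment that a model fine-tuned on labeled emotion data can learn.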

For medical diagnosis support, fine-tuning on medical data helps the model understand terminology and patterns in patient histories. Prompt engineering can structure questions that lead toward possible diagnoses, but fine-tuning is preferred because of the depth and accuracy medical decisions require.

Before choosing between fine-tuning and prompting, it’s essential to understand the limitations and risks that come with each. Knowing these can help you set the right expectations.

By keeping these points in mind, you can better plan your approach and reduce risks.

For real-world projects, numerous tools are available to simplify the adoption of prompt engineering or fine-tuning. These platforms support both approaches, enabling you to start with simple experiments and scale to full AI systems as your requirements evolve.

OpenAI’s GPT-4 Turbo is a popular choice for prompting, and OpenAI’s platform also supports fine-tuning. Its models can be accessed through the API, the Playground, or ChatGPT Team, making experimentation easy. Fine-tuning is offered for selected models, such as GPT-3.5 Turbo (access for GPT-4-class models has been more limited and has changed over time), allowing teams to adjust a model for specific tasks, tone, or domain knowledge without starting from scratch.

Hugging Face Transformers is one of the most widely used open-source libraries for fine-tuning LLMs. It supports multiple architectures like BERT, GPT, and LLaMA, giving flexibility for research and production. With pre-trained models and datasets readily available, teams can fine-tune models for industry-specific needs while keeping control over deployment.

LangChain focuses on building applications powered by large language models, making it a good fit for combining prompting with fine-tuned models. It helps developers create context-aware workflows, connect LLMs with external data sources, and structure multi-step reasoning. LangChain does not train models itself, but a fine-tuned model can be plugged in underneath it to keep responses accurate and aligned with business goals.

LangGraph builds on LangChain, adding graph-based structure, visualization, and control over multi-step LLM workflows. It’s helpful when testing and iterating on prompts or on fine-tuned models, as you can see exactly how data flows between steps. This makes it easier to identify bottlenecks, refine specific components, and improve the overall output.

Anthropic’s models, like Claude, focus on safe and reliable AI outputs. Prompting is their primary strength, but fine-tuning is also available in more limited form for enterprise needs. Their strong guardrails for ethical AI make them suitable for industries where safety and compliance are critical, while still allowing task-specific fine-tuning.

These tools give you the flexibility to start with simple prompts and, when ready, move into fine-tuning LLMs for deeper customization.

Both prompting and fine-tuning play an essential role in getting the most out of generative AI. Prompting lets you get quick results without much setup, while fine-tuning gives you deeper customization for specialized needs. Using them together ensures you get accuracy, efficiency, and adaptability, all while reducing the risk of poor outputs.

The right balance between the two can boost ROI by helping AI work precisely the way your business needs. With fine-tuned models, you handle unique tasks better, and with strong prompting strategies, you save time and resources.

At Maruti Techlabs, we help you choose the right mix of these strategies so your AI solutions deliver better results with fewer risks. Explore our Generative AI services to see how we can support your goals, or contact us today to discuss your specific needs.

Start by using our AI Readiness Calculator to assess how prepared your business is to implement and scale AI effectively.

Fine-tuning an LLM involves training it with your data to learn your specific tone, style, or domain. You collect quality examples, prepare them in the proper format, and use the model’s fine-tuning API or tools. This helps improve accuracy for your unique use case.
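As a minimal sketch of the "prepare them in the proper format" step, the snippet below turns (input, ideal answer) pairs into JSON Lines, one training example per line, using the chat-style `messages` layout that OpenAI documents for fine-tuning chat models. Other providers and libraries expect different formats. The system prompt and contract-clause examples are hypothetical, and the upload and training-job steps are not shown.

```python
import json

# Hypothetical instruction describing the house style the model should learn.
SYSTEM_PROMPT = "You rewrite contract clauses in our house style: short sentences, defined terms in bold."


def to_jsonl(pairs: list[tuple[str, str]], system: str = SYSTEM_PROMPT) -> str:
    """Convert (user input, ideal answer) pairs into chat-format JSONL, one example per line."""
    lines = []
    for user_text, ideal_answer in pairs:
        if not user_text.strip() or not ideal_answer.strip():
            raise ValueError("every example needs a non-empty input and answer")
        record = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": user_text},
                # The assistant turn is the behavior the model should learn to reproduce.
                {"role": "assistant", "content": ideal_answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


examples = [
    ("The Supplier shall, within a period not exceeding thirty (30) days, deliver the Goods.",
     "The **Supplier** must deliver the **Goods** within 30 days."),
    ("Payment shall be effected by the Buyer upon receipt of a valid invoice.",
     "The **Buyer** must pay on receipt of a valid invoice."),
]

training_data = to_jsonl(examples)
print(training_data)  # save as a .jsonl file, then upload it with your provider's fine-tuning tooling
```

In practice you would need many more than two examples, and they should be reviewed carefully, since the model learns whatever patterns, good or bad, the assistant turns contain.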

Use prompt engineering for quick, flexible results without retraining, ideal for testing ideas or small tasks. Fine-tuning is most effective when you require consistent, specialized outputs for repeated use cases. If your needs change often, prompts win; if your domain is fixed, fine-tuning is better.

Prompt engineering shapes the model’s response with carefully crafted instructions. RAG (Retrieval-Augmented Generation) retrieves relevant, up-to-date information from external sources at query time and adds it to the prompt to improve accuracy. Fine-tuning changes the model itself by training it on your examples so it “remembers” patterns, whereas prompts and RAG work without altering the model.
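To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-prompt step. It assumes a tiny in-memory document list and simple word-overlap scoring in place of the embedding search a production system would typically use. The documents are hypothetical, and the final call to a model is not shown.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase word tokens; a real system would typically embed text instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by how many query words they share; drop ones with no overlap.
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]


def rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # The model itself is unchanged; retrieved text is simply added to the prompt.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refund requests must include the order number.",
]
query = "How long do refunds take to be processed?"
print(rag_prompt(query, docs))
```

With this query, only the first document is retrieved. The third is missed because "refund" and "refunds" count as different words here, which is one reason real retrievers match on meaning rather than exact tokens.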

Fine-tuning permanently adapts the model with your data. Prompt chaining breaks complex tasks into smaller steps, where each output becomes the input for the following prompt. Chaining improves reasoning for multi-step tasks, while fine-tuning ensures consistent style, tone, or domain knowledge across all future outputs.
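Prompt chaining can be sketched as a loop in which each step’s output is substituted into the next step’s prompt template. In this minimal illustration, `call_model` is a hypothetical placeholder for whatever LLM client you use, and `fake_model` is a stub that only echoes its input so the flow of data between steps is visible.

```python
from typing import Callable


def run_chain(steps: list[str], initial_input: str, call_model: Callable[[str], str]) -> list[str]:
    """Run prompt templates in order; each output becomes the next step's {input}."""
    outputs = []
    current = initial_input
    for template in steps:
        prompt = template.format(input=current)
        current = call_model(prompt)
        outputs.append(current)
    return outputs


steps = [
    "Extract the key obligations from this contract clause:\n{input}",
    "Rewrite these obligations as a plain-language checklist:\n{input}",
]


def fake_model(prompt: str) -> str:
    # Stub standing in for a real LLM call: wraps the prompt's last line so the chaining is visible.
    return f"[out: {prompt.splitlines()[-1]}]"


outputs = run_chain(steps, "pay within 30 days", fake_model)
print(outputs)  # ['[out: pay within 30 days]', '[out: [out: pay within 30 days]]']
```

Because the chain only rearranges prompts, it works with a base model or a fine-tuned one, so the two techniques can be combined.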