Netflix, the first of its kind in the world of streaming, vividly illustrates how businesses integrate the latest technology to maintain their competitive edge.

While migrating some of its services to containers, Netflix encountered a few challenges, which led to the development of its container management platform, Titus.

Now, Netflix runs services such as video streaming, content encoding, recommendations, machine learning, studio technology, big data, and internal tools within containers, launching as many as 200,000 clusters and half a million containers per day.

Organizations are adopting containerization to develop new applications and improve existing ones to keep pace with the ever-changing digital market. According to an IBM® survey, around 61% of container users said they had used containers for at least half of their new apps in the past two years, while 64% plan to containerize over half of their current apps in the next two years. Enterprise application modernization solutions are essential in this transition, helping businesses stay competitive and agile.

This blog will discuss application containerization's challenges, benefits, and use cases. Before we get into details, let's define containerization.

Application containerization is the practice of running software applications in isolated packages called containers. An application container bundles everything required to run the application, including files, libraries, and environment variables. So, regardless of the operating system they're on, the applications work smoothly without running into compatibility issues.

Containerizing applications speeds development, improves efficiency, and enhances security by separating them from hardware and other software dependencies. Containers can run on any host operating system while being isolated. Containers power major services like Google Search, YouTube, and Gmail. Google also developed Kubernetes and Knative, popular open-source platforms for managing containers and applications.

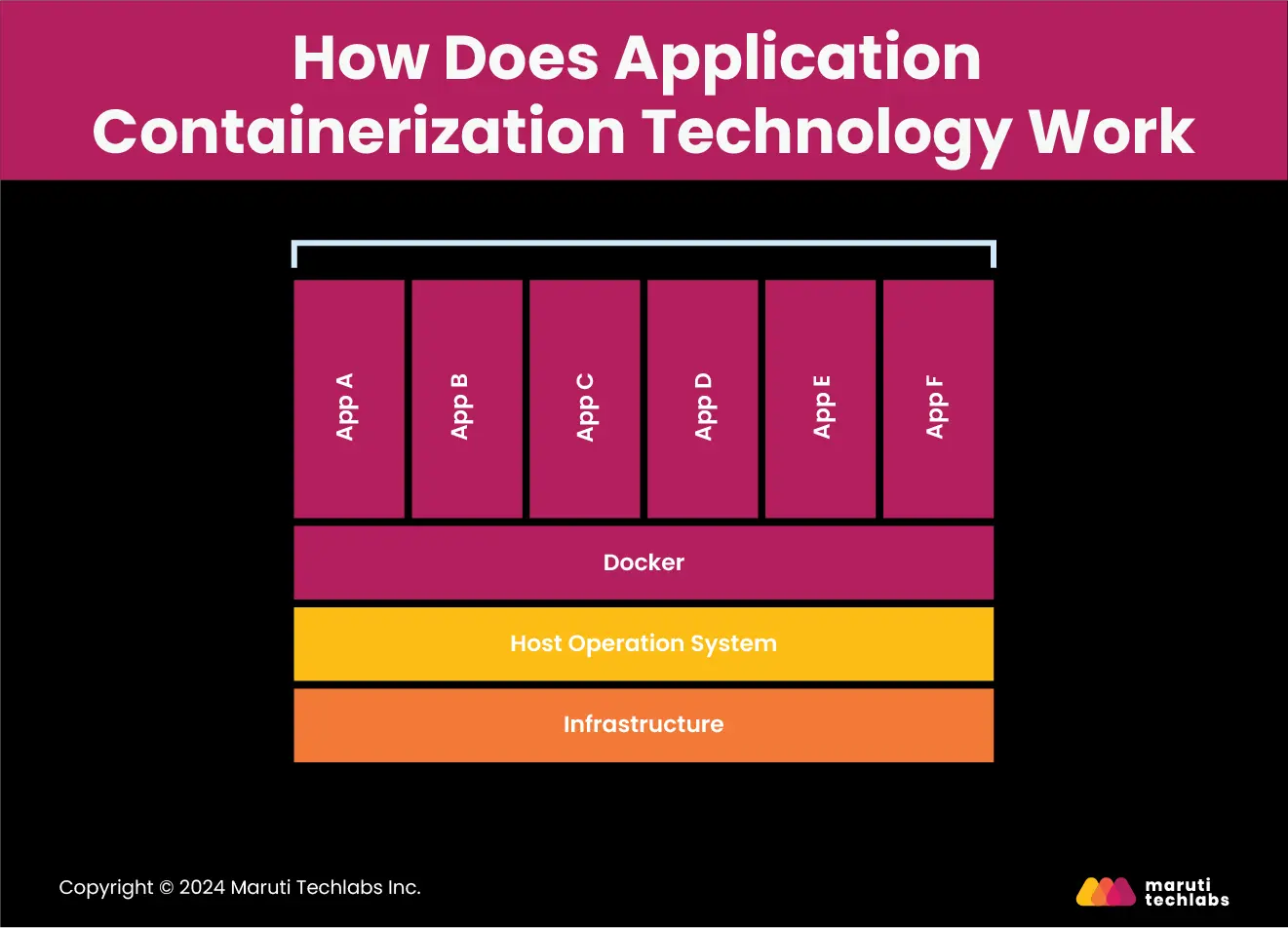

Containerization packages code authored on one system, along with its corresponding configurations, dependencies, and libraries, into an image. These images run on container engines that are compatible with various platforms.

The primary aim of containers is to isolate software from differences between computing environments. This facilitates consistent code execution across different platforms, regardless of variations in development environments and practices.

Furthermore, the container engine presents each container with what looks like its own operating system. Nevertheless, containers are distinct from the host operating system, whose kernel they share.

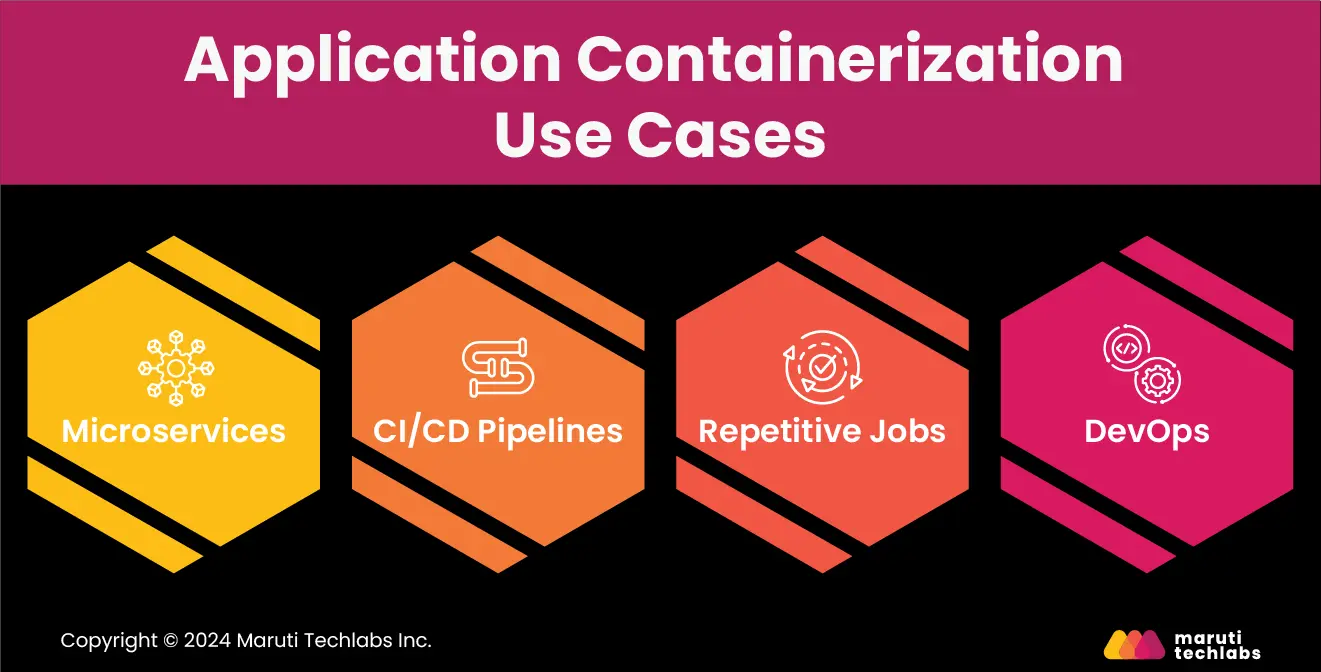

In contemporary business landscapes, containers are frequently used to host programs, and they work particularly well for the following use cases:

Applications based on microservices comprise numerous separate parts, most of which are deployed inside containers. Together, the various containers form one organized application. This design technique makes scaling and upgrading more effective. When load increases, only the containers under the highest load need to be scaled, not the entire application. Similarly, individual containers can be modified without touching the rest of the program.
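To make the scaling point concrete, here is a minimal Python sketch. It assumes a proportional rule of the kind Kubernetes documents for its Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current load / target load)). The service names, the load figures, and the `desired_replicas` and `plan_scaling` helpers are hypothetical and only serve as an illustration.

```python
import math
from typing import Dict, Tuple


def desired_replicas(current_replicas: int, current_load: float, target_load: float) -> int:
    """Proportional rule: resize so the per-replica load approaches the target."""
    if current_replicas < 1 or target_load <= 0:
        raise ValueError("need at least one replica and a positive target load")
    return max(1, math.ceil(current_replicas * current_load / target_load))


# Hypothetical services: (running replicas, average CPU % per replica).
SERVICES: Dict[str, Tuple[int, float]] = {
    "catalog": (3, 40.0),
    "checkout": (2, 95.0),
    "search": (4, 60.0),
}
TARGET_CPU = 60.0  # assumed target utilisation per replica


def plan_scaling(services: Dict[str, Tuple[int, float]], target: float) -> Dict[str, int]:
    """Compute a replica count per service; each service is sized independently."""
    return {
        name: desired_replicas(replicas, load, target)
        for name, (replicas, load) in services.items()
    }


if __name__ == "__main__":
    for name, replicas in plan_scaling(SERVICES, TARGET_CPU).items():
        print(f"{name}: {replicas} replicas")
```

In this sketch only the overloaded `checkout` service grows, while the lightly loaded `catalog` service shrinks and `search` stays as it is, which is the per-container scaling the paragraph describes.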

Containerized apps enable teams to test applications in parallel and accelerate their Continuous Integration/Continuous Delivery (CI/CD) pipelines. Additionally, testing a containerized application in a test environment gives a close representation of its performance in production because containers are portable between host systems.

Batch and database jobs are periodic background tasks that work well with containers. Containers let each job run without interfering with other concurrent jobs.

Containerized apps make it quick to create a consistent, lightweight runtime environment for an application. This helps DevOps teams build, test, launch, and iterate on applications as they wish.

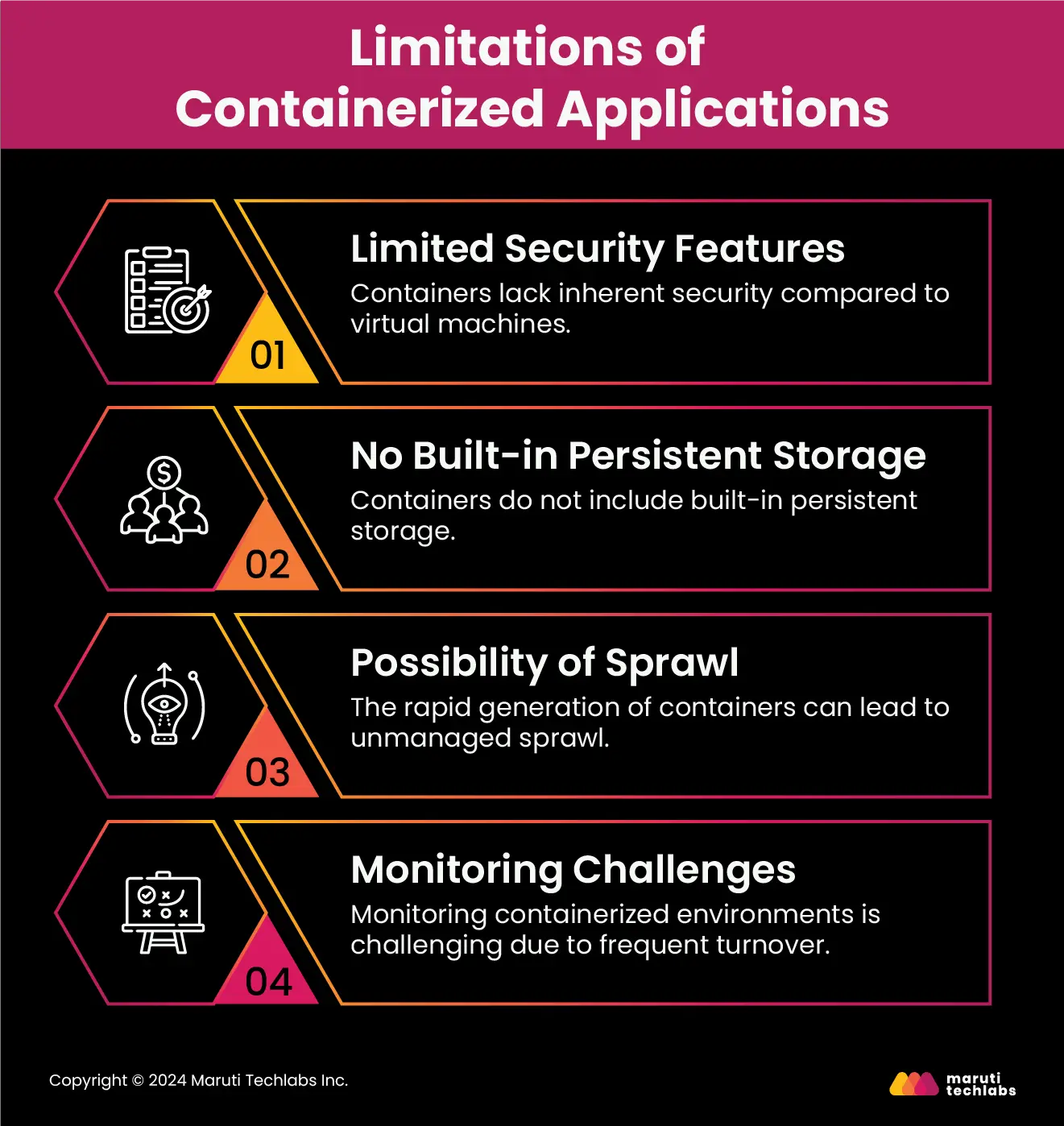

Despite being extremely beneficial, containers come with some limitations:

Namespaces enable each container on a host to get allocated resources from the host operating system and separate the processes running inside the container from those outside it. Any vulnerability in the host operating system might pose a threat to all its containers because they run on the same OS. Moreover, if network settings have been compromised, an attacker who gains access to one container can easily access other containers or the host.

Data stored inside a container does not outlive it: once the container is removed, the data is gone. A persistent file system is required to save the data. Most orchestration tools support persistent storage, although vendors' products differ in quality and execution.
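The difference between container-local data and persistent storage can be sketched in a few lines of Python. This is only an analogy, not a container engine API: a temporary directory stands in for the container's own writable file system, and an optional `volume` path stands in for persistent storage mounted from outside. The `run_container` helper and the file name are hypothetical.

```python
import tempfile
from pathlib import Path
from typing import Optional


def run_container(volume: Optional[Path] = None) -> Path:
    """Simulate one container run and return the path the app wrote to.

    The temporary directory plays the role of the container's own file
    system and is deleted when the "container" goes away.
    """
    with tempfile.TemporaryDirectory() as scratch:
        data_dir = volume if volume is not None else Path(scratch)
        target = data_dir / "orders.txt"
        target.write_text("order-1001\n")
        return target  # the scratch directory is cleaned up on exit


if __name__ == "__main__":
    lost = run_container()
    print("without a volume, data survives:", lost.exists())

    with tempfile.TemporaryDirectory() as host_dir:
        kept = run_container(volume=Path(host_dir))
        print("with a volume, data survives:", kept.exists())
```

Data written to the scratch directory disappears with the simulated container, while data written to the externally provided directory remains readable afterwards.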

While the rapid generation of containers is beneficial, it can also lead to unmanaged container sprawl and increased administrative complexity.

Teams often struggle to keep track of running containers because they spin up and down rapidly. Tracking containers manually is difficult because they churn 12 times faster than regular hosts.
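One common alternative to manual tracking is to derive the list of running containers from lifecycle events. The Python sketch below assumes the container engine or orchestrator can supply a stream of start and stop events. The `Event` shape, the container names, and the `running_containers` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class Event:
    container_id: str
    action: str  # "start" or "stop" (assumed event vocabulary)


def running_containers(events: Iterable[Event]) -> Set[str]:
    """Replay lifecycle events in order and return the containers still running."""
    running: Set[str] = set()
    for event in events:
        if event.action == "start":
            running.add(event.container_id)
        elif event.action == "stop":
            # discard() tolerates a stop for a container we never saw start
            running.discard(event.container_id)
    return running


EVENTS = [
    Event("web-1", "start"),
    Event("job-7", "start"),
    Event("web-1", "stop"),
    Event("web-2", "start"),
]

if __name__ == "__main__":
    print(sorted(running_containers(EVENTS)))
```

Because the inventory is rebuilt from events rather than maintained by hand, it stays accurate however quickly containers come and go.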

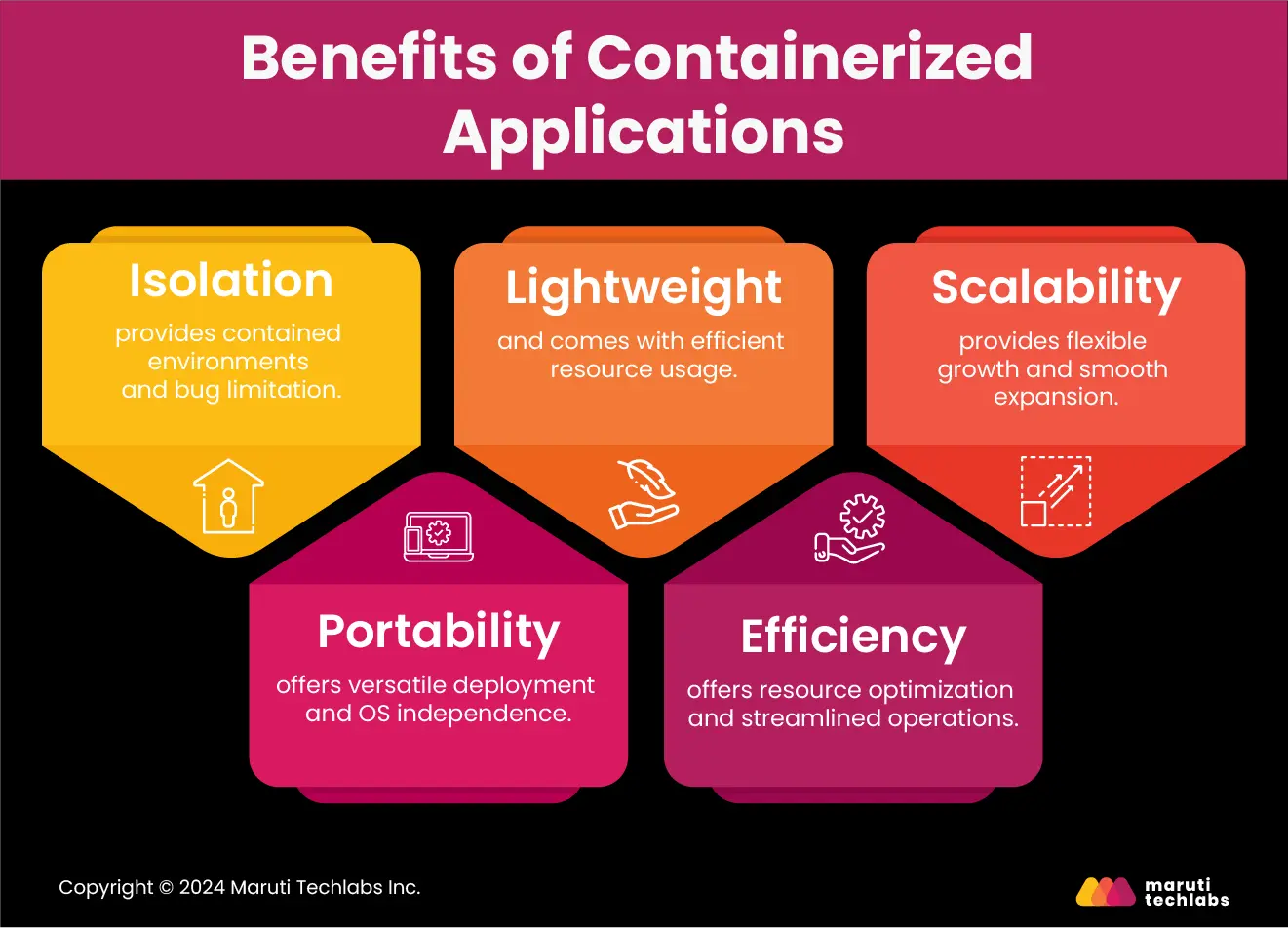

Application containerization enhances speed, efficiency, and security by isolating various functions from hardware dependencies and other software components. Containerized applications offer a host of advantages that include:

Since containerized applications exist in an isolated environment away from other apps and system components, any issues occurring within one app do not affect others or the underlying system components. This containment effectively limits the scope of potential bug incidents.

Because they are independent of the operating system, containerized applications are portable across different environments, such as servers, virtual machines, developers' computers, and the cloud.

Containers are more efficient than virtual machines since they do not carry the entire operating system, making them lighter.

Containerized applications effectively use a machine's resources by sharing computing capabilities and application layers, allowing multiple containers to run simultaneously on the same machine or virtual environment.

Increasing container instances to accommodate growing application demands is a smooth process in application containerization.

While VMs and containers center on ‘virtualizing’ a particular computational resource, containers are often favored over VMs. This is because VMs require more overhead when compared with containerization technologies.

One advantage of virtual machines (VMs) is that, regardless of the OS, they allow a corporation to run several virtual servers on one or more physical systems. Containers, in turn, package a single application and, being lightweight, can spin instances up and down in seconds.

Let us look at examples to understand how containerization helps companies cut costs.

Challenge: Spotify struggled to manage its growing workload as the platform's user base climbed to over 200 million monthly active users.

Solution: To handle this, Spotify-

Result: In terms of implementation, the company -

Challenge: Financial Times, the newspaper giant, dealt with an enormous volume of content on its platform. The team’s goal was to minimize the cost of operating its AWS servers.

Solution: They accomplished this by upgrading their framework and shifting to containers, resulting in an 80% reduction in cloud server management costs. Here are some strategies they employed while using Docker as a container -

Result: The development team focused on supporting the health of the tech cluster and minimizing server costs. As a result, they-

Challenge: Pinterest had to deal with additional work and hosting costs for the numerous images posted on the site. To make suitable investments, it looked for new technology.

Solution: The team aimed to -

Result: Here are the containerized processes that helped Pinterest avoid hefty expenses in the long run -

The above examples demonstrate that containerization can reduce costs and enhance productivity. Mainstream companies such as Spotify, Financial Times, and Pinterest have used containers to address the challenges of handling additional workloads and operational costs and improving the efficiency of the development and delivery processes. Containerization is not only an efficient way of resource management but also promotes change and growth in complex environments.

Some of the popular platforms for containerized applications include:

Docker is an open-source software platform for building, deploying, and managing virtualized application containers on a shared operating system (OS), along with an ecosystem of associated tools.

LXC is a Linux container runtime comprising tools, templates, libraries, and language bindings. It's fairly low-level, highly adaptable, and includes nearly all containment features supported by the upstream kernel.

rkt, also called Rocket, is a container engine that lets you manage individual containers or work with Docker containers while giving you more flexibility and control over your containerized applications.

CRI-O is an implementation of the Kubernetes Container Runtime Interface (CRI) that enables the use of OCI-compatible runtimes. It is frequently used with Kubernetes in place of Docker.

The core components of a standard containerized application setup consist of three main elements:

Tools like Docker, CRI-O, containerd, and Windows Containers reduce the administrative overhead of managing applications and make them easy to launch and move between environments.

Platforms such as Kubernetes and OpenShift manage large numbers of containers, automate deployment, and guarantee smooth operation.
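Orchestrators are often described as continuously comparing the state you asked for with the state they observe and then closing the gap. The Python sketch below illustrates that idea in its simplest form. It is not how Kubernetes or OpenShift are implemented; the service names and the `reconcile` helper are hypothetical.

```python
from typing import Dict, List, Tuple

Action = Tuple[str, str, int]  # (verb, service, number of containers)


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[Action]:
    """Return the start/stop actions that move the actual state to the desired one."""
    actions: List[Action] = []
    for service in sorted(set(desired) | set(actual)):
        want = desired.get(service, 0)
        have = actual.get(service, 0)
        if want > have:
            actions.append(("start", service, want - have))
        elif have > want:
            actions.append(("stop", service, have - want))
    return actions


DESIRED = {"api": 3, "worker": 2}
ACTUAL = {"api": 1, "worker": 2, "legacy": 1}

if __name__ == "__main__":
    for verb, service, count in reconcile(DESIRED, ACTUAL):
        print(f"{verb} {count} x {service}")
```

Running such a comparison in a loop is what lets a platform restart failed containers and retire unwanted ones without an operator stepping in.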

Platforms like Amazon EKS and Google GKE make managing Kubernetes easy. They simplify setup and operation even for organizations with less experience.

Containerization plays a crucial role in smooth and successful DevOps implementation, promoting the development of applications that could have been difficult to build on a system natively. Whether a startup or a big enterprise, containerization offers agility, portability, flexibility, and speed. Containers make various environments like development, testing, and production identical. So, you don't need to depend on operations teams to ensure that different servers run the same software.

Introducing new tools and technologies may be difficult, and complex applications can increase costs. At Maruti Techlabs, we provide enterprise application modernization solutions that combine redevelopment, redesign, refactoring, or replacement of legacy systems. Our professionals have migrated fully functional applications to a microservices architecture and containerized them. Containerization, i.e., packaging your app components separately from one another, makes it simple to scale them and move them between environments.

We also support our clients through every stage of IT process modernization, infrastructure modernization, or cloud migration.

Connect with us and simplify your development and deployment processes with application containerization!