Prompt Engineering For DevOps: 9 Best Practices In 2026

In a constantly changing digital ecosystem, DevOps has emerged as a critical approach to software development and IT operations. Implementing DevOps shortens the development cycle, enhances software quality, and facilitates automation, continuous delivery, and teamwork.

Today, generative AI (gen AI) is helping DevOps teams reach new levels of creativity and efficiency. As a result, prompt engineering has become an essential competency for DevOps engineers.

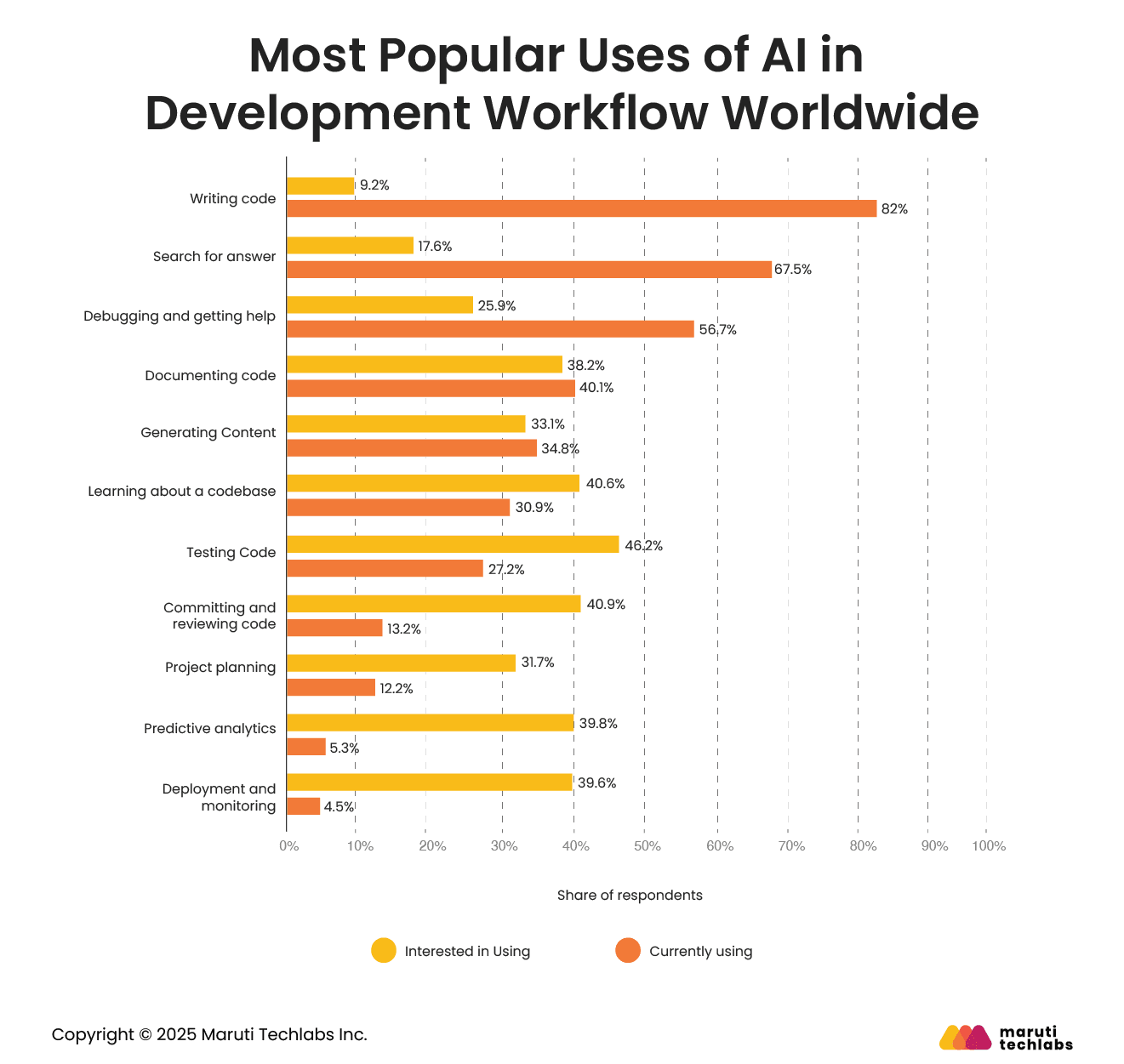

A 2024 Stack Overflow survey reported that developers currently using AI tools mostly use them to write code (82%). This shows how far generative AI has made its way into programming.

This article provides a deep dive into the importance of prompt engineering in DevOps, explores the distinction between blind prompting and prompt engineering, outlines top prompt engineering best practices, and highlights key use cases you should be aware of in 2025.

“A ‘prompt’ comprises a query or distinct instructions used to generate the required responses from an artificial intelligence system or machine learning model.”

It serves as a task list for an AI system, helping the model understand what is expected of it. The quality of the AI’s output depends directly on the quality and clarity of the prompt.

A prompt can be an instruction, a question, or even a statement presented with specified context. For instance, a prompt could be a general query, such as “List the best hill stations to visit in India in June.”

For programming tasks, a prompt can be a piece of code with a missing function that the model is asked to add.
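As a rough illustration, the Python sketch below wraps such a stub in explicit instructions. The `parse_duration` function and the `build_completion_prompt` helper are hypothetical names chosen for this example, and no particular AI API is assumed; the snippet only assembles the prompt text.

```python
import textwrap

# Hypothetical stub: the body of `parse_duration` is missing, and the
# prompt asks the model to supply it.
STUB = textwrap.dedent('''
    def parse_duration(value: str) -> int:
        """Convert strings such as "90s", "5m", or "2h" to seconds."""
        # TODO: implement
''').strip()


def build_completion_prompt(stub: str, language: str = "Python") -> str:
    """Wrap a code stub in explicit completion instructions."""
    return (
        f"Complete the missing function body in the {language} code below. "
        "Keep the existing signature and docstring, and return only the "
        "finished function.\n\n"
        f"{stub}\n"
    )


print(build_completion_prompt(STUB))
```

Stating what to keep (signature, docstring) and what to return narrows the model's output to something that can be pasted back into the codebase.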

“‘Prompt Engineering’ is the process of purposefully designing and refining prompts to generate desired responses from generative AI systems.”

This process facilitates meaningful interactions between users and generative AI systems through well-structured, precise prompts. By presenting contextually relevant prompts coherently, users can leverage the full potential of AI solutions.
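One way to make a prompt well structured is to separate its parts (role, context, task, constraints, and output format) explicitly. The sketch below assumes that layout; `PromptSpec` is a hypothetical class, and the section labels are a common convention rather than a requirement of any particular model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a well-structured prompt."""
    role: str
    context: str
    task: str
    constraints: list[str] = field(default_factory=list)
    output_format: str = ""

    def render(self) -> str:
        # Emit each part under a plain-text label so the model can tell
        # background context apart from the actual instruction.
        parts = [
            f"Role: {self.role}",
            f"Context: {self.context}",
            f"Task: {self.task}",
        ]
        if self.constraints:
            bullets = "\n".join(f"- {c}" for c in self.constraints)
            parts.append(f"Constraints:\n{bullets}")
        if self.output_format:
            parts.append(f"Output format: {self.output_format}")
        return "\n\n".join(parts)


spec = PromptSpec(
    role="You are a senior DevOps engineer.",
    context="Our services run on Kubernetes and deploy through GitHub Actions.",
    task="Write a Kubernetes readiness probe for an HTTP service that exposes /healthz on port 8080.",
    constraints=["Keep initialDelaySeconds under 30 seconds", "Do not change the container image"],
    output_format="A single YAML snippet followed by a short explanation.",
)
print(spec.render())
```

Keeping the parts separate makes it easy to reuse the same role and context while varying only the task.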

In software development, prompt engineering supports the creation of high-quality software by bringing a systematic approach to how engineers work with AI tools. Incorporating prompt engineering principles can streamline development, improve results, and increase customer satisfaction.

DevOps leverages the power of communication and collaboration to align software development and IT operations, facilitating quick and effective software delivery by enabling both teams to work cohesively. With DevOps, organizations experience benefits such as faster time-to-market, improved efficiency, and enhanced software quality, thanks to its continuous development, integration, testing, and deployment environment.

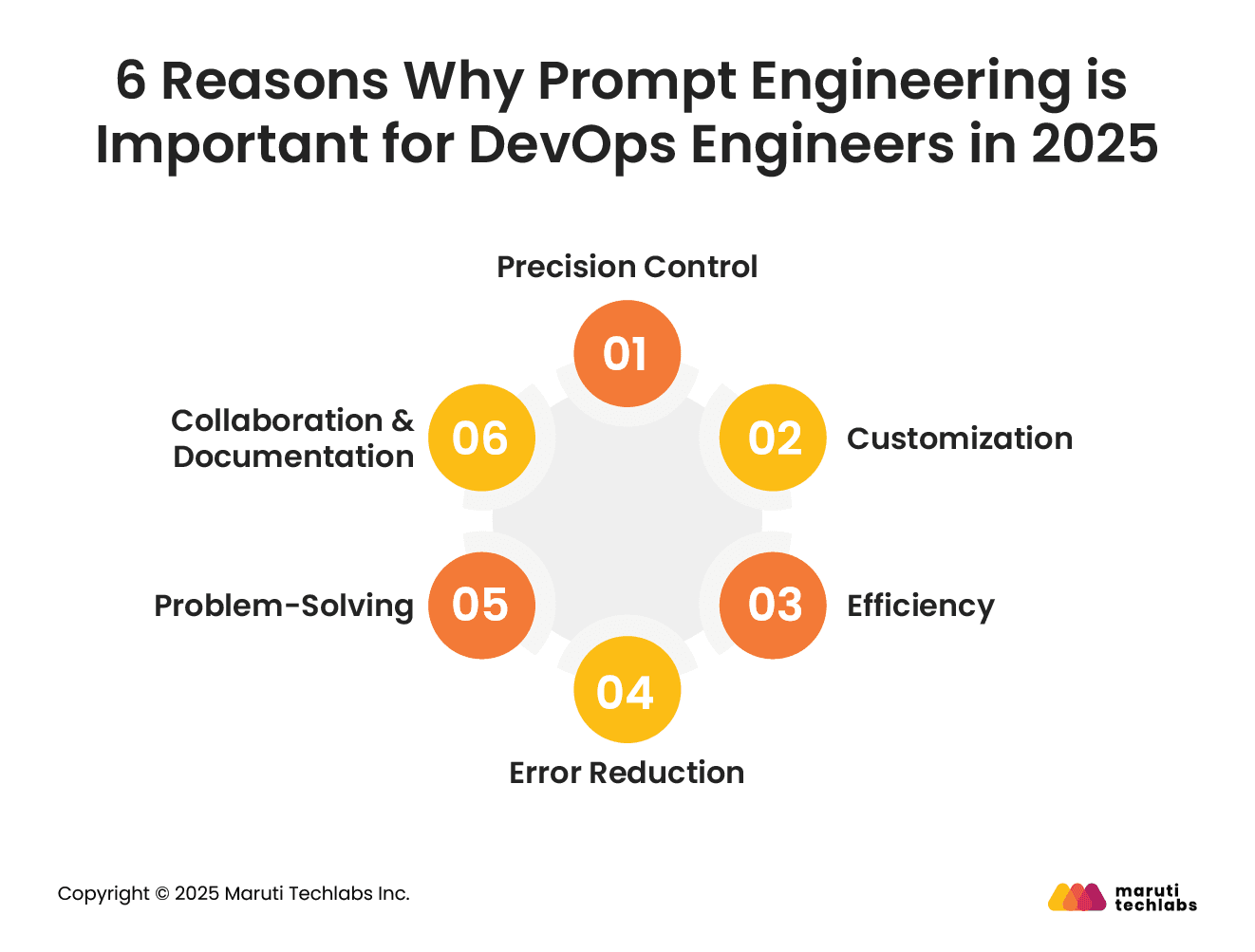

Here are the key reasons why prompt engineering matters for DevOps engineers.

DevOps engineers deal with complicated workflows and systems. Prompt engineering allows them to articulate their queries precisely and receive relevant responses. Specific prompts enable targeted interactions, giving the engineer more control over the AI’s output.

Many DevOps tasks require customization. Prompt engineering enables engineers to design contextually relevant prompts tailored to their specific tasks and requirements. Supplying this context helps the AI model understand the unique requirements and produce responses that fit your DevOps workflow.

DevOps work environments are generally fast-paced, with tight deadlines. Precisely designed prompts facilitate clear communication with AI models, yielding accurate results faster. They also help automate repetitive tasks, freeing engineers to focus on other crucial work and improving overall process efficiency.

Clear, tailored prompts reduce the chance of misinterpretation. Structuring prompts correctly enables AI models to comprehend the requirements accurately, minimizing errors in their output. This helps prevent mishaps in configuration, deployment, and troubleshooting tasks.
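A practical complement to a clear prompt is to request a machine-checkable reply and validate it before acting on it. The sketch below assumes the model was asked to return JSON with three hypothetical keys (`service`, `replicas`, `image`); `parse_deployment_suggestion` and the key names are illustrative, not part of any tool's API.

```python
import json

# Hypothetical instruction sent with the request so the reply is machine-checkable.
FORMAT_INSTRUCTION = (
    "Reply with a single JSON object with exactly these keys: "
    '"service" (string), "replicas" (integer, at least 1), "image" (string). '
    "Do not include any other text."
)

# Expected type for each required key in the reply.
REQUIRED_FIELDS = {"service": str, "replicas": int, "image": str}


def parse_deployment_suggestion(raw: str) -> dict:
    """Parse a model reply and check it before anything is applied.

    Raises ValueError so a pipeline can stop instead of acting on bad output.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if key not in data:
            raise ValueError(f"missing key: {key}")
        # Exact type check, so JSON true/false is not accepted as an integer.
        if type(data[key]) is not expected_type:
            raise ValueError(f"{key} must be of type {expected_type.__name__}")
    if data["replicas"] < 1:
        raise ValueError("replicas must be at least 1")
    return data


suggestion = parse_deployment_suggestion(
    '{"service": "api", "replicas": 3, "image": "api:1.4.2"}'
)
print(suggestion)
```

Rejecting malformed replies at this step means a misread prompt surfaces as an error rather than as a faulty deployment.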

When prompts clearly articulate complex issues, AI tools can return more insightful solutions. In this way, prompt engineering strengthens the problem-solving capabilities of DevOps teams.

ChatGPT’s ability to share conversations through links fosters collaboration and knowledge exchange. A shared link retains the context of the conversation, including its prompts and responses, so teammates can follow an ongoing discussion and build on it.

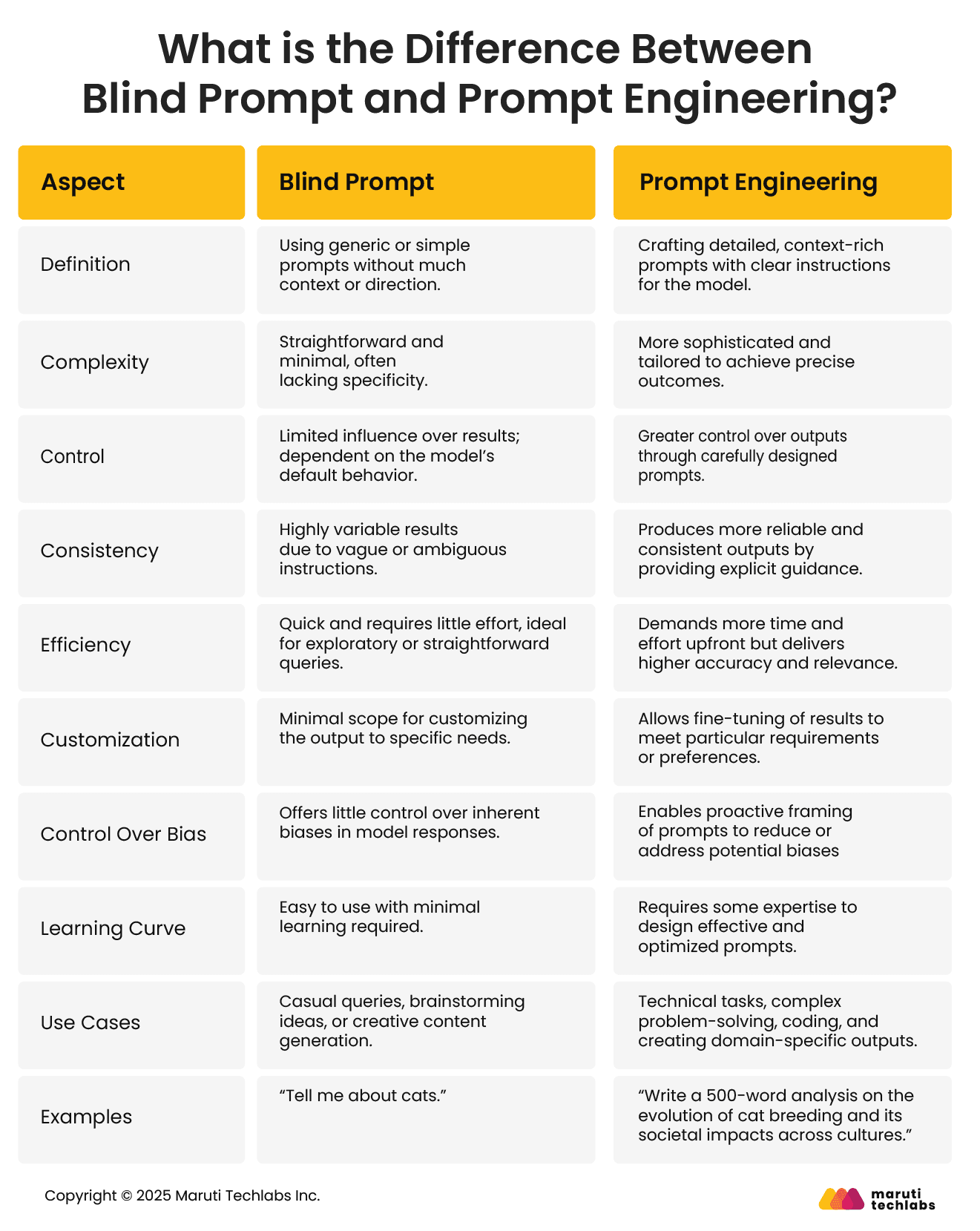

| Aspect | Blind Prompt | Prompt Engineering |
|---|---|---|
| Definition | Using generic or simple prompts without much context or direction. | Crafting detailed, context-rich prompts with clear instructions for the model. |
| Complexity | Straightforward and minimal, often lacking specificity. | More sophisticated and tailored to achieve precise outcomes. |
| Control | Limited influence over results; dependent on the model’s default behavior. | Greater control over outputs through carefully designed prompts. |
| Consistency | Highly variable results due to vague or ambiguous instructions. | Produces more reliable and consistent outputs by providing explicit guidance. |
| Efficiency | | Demands more time and effort upfront but delivers higher accuracy and relevance. |
| Customization | Minimal scope for customizing the output to specific needs. | Allows fine-tuning of results to meet particular requirements or preferences. |
| Control Over Bias | | Enables proactive framing of prompts to reduce or address potential biases. |
| Learning Curve | | Requires some expertise to design effective and optimized prompts. |
| Use Cases | Casual queries, brainstorming ideas, or creative content generation. | Technical tasks, complex problem-solving, coding, and creating domain-specific outputs. |
| Examples | | “Write a 500-word analysis on the evolution of cat breeding and its societal impacts across cultures.” |

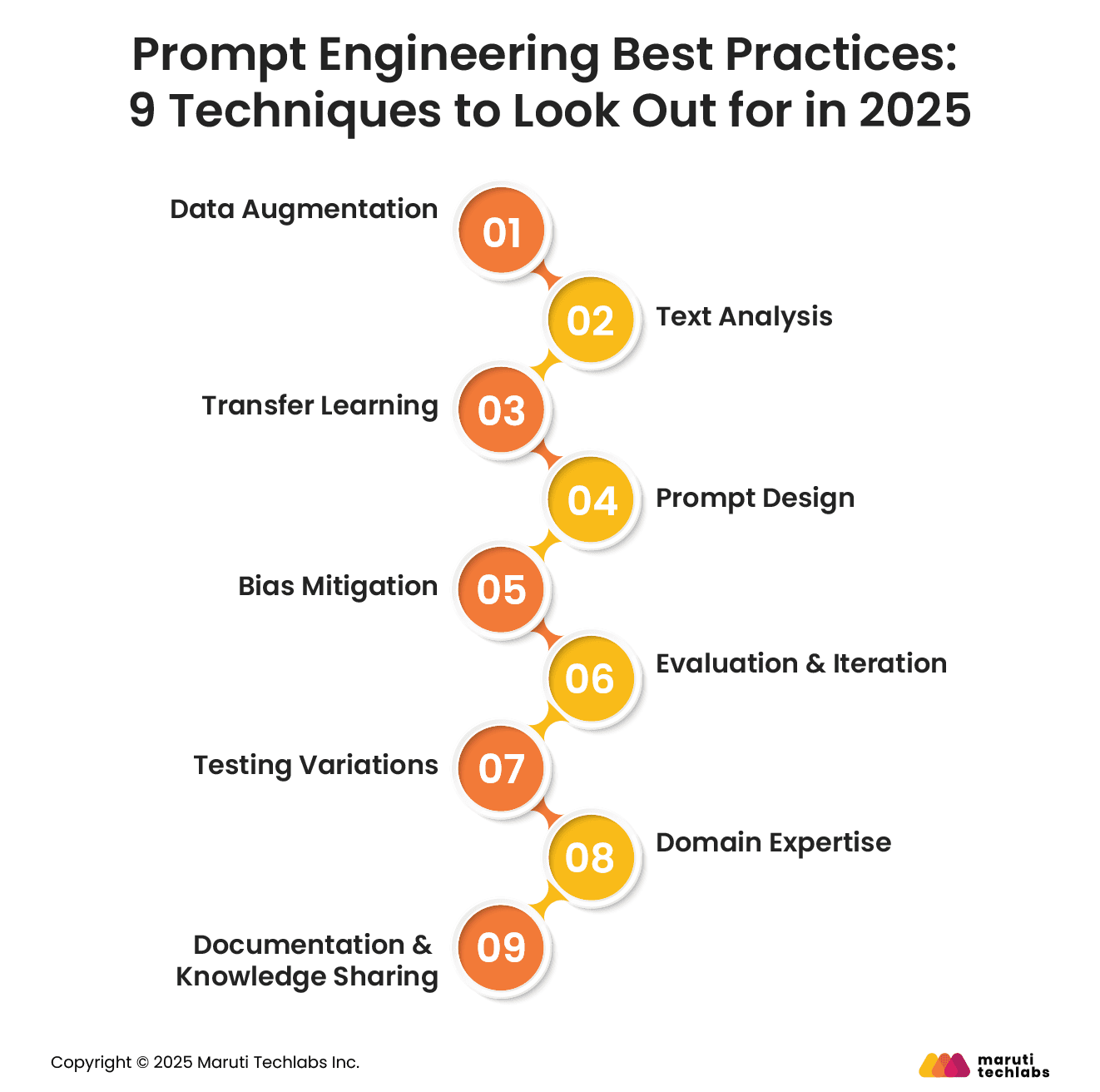

Let’s look at the top best practices and techniques for leveraging the full potential of large language models.

Share knowledge and learnings with your team or community by documenting your prompt engineering strategies, best practices, and successful approaches.
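Documentation works best when prompts are stored and versioned like code. The following sketch assumes a simple in-memory library keyed by name and version, built on Python's standard `string.Template`; `PROMPT_LIBRARY`, `render`, and the `summarize_incident` prompt are hypothetical.

```python
from string import Template
from typing import Optional

# Hypothetical team prompt library: each entry is keyed by (name, version),
# so every change to a prompt is recorded rather than overwritten.
PROMPT_LIBRARY = {
    ("summarize_incident", 1): Template(
        "Summarize this incident for an on-call handover:\n$details"
    ),
    ("summarize_incident", 2): Template(
        "Summarize this incident for an on-call handover in at most $max_words "
        "words. List impact, root cause (if known), and next steps.\n$details"
    ),
}


def latest_version(name: str) -> int:
    """Return the highest stored version number for a prompt name."""
    versions = [v for (n, v) in PROMPT_LIBRARY if n == name]
    if not versions:
        raise KeyError(name)
    return max(versions)


def render(name: str, version: Optional[int] = None, **values: object) -> str:
    """Fill a stored template; defaults to the newest version."""
    if version is None:
        version = latest_version(name)
    # substitute() raises KeyError if a placeholder is left unfilled.
    return PROMPT_LIBRARY[(name, version)].substitute(**values)


print(render("summarize_incident", details="DB failover at 02:10", max_words=80))
```

In practice, the same structure could live in a shared repository so that changes to a prompt go through code review like any other change.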

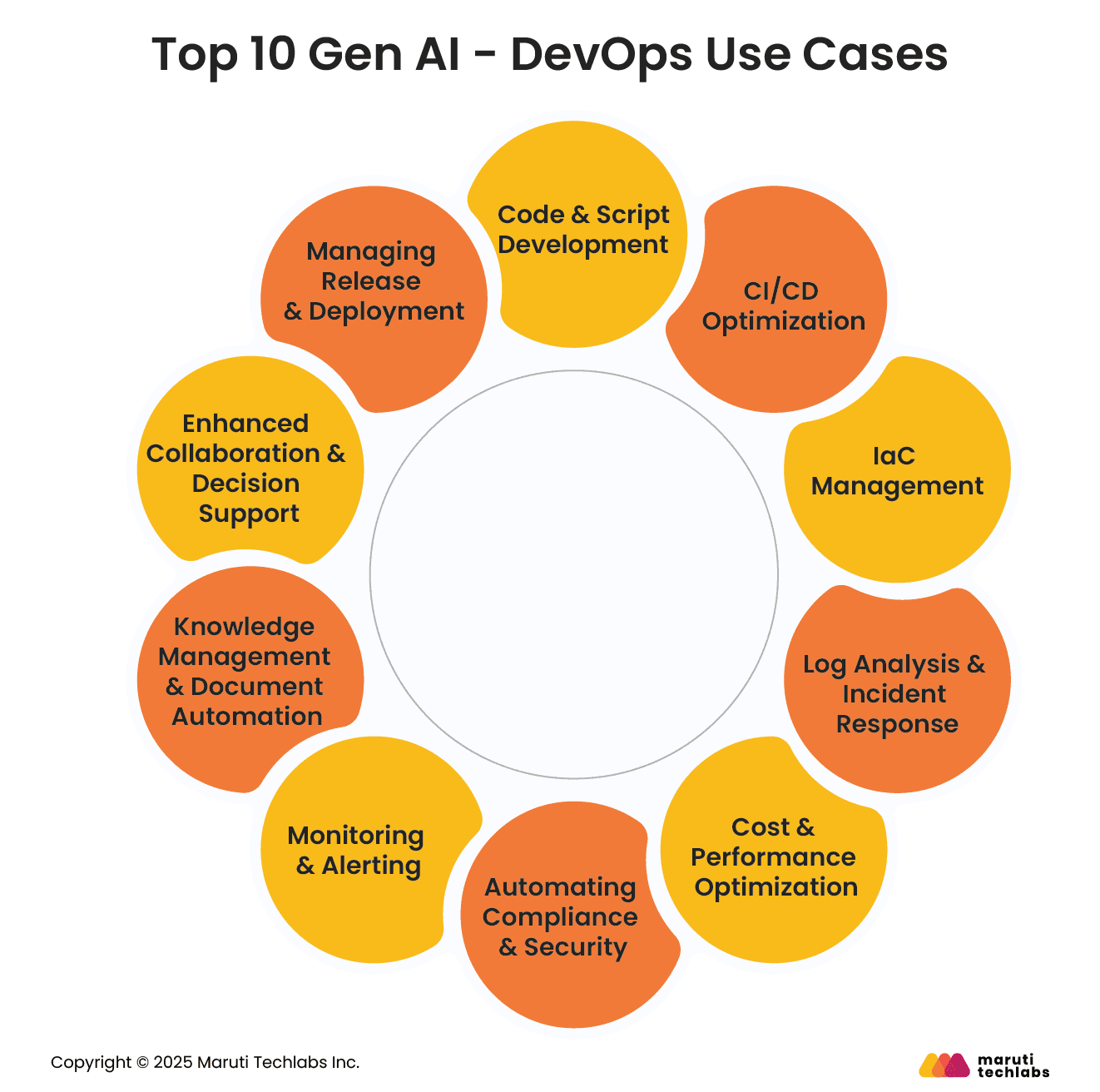

Gen AI in DevOps offers various optimization benefits, leading to more reliable and faster software development, deployment, and management. Here are some of the most widely adopted use cases in 2025.

Prompt engineering is transforming how DevOps teams leverage AI tools to boost productivity and precision. From automating complex workflows and improving CI/CD pipelines to streamlining incident response and generating accurate infrastructure documentation, prompt engineering empowers teams to unlock Gen AI’s full potential.

It delivers greater control, consistency, and efficiency in DevOps processes, enabling faster innovation and reduced operational bottlenecks.
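As one example of applying prompt engineering to CI/CD, the sketch below builds a triage prompt from a failed job's log. It assumes the last few dozen lines usually contain the error, which is a simplification; `build_ci_triage_prompt` and its `max_lines` default are hypothetical choices, and the model call itself is left out.

```python
def build_ci_triage_prompt(log_text: str, job_name: str, max_lines: int = 50) -> str:
    """Build a failure-triage prompt from the tail of a CI log (hypothetical helper)."""
    if max_lines < 1:
        raise ValueError("max_lines must be at least 1")
    lines = log_text.splitlines()
    # Keep only the tail so the prompt stays within the model's context window.
    tail = lines[-max_lines:]
    omitted = len(lines) - len(tail)
    header = f"[{omitted} earlier lines omitted]\n" if omitted else ""
    return (
        f"The CI job '{job_name}' failed. Using only the log excerpt below, "
        "identify the most likely cause, quote the line that shows it, and "
        "suggest one fix. If the excerpt is insufficient, say so.\n\n"
        f"Log excerpt:\n{header}" + "\n".join(tail)
    )


sample_log = "\n".join(f"step {i}" for i in range(120))
print(build_ci_triage_prompt(sample_log, "build"))
```

Asking the model to quote the relevant line and to admit when the excerpt is insufficient makes its answer easier to verify.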

At Maruti Techlabs, we specialize in helping businesses integrate AI-driven solutions within their DevOps practices. Our DevOps Service ensures your teams can leverage prompt engineering for more intelligent automation and resilient systems.

Ready to elevate your DevOps capabilities? Partner with Maruti Techlabs to build a future-ready, AI-powered DevOps ecosystem.

Not sure where to start? Use our AI Readiness Assessment Tool to evaluate your current DevOps maturity and uncover opportunities for intelligent automation.

Prompt engineering is the practice of designing precise, context-rich inputs to guide AI models like ChatGPT. It helps achieve desired, consistent, and relevant outputs by framing instructions clearly and strategically.

A non-strategy in prompt engineering would be relying solely on generic, vague prompts without refinement or iteration, as it limits control over the AI’s responses and leads to inconsistent or irrelevant outputs.

Iteration involves refining a prompt multiple times to improve results. For example, you might adjust “Summarize this article” to “Summarize this article in 100 words, focusing on key business insights.”
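The refinement in that example can be sketched as a tiny helper that appends constraints and keeps every version, so the outputs of each revision can be compared. `refine` is a hypothetical name, and appending comma-separated constraints is just one simple refinement strategy.

```python
def refine(prompt: str, *constraints: str) -> str:
    """Return a new prompt version with extra constraints appended."""
    if not constraints:
        return prompt
    base = prompt.rstrip(". ")
    return f"{base} {', '.join(constraints)}."


# Keep every version so the outputs of each one can be compared later.
history = ["Summarize this article"]
history.append(refine(history[-1], "in 100 words", "focusing on key business insights"))
for version, text in enumerate(history, start=1):
    print(f"v{version}: {text}")
```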

In ambiguous situations, prompt engineering helps clarify intent by adding context, constraints, and examples, ensuring the AI produces relevant and accurate responses despite uncertainty in the initial query.
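Adding examples (few-shot prompting) is one common way to resolve ambiguity. The sketch below assumes a team wants alerts classified by severity; the example alerts, the labels, and `build_few_shot_prompt` are hypothetical, and the explicit "unknown" option gives the model a way out when the input is too vague.

```python
# Hypothetical labelled examples that pin down what each severity means
# for this team; the alerts and labels are illustrative only.
EXAMPLES = [
    ("Disk usage at 81% on build-agent-3", "low"),
    ("p95 latency on checkout API doubled for 10 minutes", "high"),
    ("Primary database unreachable from all app pods", "critical"),
]
ALLOWED = ("low", "medium", "high", "critical")


def build_few_shot_prompt(alert: str) -> str:
    """Combine instructions, worked examples, and the new alert into one prompt."""
    lines = [
        "Classify the alert's severity as one of: " + ", ".join(ALLOWED) + ".",
        "If the alert does not contain enough information, answer 'unknown'.",
        "",
    ]
    for text, label in EXAMPLES:
        lines.append(f"Alert: {text}\nSeverity: {label}\n")
    # Leave the final label blank for the model to fill in.
    lines.append(f"Alert: {alert}\nSeverity:")
    return "\n".join(lines)


print(build_few_shot_prompt("Certificate for api.example.com expires in 5 days"))
```

The examples show the model how this team draws the line between severities, which a bare instruction would leave open to interpretation.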