11 Proven FinOps Techniques to Keep Cloud Bills in Check

Large Language Models (LLMs) have revolutionized the tech landscape, powering breakthroughs in natural language understanding, content generation, and enterprise automation.

Organizations now use LLMs to reimagine customer service, accelerate R&D, and create hyper-personalized experiences. However, this innovation comes with a steep price. Businesses often underestimate these expenses until cloud bills skyrocket within months.

A Vanson Bourne study commissioned by Tangoe found that cloud spending is up 30% on average, mainly due to the adoption of generative AI and traditional AI technologies. Additionally, 72% of IT and financial leaders agree that GenAI-driven cloud spending is becoming unmanageable.

This blog examines the key cost drivers of LLM development and offers practical strategies, from implementing FinOps principles to smarter workload management, for keeping LLM development sustainable and cost-effective.

“Large Language Models or LLMs are a type of Artificial Intelligence program that are trained on massive data sets, helping them understand and generate content, amongst other tasks.”

LLMs have become extremely popular since generative AI moved to the forefront of public interest. Their influence is such that almost all businesses across the globe are planning to adopt AI across business functions and use cases.

On-premises LLMs require servers and other infrastructure that need regular maintenance. Cloud-hosted LLMs remove the need to buy and maintain that infrastructure yourself.

While on-premises deployments offer increased control over data, they often don’t provide the scalability and flexibility of cloud solutions. Additionally, cloud-based LLMs are well-suited for businesses that require agility, as they operate on a pay-as-you-go model.

LLMs fall primarily into two types: open-source LLMs and managed LLMs. Each offers specific benefits, but both come with costs, and examining those costs plays a critical role in LLM cost optimization.

Here are the top costs associated with building LLMs.

Direct charges depend on an LLM's token usage, and inference is the critical factor here. For instance, OpenAI has charged $0.03 per 1,000 input tokens and $0.06 per 1,000 output tokens for GPT-4, which is significantly higher than the price of the GPT-3.5 model.
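To make the arithmetic concrete, here is a minimal sketch that estimates spend from token counts. The prices are the GPT-4 figures quoted above and serve purely as placeholders; the function names and traffic numbers are assumptions for illustration, and current provider pricing should be checked before relying on any result.

```python
# Placeholder per-1,000-token prices in USD (the GPT-4 figures quoted above).
# Real prices differ by model and change over time.
INPUT_PRICE_PER_1K = 0.03
OUTPUT_PRICE_PER_1K = 0.06


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD of one request, billed per token."""
    input_cost = input_tokens / 1000 * INPUT_PRICE_PER_1K
    output_cost = output_tokens / 1000 * OUTPUT_PRICE_PER_1K
    return input_cost + output_cost


def monthly_cost(requests_per_day: int, input_tokens: int,
                 output_tokens: int, days: int = 30) -> float:
    """Estimated monthly cost, assuming every request has the same size."""
    return requests_per_day * days * request_cost(input_tokens, output_tokens)
```

For example, `monthly_cost(10_000, 500, 200)` models 10,000 daily requests with 500 input and 200 output tokens each, which comes to roughly $8,100 over 30 days at these placeholder prices.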

Choosing the right deployment model here offers scalability and better workload predictability. Here are the top deployment models for you to choose from.

Customizations account for most of the indirect costs with LLMs. Here are the top indirect costs involved with the LLM development process.

Customization costs may vary depending on the frequency of updates and the complexity of deployment.

The key contributors to operational costs include latency, scalability, and inference. With an increase in user demand, operational costs will need to be optimized according to business requirements. At the same time, it’s necessary to look for techniques that would reduce LLM operational costs.

LLM cloud costs tend to rise over time as workloads demand more computing power and latency increases. Here are some key factors that can inflate your operational expenses.

Companies generally overlook certain hidden costs when considering LLM cost optimization. These can significantly increase your long-term spending and impact your total cost of ownership of the LLM.

Consider the following costs to avoid surprises in the long run.

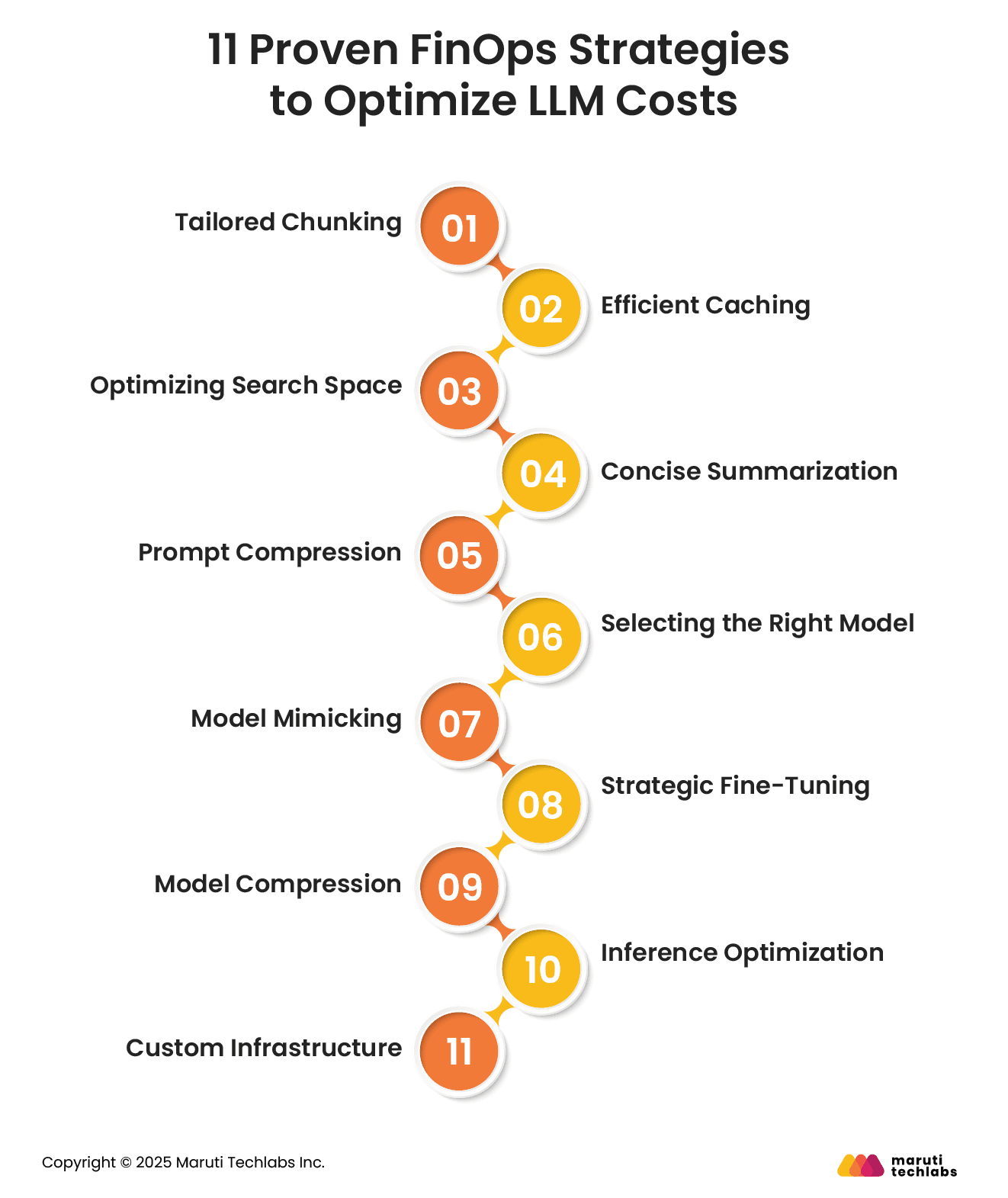

Optimizing the cost of LLMs demands a multi-faceted approach. Here are the top 11 FinOps strategies you can leverage to reduce expenses with LLMs.

LLMs process data in chunks, and the way text is chunked affects both cost and accuracy. Default chunking methods often include overlaps, so the same text is processed more than once, which adds to latency and expenses.

Here’s how one can mitigate this challenge:
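One way to see the cost of overlap is to count how many words actually get sent for processing. The sketch below is a simplified, word-based chunker (real pipelines usually count tokens with the model's tokenizer); `chunk_words`, `total_words`, and their parameters are illustrative names, not part of any specific library.

```python
def chunk_words(text: str, max_words: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words; overlap=0 means no duplication.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must satisfy 0 <= overlap < max_words")

    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this chunk has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


def total_words(chunks: list[str]) -> int:
    """Number of words that would be sent for processing across all chunks."""
    return sum(len(chunk.split()) for chunk in chunks)
```

On a 10-word text with `max_words=4`, no overlap yields 3 chunks and 10 processed words, while `overlap=2` yields 4 chunks and 16 processed words. Measuring this on your own data shows how much of the bill the overlap accounts for.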

Costs can add up unexpectedly if LLMs process repeated interactions. Semantic caching helps here by storing responses to frequent queries and retrieving them when a similar query arrives.

Let’s observe some tools and techniques that can help solve this problem.
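As a rough illustration of the idea, the sketch below returns a stored response when a new query is similar enough to a cached one. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `SemanticCache` and the `0.8` threshold are hypothetical choices for this example, not a specific product's API.

```python
import math
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries: list[tuple[Counter, str]] = []

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))

    def get(self, query: str) -> Optional[str]:
        """Return the most similar cached response, or None on a miss."""
        vector = embed(query)
        best_score, best_response = 0.0, None
        for cached_vector, response in self.entries:
            score = cosine(vector, cached_vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None
```

The application calls `get` first and only calls the LLM (followed by `put`) on a miss. The threshold trades savings against the risk of serving an answer to a question that only looks similar.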

Passing a wide range of context to LLMs without filtering for relevance can increase computational costs while decreasing accuracy. Optimizing the search space ensures only relevant information is processed.

Here are some techniques that can help you optimize your search space.
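A minimal sketch of narrowing the search space might filter documents by metadata first and then keep only the top few matches. The `tag` field, the keyword-overlap score, and the `select_context` name are assumptions for illustration; production systems typically rank with vector similarity or a reranker instead.

```python
def select_context(query: str, documents: list[dict],
                   allowed_tags: set[str], top_k: int = 2) -> list[str]:
    """Return at most top_k relevant document texts for the prompt.

    Each document is a dict with "tag" (metadata) and "text" keys.
    """
    query_terms = set(query.lower().split())

    def overlap(doc: dict) -> int:
        # Number of query words that also appear in the document.
        return len(query_terms & set(doc["text"].lower().split()))

    # Step 1: the metadata filter discards whole categories cheaply.
    candidates = [doc for doc in documents if doc["tag"] in allowed_tags]
    # Step 2: rank what remains and keep only the best matches.
    ranked = sorted(candidates, key=overlap, reverse=True)
    return [doc["text"] for doc in ranked[:top_k] if overlap(doc) > 0]
```

Only the returned texts are added to the prompt, so the token count is bounded by `top_k` rather than by the size of the document store.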

In conversational use, every turn adds tokens to the context, which directly increases costs. Summarizing chat histories retains the essential context while minimizing token usage.

Let’s observe some practices that can help this cause.
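One common pattern, sketched here under assumptions, keeps the most recent turns verbatim and collapses everything older into a single summary message. The `summarize` callable is a placeholder for whatever produces the summary (in practice usually a call to a cheaper model); `compact_history` and the message layout are illustrative.

```python
from typing import Callable


def compact_history(messages: list[dict], keep_last: int,
                    summarize: Callable[[str], str]) -> list[dict]:
    """Replace all but the last keep_last messages with one summary message.

    Each message is a dict with "role" and "content" keys.
    """
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    if len(messages) <= keep_last:
        return list(messages)  # nothing old enough to summarize

    split = len(messages) - keep_last
    older, recent = messages[:split], messages[split:]
    transcript = "\n".join(f"{m['role']}: {m['content']}" for m in older)
    summary = {
        "role": "system",
        "content": "Summary of earlier conversation: " + summarize(transcript),
    }
    return [summary] + recent
```

The summary costs tokens to produce, so this pays off mainly in long conversations where the older turns would otherwise be resent on every request.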

The introduction of prompting techniques, such as chain-of-thought (CoT) and in-context learning (ICL), has increased prompt lengths, leading to higher API costs and computational requirements.

Here is a technique to mitigate this challenge.
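To illustrate the general idea of shrinking a prompt before sending it, the sketch below applies a deliberately crude heuristic: it collapses extra whitespace and drops sentences that repeat verbatim. This is only a stand-in for learned prompt compressors, which decide what to drop based on a model's estimate of each token's importance; `compress_prompt` is a hypothetical name.

```python
import re


def compress_prompt(prompt: str) -> str:
    """Collapse whitespace and remove exact duplicate sentences."""
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", prompt.strip())
    seen = set()
    kept = []
    for sentence in sentences:
        normalized = " ".join(sentence.lower().split())
        if normalized and normalized not in seen:
            seen.add(normalized)
            kept.append(" ".join(sentence.split()))
    return " ".join(kept)
```

Even a simple pass like this can help when prompts are assembled from templates that repeat instructions, but it should be validated against output quality before being used in production.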

LLM costs depend primarily on the model you choose. Though LLMs are highly useful, they can disrupt your budgets, especially if you deploy large models where small language models (SLMs) would do.

One can achieve adequate performance while reducing computational costs by training a smaller model to mimic the outputs of a larger model.

Here’s a technique that can help you achieve this.
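The core of training a small model to mimic a large one is a loss that compares their output distributions. Below is a minimal sketch of the widely used soft-target loss (temperature-scaled KL divergence) in plain Python for a single example; a real training loop would compute this with a deep learning framework over batches, and the function names here are illustrative.

```python
import math


def log_softmax(logits: list[float], temperature: float) -> list[float]:
    """Numerically stable log-softmax of logits divided by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - peak - log_total for x in scaled]


def distillation_loss(teacher_logits: list[float],
                      student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions.

    The result is scaled by temperature squared, a common convention that
    keeps gradient magnitudes comparable across temperatures.
    """
    teacher_log_probs = log_softmax(teacher_logits, temperature)
    student_log_probs = log_softmax(student_logits, temperature)
    kl = sum(
        math.exp(t) * (t - s)
        for t, s in zip(teacher_log_probs, student_log_probs)
    )
    return temperature ** 2 * kl
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimizing it pushes the smaller model toward the larger model's behavior.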

Offering few-shot examples in prompts can be costly, especially in complex use cases. The need for these examples can be eliminated by fine-tuning models for specific tasks.

Here is a list of strategies to do this.
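A practical first step is turning the examples you currently paste into prompts into training records. The sketch below writes them in a chat-style JSONL layout, one record per line; this layout is an assumption modeled on the format several fine-tuning services accept, so check your provider's exact schema. The function names and sample data are hypothetical.

```python
import json


def to_training_records(system_prompt: str,
                        examples: list[tuple[str, str]]) -> list[dict]:
    """Turn (user_input, ideal_output) pairs into chat-style records."""
    records = []
    for user_text, assistant_text in examples:
        records.append({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        })
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)
```

Once a model is fine-tuned on such records, the same examples no longer need to travel with every request, which is where the per-call token savings come from.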

Deployment is often cumbersome because LLMs demand substantial GPU compute. Techniques like quantization decrease model size and resource consumption, making models more accessible to deploy.
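To show what quantization does at its simplest, the sketch below maps floating-point weights to 8-bit integers with a single scale factor (symmetric per-tensor quantization). It is a toy illustration in plain Python with hypothetical function names; real toolchains use more refined schemes such as per-channel scales or 4-bit formats.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map weights to integers in [-127, 127] plus one float scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer representation."""
    return [value * scale for value in quantized]
```

Storing each weight in 1 byte instead of the 4 bytes of a 32-bit float cuts weight memory by about 4x, at the price of a rounding error of at most half the scale per weight.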

These are some tools you can use for this process.

If you want to maximize throughput and minimize latency while decreasing costs, it’s essential to optimize LLM inference.

Here’s how you can do it.
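Batching is one frequently used lever: grouping requests so the GPU processes several at once raises throughput per dollar. The sketch below is a simplified greedy batcher that respects a maximum batch size and a token budget; the limits and the `make_batches` name are assumptions, and production inference servers typically implement more dynamic variants of this idea.

```python
def make_batches(token_counts: list[int], max_batch_size: int,
                 max_batch_tokens: int) -> list[list[int]]:
    """Group request indices into batches, preserving arrival order.

    token_counts[i] is the token length of request i.
    """
    batches: list[list[int]] = []
    current: list[int] = []
    current_tokens = 0
    for index, tokens in enumerate(token_counts):
        if tokens > max_batch_tokens:
            raise ValueError(f"request {index} exceeds the token budget")
        batch_full = len(current) >= max_batch_size
        over_budget = current_tokens + tokens > max_batch_tokens
        if current and (batch_full or over_budget):
            batches.append(current)  # close the current batch
            current, current_tokens = [], 0
        current.append(index)
        current_tokens += tokens
    if current:
        batches.append(current)
    return batches
```

With token counts `[100, 200, 50, 400, 300]`, a batch size of 3, and a 500-token budget, the result is `[[0, 1, 2], [3], [4]]`. Larger batches improve utilization but make early requests wait longer, so the limits need tuning against your latency targets.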

Cost optimization is a direct result of the infrastructure you choose for your LLM. Significant savings only materialize when that infrastructure is tailored to your usage patterns.

Here are some strategies to choose the right infrastructure.
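A simple break-even calculation can ground this decision. The sketch below compares a pay-per-token API with a dedicated GPU instance billed by the hour; every rate in the usage note is a made-up placeholder, and the model ignores engineering effort, storage, and whether the instance can actually serve the required volume.

```python
HOURS_PER_MONTH = 730  # approximate average number of hours in a month


def api_monthly_cost(tokens_per_month: float, price_per_1k_tokens: float) -> float:
    """Monthly cost in USD of a pay-per-token API."""
    return tokens_per_month / 1000 * price_per_1k_tokens


def self_hosted_monthly_cost(gpu_hourly_rate: float, gpu_count: int = 1,
                             hours: float = HOURS_PER_MONTH) -> float:
    """Monthly cost in USD of always-on GPU instances."""
    return gpu_hourly_rate * gpu_count * hours


def break_even_tokens(gpu_hourly_rate: float, price_per_1k_tokens: float,
                      gpu_count: int = 1,
                      hours: float = HOURS_PER_MONTH) -> float:
    """Monthly token volume at which both options cost the same."""
    if price_per_1k_tokens <= 0:
        raise ValueError("price_per_1k_tokens must be positive")
    hosted = self_hosted_monthly_cost(gpu_hourly_rate, gpu_count, hours)
    return hosted / price_per_1k_tokens * 1000
```

With a hypothetical $2.00 per GPU-hour and $0.002 per 1,000 tokens, `break_even_tokens(2.0, 0.002)` is about 730 million tokens per month. Below that volume the pay-as-you-go option is cheaper under these assumptions; above it, dedicated capacity starts to pay off.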

Here are some practical examples that showcase the implementation of cost optimization strategies for running LLMs.

As organizations embrace LLMs to drive transformative AI solutions, FinOps emerges as the cornerstone for balancing innovation with financial sustainability. By bringing visibility, accountability, and collaboration across teams, FinOps ensures Gen AI workloads remain cost-efficient without hampering experimentation.

It empowers businesses to scale LLM deployments confidently, optimizing compute, storage, and inference costs. With a strong FinOps culture, enterprises can unlock Gen AI’s full potential while adhering to their budgets.

Partner with Maruti Techlabs to accelerate your AI journey. Our expertise in Artificial Intelligence Services and Cloud Cost Optimization helps you innovate faster and smarter, without the surprise cloud bills. Connect today to future-proof your AI investments.

Cloud FinOps is a cultural and operational practice that introduces financial accountability to cloud spending. It enables cross-functional teams to collaborate on data-driven spending decisions, ensuring efficient resource utilization and cost optimization in cloud environments.

Top FinOps tools include Apptio Cloudability, Flexera, and Finout. The right software depends on your organization’s scale, multi-cloud needs, and integration requirements with existing financial and engineering workflows.

FinOps for AI focuses on optimizing the costs of AI workloads, such as training large language models and running inference, by improving visibility, resource allocation, and spend efficiency, thereby enabling innovation without uncontrolled cloud expenses.

AIOps uses AI to automate IT operations, improving performance and uptime. FinOps manages cloud financials, fostering collaboration between finance, engineering, and operations to control costs. While AIOps focuses on operations, FinOps targets cloud spend governance.

The DoD’s cloud FinOps strategy emphasizes cost transparency, governance, and optimization across its multi-cloud environments. It aims to improve financial accountability, avoid waste, and align cloud spending with mission-critical outcomes efficiently.

The three pillars of FinOps are Inform (create visibility into cloud spend), Optimize (reduce waste and improve cost-efficiency), and Operate (establish processes for continuous financial governance and accountability).