Prompt Engineering, Fine-Tuning, RAG: How to Make the Right Choice?

LLMs are undeniably powerful, but generic models rarely meet the unique needs of every business. That’s where customization becomes essential, ensuring relevance, accuracy, and performance aligned with your goals.

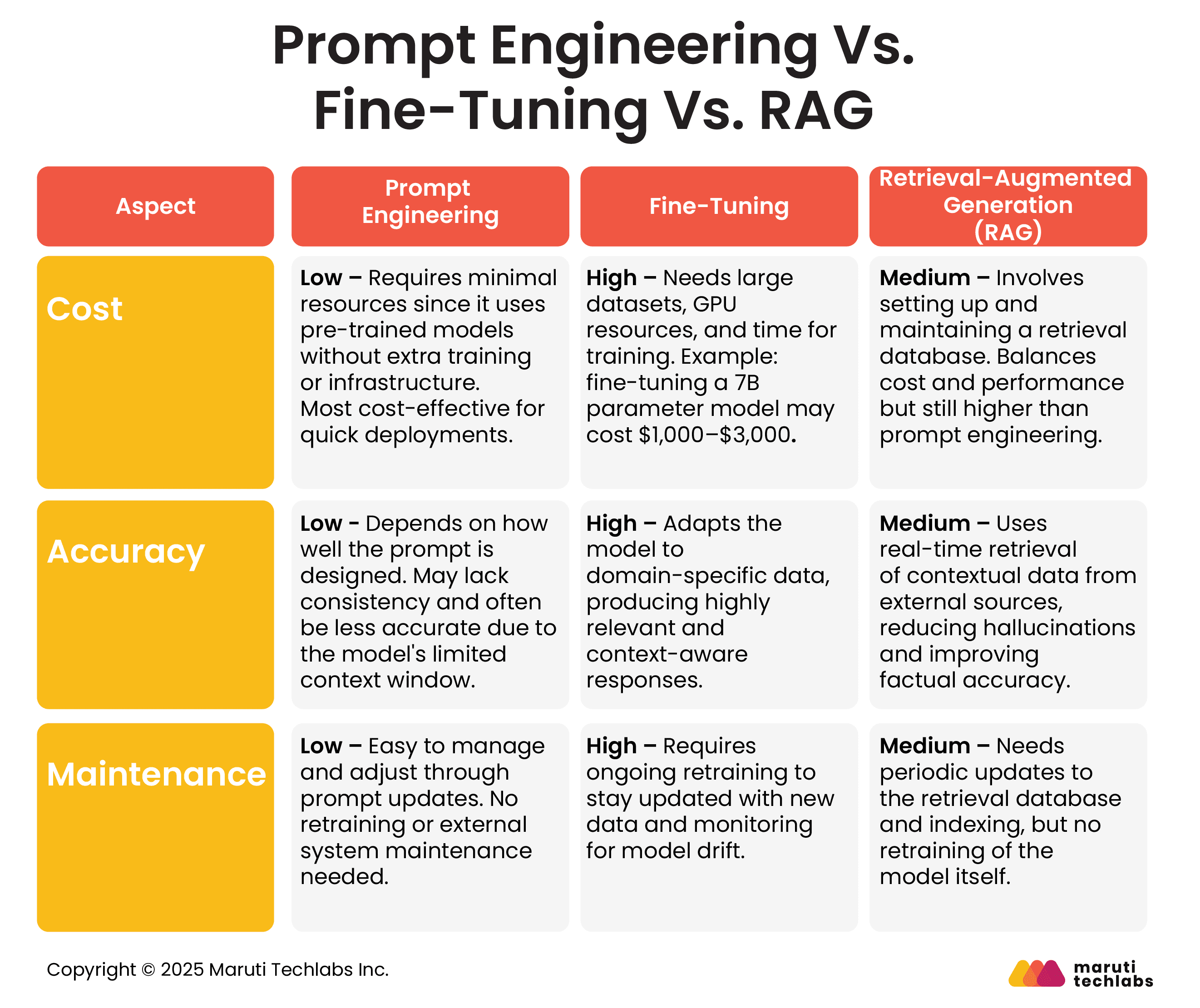

There are three primary ways to tailor an LLM: Prompt Engineering, Fine-tuning, and Retrieval-Augmented Generation (RAG). Each offers distinct trade-offs in cost, accuracy, and scalability.

A tectonic shift redefined how we work when OpenAI released ChatGPT in late 2022. It marked the dawn of a new human–machine partnership. Language became the interface, and tasks like coding, writing, and decision-making changed forever.

Today, nearly every business leverages some form of Large Language Model (LLM), yet many still struggle to unlock their full potential.

In this blog, we’ll explore these three approaches and their use cases, compare them on cost, accuracy, and upkeep, and help you choose the right path for effective, future-ready LLM customization.

Enterprises seeking to maximize the value of their LLMs can use three optimization methods: prompt engineering, fine-tuning, and retrieval-augmented generation (RAG).

All three methods can improve model behavior. Which one suits you best depends on your target use case and available resources.

Let’s get a brief overview of what these techniques have to offer.

All three approaches offer distinct advantages and trade-offs, making them suitable for various requirements and environments.

Let’s observe how all three techniques can be applied across different industries.

Across industries, these three methods support smarter operations: improving patient care in healthcare, detecting fraud in finance, and crafting dynamic marketing campaigns. Each plays a distinct role in delivering AI-led efficiency and innovation.

Let’s break down how each method compares in terms of cost, accuracy, and upkeep.

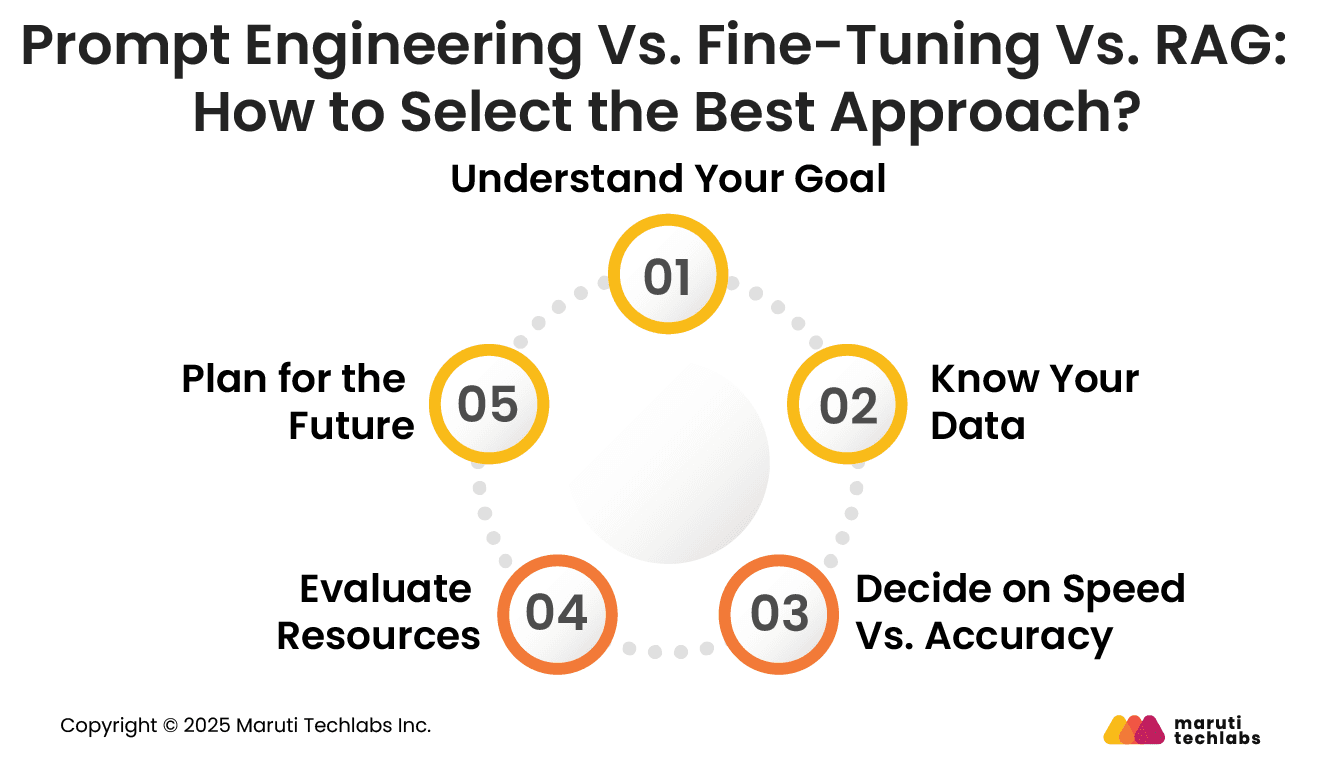

Follow these five simple steps to zero in on the approach that fits your business best.

Identify whether your focus is on accuracy, scalability, or cost efficiency. Your business objective determines which AI optimization approach is best suited for your needs.

Assess the volume, quality, and structure of your data. High-quality, domain-specific data favors fine-tuning or RAG over simple prompting.

If you need quick deployment, prompt engineering works best. For precision and context-rich outputs, RAG or fine-tuning delivers stronger results.

Consider budget, infrastructure, and technical expertise. Fine-tuning demands high computational resources and specialized skills, while RAG and prompt engineering require fewer resources.

Choose a scalable approach. RAG supports continuous data updates, fine-tuning provides long-term domain expertise, and prompt engineering remains the most flexible to adjust.
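The five considerations above can be sketched as a small rule-based helper. This is only an illustration of the reasoning, not a definitive selection method: the `Needs` fields, the `recommend` function, and the order in which the rules fire are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Needs:
    """Answers to the five considerations (field names are hypothetical)."""
    priority: str             # objective: "accuracy", "scalability", or "cost"
    has_domain_data: bool     # high-quality, domain-specific data is available
    data_changes_often: bool  # knowledge must be updated continuously
    needs_quick_launch: bool  # speed of deployment matters most
    has_ml_budget: bool       # compute and specialized skills are available


def recommend(needs: Needs) -> str:
    """Return a starting point for discussion, not a final verdict."""
    # Cost efficiency or quick deployment points to prompt engineering.
    if needs.priority == "cost" or needs.needs_quick_launch:
        return "prompt engineering"
    # Without domain-specific data, fine-tuning and RAG have little to work with.
    if not needs.has_domain_data:
        return "prompt engineering"
    # Continuously changing data favors RAG, which needs no retraining.
    if needs.data_changes_often:
        return "RAG"
    # Fine-tuning only makes sense when compute and expertise are available.
    if needs.has_ml_budget:
        return "fine-tuning"
    # Stable domain data but limited resources: RAG is the lighter option.
    return "RAG"


example = Needs(
    priority="accuracy",
    has_domain_data=True,
    data_changes_often=True,
    needs_quick_launch=False,
    has_ml_budget=True,
)
print(recommend(example))
```

In practice the answers are rarely this binary, and a combination of methods is often worth considering once the first choice is in place.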

There’s no one-size-fits-all when it comes to customizing LLMs. The right approach really depends on what you’re building, the type of data you have, and the level of control you want. Let’s look at a few real-world scenarios to see when prompt engineering, fine-tuning, or RAG makes the most sense.

In short, prompt engineering is best for quick experiments and content generation with minimal setup. Fine-tuning works when you need precision, consistency, and domain expertise. RAG is ideal for keeping outputs current and factual without retraining the model. The right choice depends on your goals, data, and the level of hands-on involvement you want with your AI.

Choosing between Prompt Engineering, Fine-Tuning, and RAG ultimately depends on your organization’s priorities, whether that’s cost-efficiency, accuracy, scalability, or adaptability.

Understanding your data maturity, business goals, and available resources is key to selecting the correct method. The right approach can significantly enhance productivity, customer experience, and decision-making through optimized language model performance.

If you’re ready to unlock the full potential of AI customization, explore how Maruti Techlabs’ Generative AI Services can help you design, implement, and scale the best-fit solution for your business.

If you’re just starting your AI journey, our AI Strategy and Readiness services can help you clarify your business requirements and design a suitable AI solution.

Partner with Maruti Techlabs today to build more innovative, adaptive, and cost-efficient AI systems.

Fine-tuning customizes a model using domain-specific data for precise outputs.

Prompt engineering optimizes how you query models for better responses without retraining.

RAG combines language models with external databases to fetch real-time, factual information, thereby improving accuracy and reducing hallucinations.
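To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-prompt flow. It makes several assumptions: the knowledge base is a hypothetical in-memory list, retrieval uses a simple word-overlap cosine score instead of the embedding search a production system would typically use, and the final call to a language model is left out. All function names are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place the retrieved passages in the prompt so the model can ground its answer."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base, standing in for an external database.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support.",
]

prompt = build_rag_prompt("How long do refunds take?", KNOWLEDGE_BASE, k=1)
print(prompt)
```

Because the facts live in the knowledge base and not in the model weights, updating an entry in `KNOWLEDGE_BASE` changes the answer without any retraining.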

Prompt engineering is faster, cheaper, and requires no retraining. It enables businesses to achieve beneficial results using existing models with minimal data or technical effort, making it perfect for experimentation or quick deployments.

Fine-tuning, by contrast, requires more computational resources, specialized datasets, and time to achieve domain-specific performance.
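As a rough illustration of how little setup prompt engineering needs, the sketch below assembles a few-shot prompt from an instruction and a handful of examples. The function name, the `Input:`/`Output:` layout, and the sample reviews are assumptions made for this example; the resulting string would be sent to whichever existing model you already use.

```python
def build_few_shot_prompt(
    instruction: str,
    examples: list[tuple[str, str]],
    query: str,
) -> str:
    """Combine an instruction, worked examples, and the new input into one prompt."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final entry is left open for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical examples for a sentiment-classification task.
few_shot_prompt = build_few_shot_prompt(
    instruction="Classify each customer review as positive or negative.",
    examples=[
        ("The onboarding was smooth and fast.", "positive"),
        ("The app crashes every time I log in.", "negative"),
    ],
    query="Support resolved my issue within minutes.",
)
print(few_shot_prompt)
```

Changing the model's behavior here means editing the instruction or swapping examples, which is why iteration is fast compared with preparing a training dataset.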

Yes. Combining RAG and fine-tuning often delivers optimal results. Fine-tuning tailors the model’s internal knowledge to specific domains, while RAG supplements it with up-to-date, external data.

Together, they enhance contextual understanding, accuracy, and factual relevance, particularly in industries that require frequent updates to information, such as healthcare or finance.

Choose based on goals, data availability, and resources. Use prompt engineering for speed and cost efficiency, fine-tuning for accuracy in specialized domains, and RAG for real-time, factual outputs.

Assess your infrastructure, technical expertise, and scalability requirements to select the most balanced and sustainable approach for your business.