LLM Deployment: A Simple Guide to Cloud vs. On-Premises

Generative AI and large language models (LLMs) have quickly become a focus for businesses across industries. A recent Bain study shows that 87% of organizations have already deployed or are testing generative AI, with adoption skyrocketing. Yet, many companies still struggle to decide how to deploy their models in a way that balances performance, security, and cost.

Choosing the right LLM deployment model is not always simple. Businesses generally have two options: run models on-premise or in the cloud. On-premise deployments give you complete control over your infrastructure and data, which is helpful for organizations with strict security or compliance needs.

Cloud deployments make it easier to get started, scale quickly, and reduce day-to-day maintenance, but they can raise concerns about data privacy and long-term costs.

The best approach depends on your business goals, the sensitivity of your data, and the resources you can dedicate to managing AI systems. Picking the right strategy can make the difference between an AI project that delivers value and one that never meets expectations.

This blog covers why deployment choice matters for LLMs, how on-premises and cloud deployment each offer advantages and face limitations, five critical factors for LLM deployment strategies, and a detailed comparison of on-premise vs cloud.

AI has become a key driver of innovation and efficiency across almost every industry. Companies are investing heavily in AI infrastructure to keep up with this rapid adoption. According to IDC, AI infrastructure spending reached $47.4 billion in the first half of 2024, nearly doubling year over year, and is expected to surpass $200 billion by 2028.

The U.S. alone will account for 59% of this investment. While the tech industry led this movement at first, sectors like energy, manufacturing, logistics, and finance are now seeing wide applications for generative AI, large language models (LLMs), and intelligent agent platforms.

As businesses integrate AI into core operations, one of the most critical decisions they face is how to deploy their LLMs. The choice between on-premise and cloud deployment models has a direct impact on cost, security, scalability, and long-term return on investment.

On-premise deployment gives organizations complete control over their infrastructure and data, making it attractive for companies with sensitive data or strict compliance needs. However, it requires significant upfront investment and ongoing maintenance. Cloud deployment, on the other hand, offers faster setup, easy scaling, and less operational overhead, but may raise concerns about data privacy and long-term costs.

Understanding these trade-offs is essential for enterprise leaders. The right deployment model ensures that AI investments translate into real value, aligning infrastructure choices with business goals and maximizing the impact of LLMs across the organization.

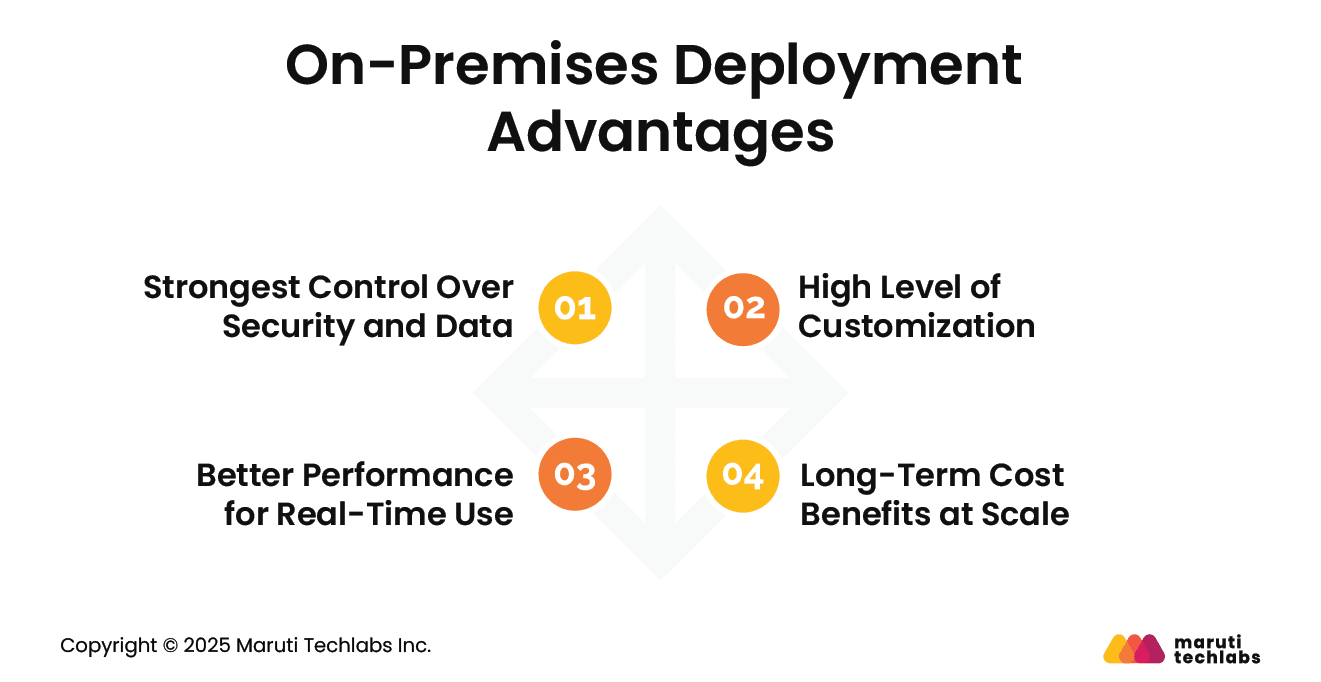

For some enterprises, deploying LLMs on-premises can offer powerful benefits, especially when security, control, and long-term cost are top priorities. Let’s look at where this model stands out:

With on-premises deployment, all your data stays within your environment. This makes it easier to protect sensitive information, avoid third-party data breaches, and ensure your proprietary data isn’t used to train public LLMs. It gives you complete control over access, storage, and compliance.

On-premise setups let you customize AI models to match how your business works. Training smaller models with your own data enables them to provide more accurate results, particularly in tasks such as answering questions from internal documents or automating processes.

Since everything runs locally, you’re not dependent on internet speeds or external servers. This setup often results in lower latency and faster response times, which is especially helpful for real-time, high-stakes applications.

Although setting up on-premises systems incurs higher initial costs, it can ultimately save money, particularly for large companies. When you’re working with big datasets, training models, or serving many users, handling everything in-house can be more cost-effective.

Additionally, there can be tax advantages, such as the ability to depreciate hardware and spread its cost over several years, which helps ease the overall financial impact.
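The break-even reasoning above can be made concrete with a rough calculation. The sketch below is illustrative only: the function name `break_even_month` and all dollar figures are hypothetical assumptions, not data from this article, and a real comparison would also account for items such as staffing, power, and hardware refresh cycles.

```python
import math


def break_even_month(onprem_upfront, onprem_monthly, cloud_monthly):
    """Return the first month in which cumulative on-premises cost is at or
    below cumulative cloud cost, or None if on-premises never catches up."""
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        # On-premises running costs are not lower, so the upfront
        # investment is never recovered.
        return None
    return math.ceil(onprem_upfront / monthly_saving)


# Hypothetical figures, for illustration only.
months = break_even_month(
    onprem_upfront=600_000,  # assumed hardware and setup cost
    onprem_monthly=15_000,   # assumed power, staff, and maintenance
    cloud_monthly=40_000,    # assumed cloud usage fees
)
print(months)  # 24
```

With these assumed numbers, on-premises spending catches up with cloud spending after 24 months. A shorter planning horizon than that would favor the cloud in this simplified model.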

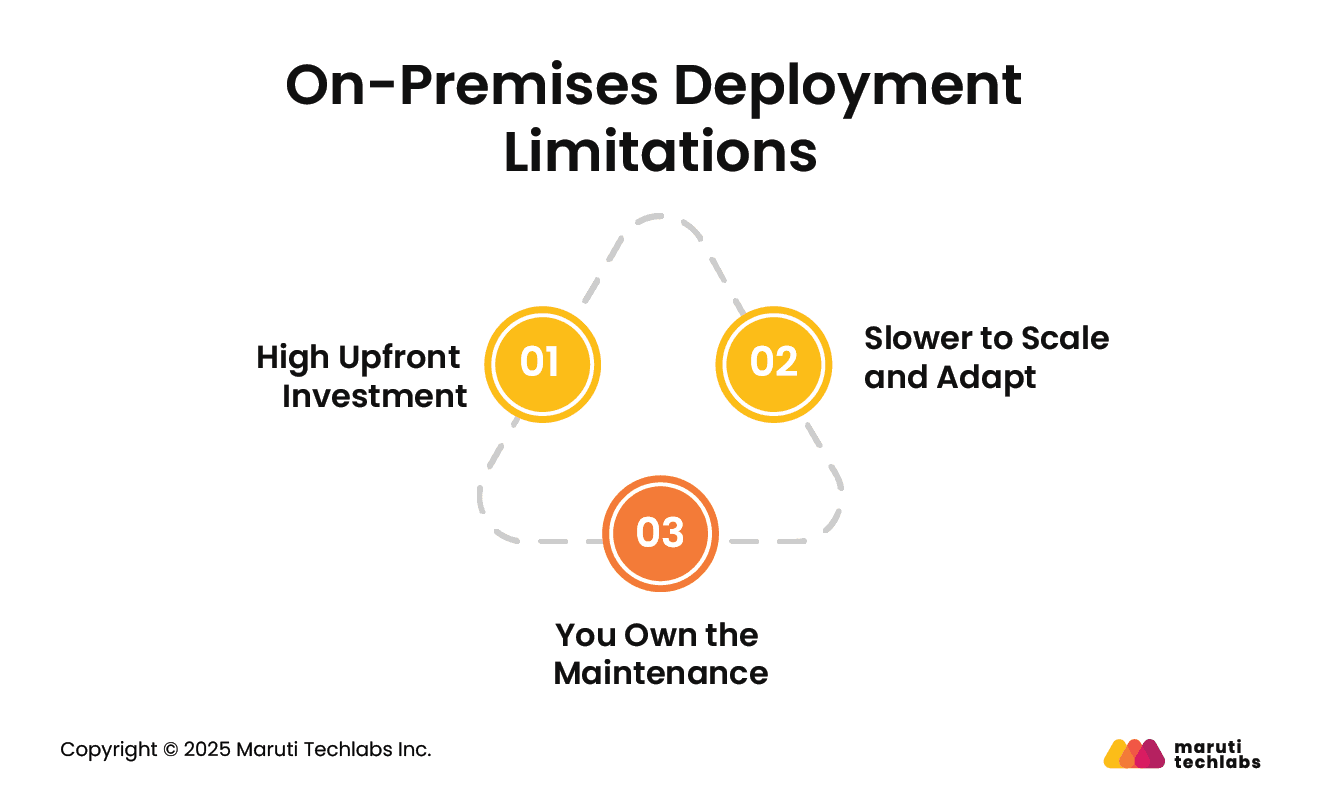

While on-premise deployment offers strong control, better performance, and long-term savings for some businesses, it also comes with a few challenges to keep in mind:

Setting up an on-premises environment requires significant capital for hardware, software, and skilled teams, especially if you plan to build or train your own models.

Unlike in the cloud, scaling up on-premises infrastructure takes time, planning, and more investment in physical resources.

Security updates, system patches, and ongoing infrastructure upkeep are entirely your responsibility. This adds complexity and operational burden.

Partnering with a vendor that offers pre-built tools and support for on-prem deployment can help reduce cost, time, and risk. While on-premises isn't for everyone, it can be the right fit for businesses that need full control, have strict data requirements, and operate at a scale where long-term savings matter.

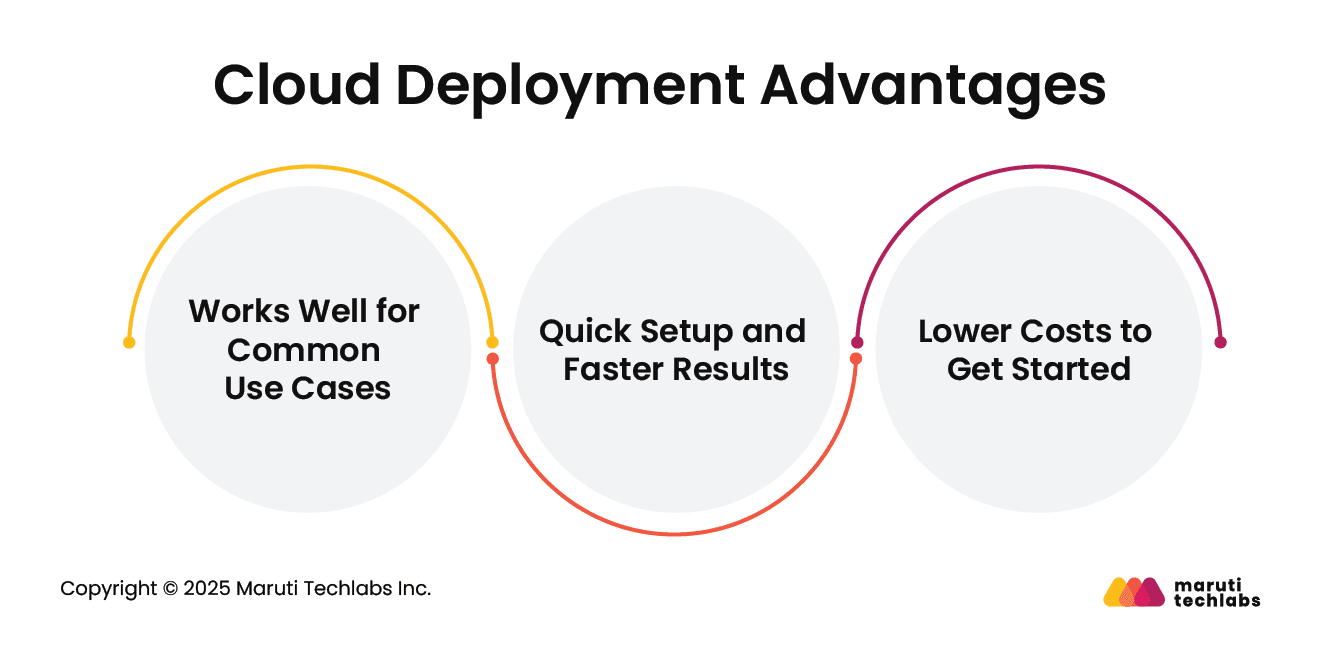

Cloud-based deployment has become a popular choice for businesses looking to quickly get started with AI and avoid heavy upfront investments. Let’s look at what makes this approach appealing:

1. Works Well for Common Use Cases: If your business needs align with how cloud solutions are designed to work, you can achieve strong results right out of the box. These systems are built to handle general workflows and offer a good user experience from the start.

2. Quick Setup and Faster Results: Cloud LLMs are usually ready to use, so your team can start experimenting and rolling out features in less time. Since they follow standard interfaces and tools, your team likely won’t face a steep learning curve.

3. Lower Costs to Get Started: Cloud deployments are ideal if you don’t need to build or train your own model. You save time and money by skipping custom development, training, and infrastructure setup.

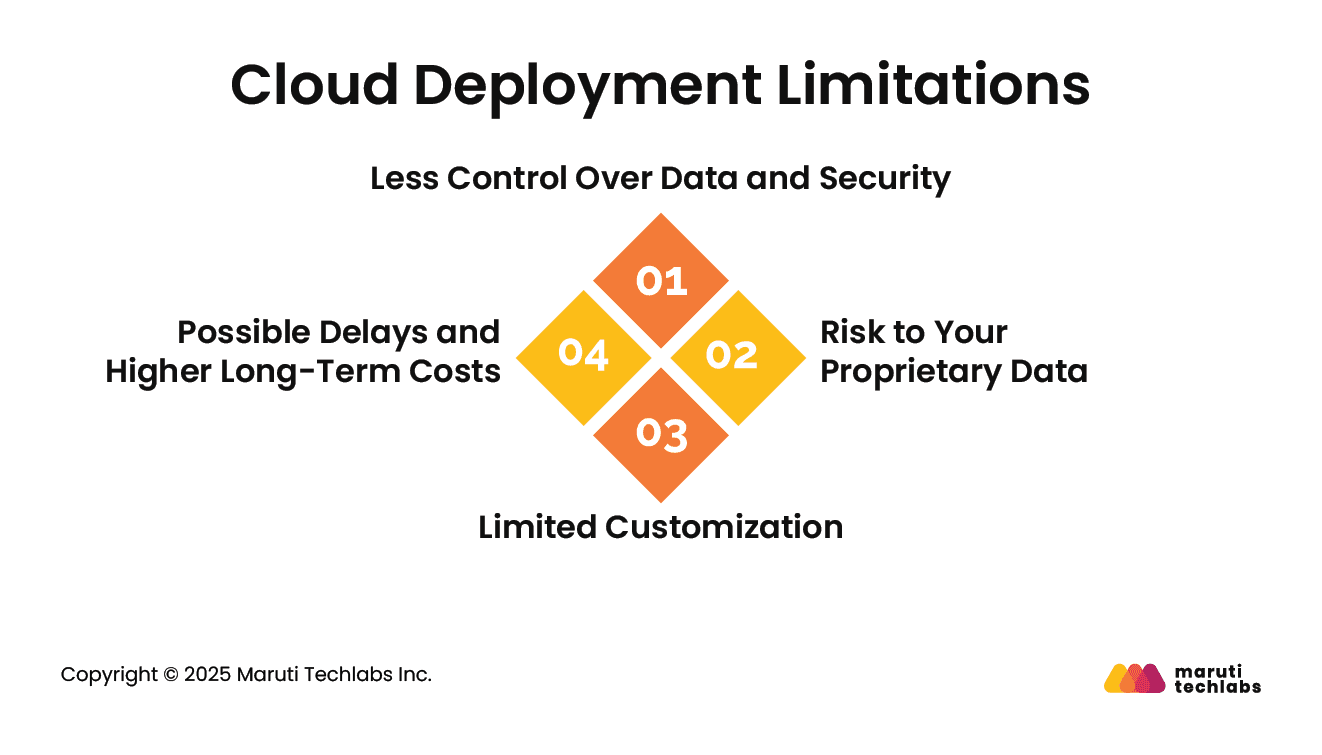

While cloud solutions offer speed and flexibility, they also come with a few important trade-offs to consider:

1. Less Control Over Data and Security: Because the data resides on third-party servers, direct control over its storage and protection is limited. It is essential to rely on the vendor’s safeguards and verify that they comply with rigorous security standards.

2. Risk to Your Proprietary Data: Organizations, especially those with valuable intellectual property, worry that uploading sensitive information to a commercial LLM could expose or compromise that data.

3. Limited Customization: Off-the-shelf solutions may not fully match your specific needs. Accuracy can suffer, and you might not be able to tweak the system the way you want.

4. Possible Delays and Higher Long-Term Costs: Depending on usage and provider, latency can affect performance. Additionally, ongoing usage fees, API calls, and operational costs can accumulate over time, sometimes exceeding expectations.
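To see how usage fees in the last point can accumulate, a rough projection helps. The sketch below assumes a simple per-token pricing model; the function name `projected_api_cost`, the price, and the traffic figures are hypothetical placeholders rather than any provider's actual rates.

```python
def projected_api_cost(requests_per_month, tokens_per_request,
                       price_per_1k_tokens, monthly_growth, months):
    """Cumulative API spend over `months`, with request volume
    compounding by `monthly_growth` (0.10 means 10% per month)."""
    total = 0.0
    volume = requests_per_month
    for _ in range(months):
        total += volume * tokens_per_request / 1000 * price_per_1k_tokens
        volume *= 1 + monthly_growth
    return total


# Hypothetical workload and price, for illustration only.
flat = projected_api_cost(200_000, 1_500, 0.01, monthly_growth=0.0, months=24)
growing = projected_api_cost(200_000, 1_500, 0.01, monthly_growth=0.10, months=24)
print(f"flat usage:    ${flat:,.0f}")
print(f"growing usage: ${growing:,.0f}")
```

Comparing the flat and growing scenarios shows why a bill that looks modest in the first month can exceed expectations once adoption spreads across teams.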

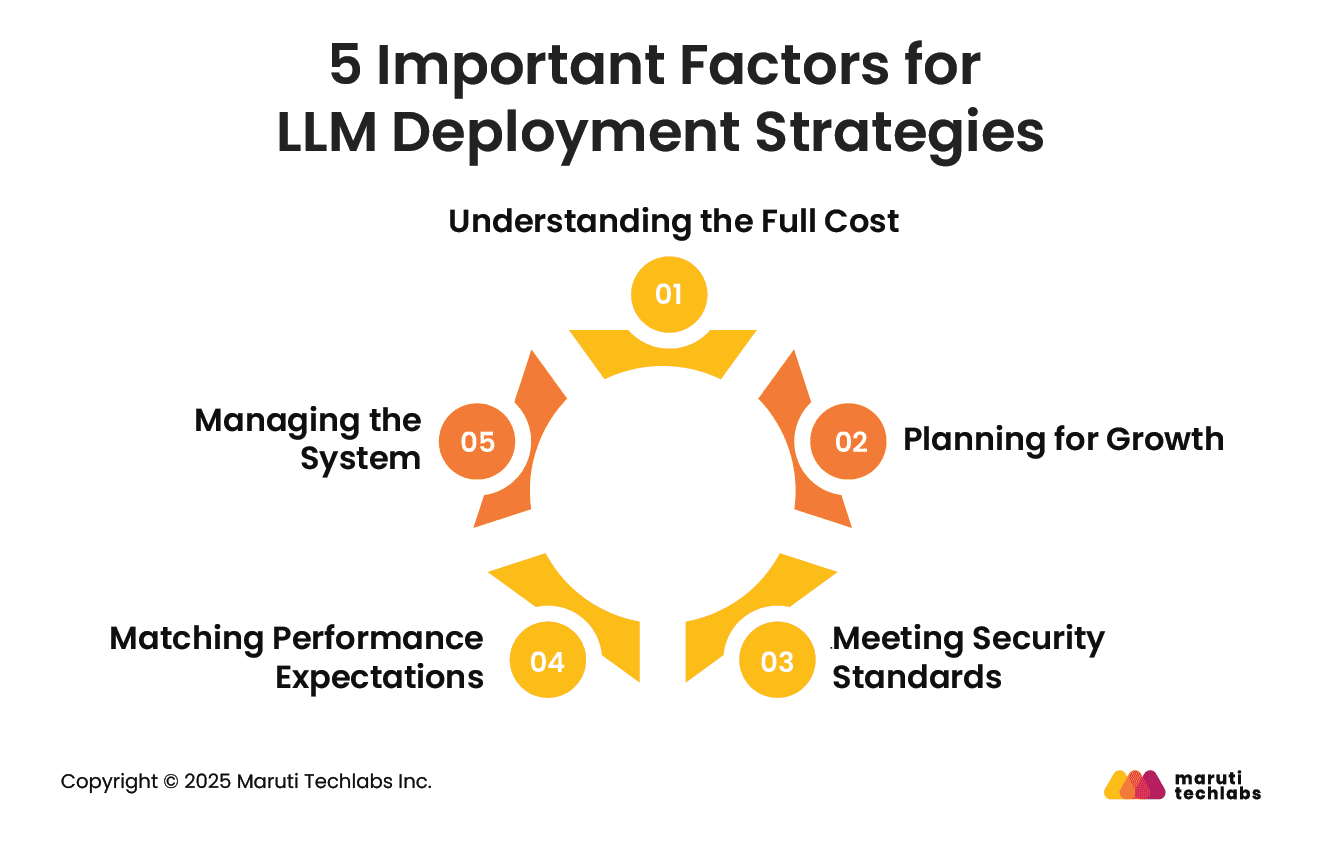

Before choosing between on-premise and cloud for LLM deployment, it’s essential to look at a few key areas that directly affect long-term success.

Look beyond just the initial setup. Consider hardware, software, developer time, ongoing usage, and long-term operating costs. This helps you determine which option offers the best financial advantage for your business.

Think about how much your usage might grow over time. If you anticipate rapid growth or fluctuating demand, a cloud setup can provide the flexibility to scale up or down as needed.
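One way to gauge how much fluctuating demand matters is to check how busy fixed hardware would be if it were sized for the peak. The sketch below is a simplified illustration; the function name `fixed_capacity_utilization` and the demand figures are hypothetical.

```python
def fixed_capacity_utilization(hourly_demand):
    """Average utilization when capacity is provisioned for peak demand.

    `hourly_demand` is a list of request rates (any consistent unit).
    A result of 0.4 means the hardware sits idle 60% of the time on average.
    """
    peak = max(hourly_demand)
    if peak <= 0:
        raise ValueError("demand must contain at least one positive value")
    return sum(hourly_demand) / (len(hourly_demand) * peak)


# Hypothetical demand profile with a sharp midday spike.
demand = [20, 20, 40, 100, 40, 20]
print(f"{fixed_capacity_utilization(demand):.0%}")  # 40%
```

Low utilization under this kind of profile suggests that elastic cloud capacity may fit better, while steady, high utilization tends to favor fixed on-premises hardware.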

Every business has different security needs. If you're working with sensitive or regulated data, you’ll need to be extra careful about where and how that data is stored. This could influence your choice of deployment.

Some use cases need real-time speed and very low delay. If that’s the case, on-premise might be a better option since it offers more consistent performance without relying on internet connections.
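Consistency is usually judged by tail latency rather than the average, so it can help to compare percentiles from a small benchmark of each option. The sketch below uses the nearest-rank method; the helper name `percentile` and the sample values are hypothetical and stand in for measurements you would collect yourself.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of `samples` for an integer `pct` in 1..100."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in milliseconds, for illustration only.
local_ms = [42, 45, 44, 47, 43, 46, 44, 45, 48, 43]
remote_ms = [60, 65, 62, 180, 61, 64, 63, 240, 66, 62]

for name, samples in (("local", local_ms), ("remote", remote_ms)):
    print(name, "p50:", percentile(samples, 50), "p95:", percentile(samples, 95))
```

A large gap between the median and the 95th percentile points to inconsistent response times, which matters more for real-time applications than the average alone.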

Consider who will maintain the system. If the team lacks in-house expertise, cloud solutions may be easier to manage, while on-premises options often require more hands-on upkeep.
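The factors above can be combined into a simple weighted decision matrix. The sketch below is one possible way to structure that comparison, not a prescribed method: the factor names, weights, and scores are all hypothetical and should be replaced with your own assessment.

```python
FACTORS = (
    "total_cost",
    "scalability",
    "security_compliance",
    "latency",
    "in_house_expertise",
)


def weighted_score(scores, weights):
    """Weighted average of per-factor scores (for example on a 1-5 scale)."""
    total_weight = sum(weights[f] for f in FACTORS)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[f] * weights[f] for f in FACTORS) / total_weight


# Hypothetical weights: how much each factor matters to this business.
weights = {"total_cost": 3, "scalability": 2, "security_compliance": 5,
           "latency": 4, "in_house_expertise": 1}

# Hypothetical scores: how well each option serves each factor (1-5).
cloud = {"total_cost": 4, "scalability": 5, "security_compliance": 3,
         "latency": 3, "in_house_expertise": 5}
on_prem = {"total_cost": 3, "scalability": 2, "security_compliance": 5,
           "latency": 5, "in_house_expertise": 2}

print("cloud:  ", round(weighted_score(cloud, weights), 2))
print("on-prem:", round(weighted_score(on_prem, weights), 2))
```

The value of the exercise lies less in the final number than in forcing an explicit discussion of how much each factor matters to the business.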

Choosing between cloud and on-premises deployment for large language models (LLMs) is a critical decision that impacts cost, scalability, security, and performance. Each option offers unique advantages and trade-offs that suit different business priorities. Here is a detailed comparison of cloud vs. on-premises LLM deployment.

Both cloud and on-premises deployments have clear advantages, and the “right” choice depends on whether your business values flexibility and speed over control and security, or vice versa. For organizations prioritizing rapid scaling and lower initial costs, the cloud is often the better fit. However, if your business demands maximum control, tight compliance, or the lowest possible latency, on-premises deployment could be the optimal route.

As a company experienced in cloud application development in Chicago, Maruti Techlabs offers end-to-end support to help you assess, plan, and implement the most suitable deployment strategy for your needs.

If you’re exploring Generative AI and are unsure about the right deployment strategy (cloud, on-premises, or hybrid), Maruti Techlabs can help. Explore our Generative AI services or get in touch with our team to discuss what works best for your use case.


Cloud deployment uses remote servers managed by third-party providers, offering flexibility and scalability. On-premises means software and data are hosted locally within an organization’s infrastructure. Cloud reduces operational effort, while on-premises provides greater control, especially for data-sensitive industries.

Choose cloud if you need flexibility, quick updates, and reduced IT overhead. Opt for on-premise if your operations involve highly sensitive data, strict internal controls, or regulatory environments requiring full infrastructure ownership. Your choice should align with compliance needs, IT readiness, and long-term maintenance capabilities.

Cloud-based LLM deployment typically involves lower upfront costs and pay-as-you-go pricing. On-premises deployment requires significant initial investment in infrastructure and ongoing maintenance. Over time, cloud may offer better cost-efficiency for scaling, while on-premises may suit companies needing tight system control or long-term stability.

Cloud-based LLMs offer faster setup, easier scaling, and lower infrastructure demands. They enable real-time updates, collaboration, and access to powerful resources without heavy IT support. This makes them ideal for businesses needing quick deployments, continuous improvements, and minimal operational complexity.

On-premises deployment offers greater control over data and security, making it ideal for sensitive or regulated environments. However, cloud providers also offer strong, often enterprise-grade security. The most secure model depends on your organization’s needs, internal policies, and ability to manage security risks effectively.