How to Reduce LLM Costs: Top 6 Cost Optimization Strategies

In recent years, industries from finance to healthcare have raced to integrate large language models (LLMs). These models have transformed how we analyze data, automate workflows, and power intelligent interfaces.

But as adoption surges, so do computational and infrastructure costs, turning promising prototypes into budget headaches. To make LLMs viable at scale, cost optimization isn’t just a nice-to-have: it’s essential.

In this article, we’ll explore the major cost drivers of LLMs, the top cost optimization strategies, three tools to use, and real-world examples of how teams can control their LLM expenses.

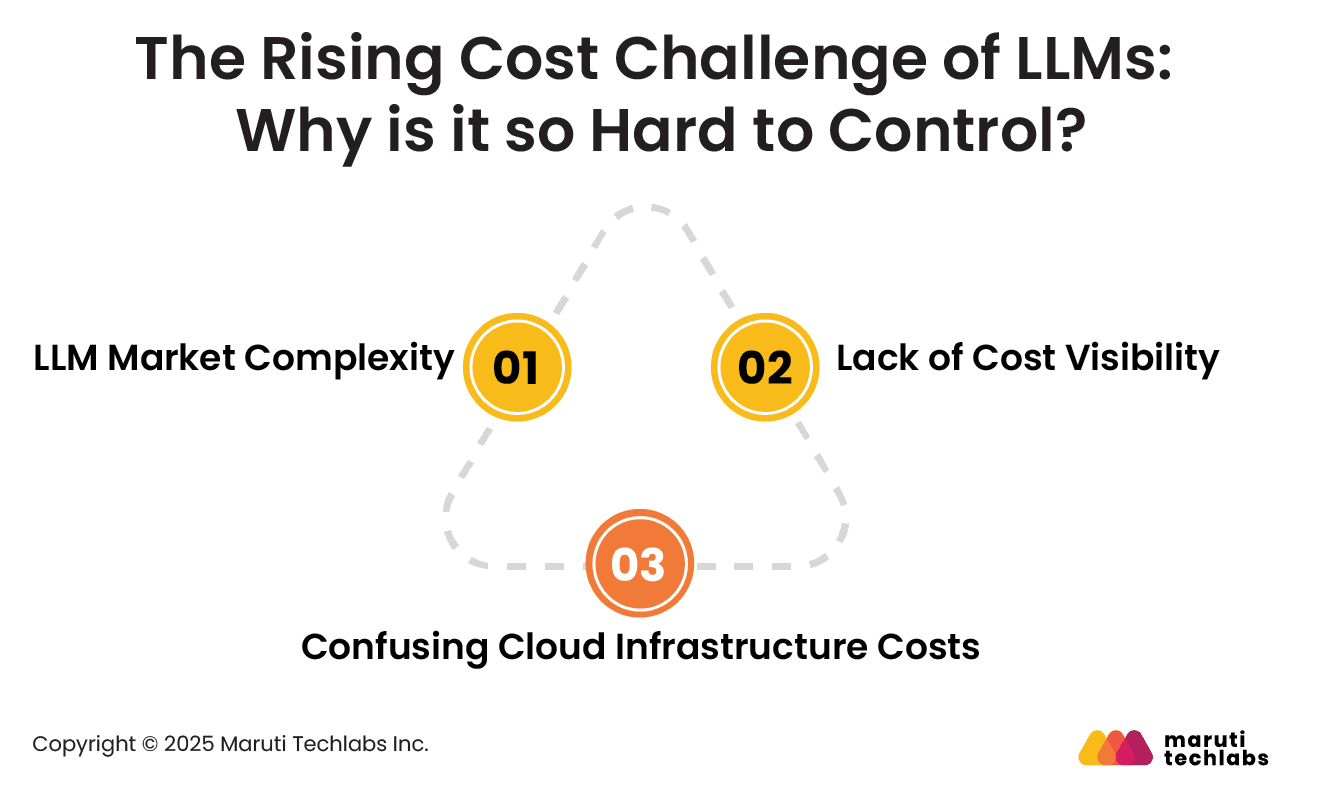

Managing LLM expenses has become a growing concern for many organizations today. Here are some prominent reasons why LLM costs are difficult to predict and control.

Companies often need several LLM providers because a single provider may not suit all their use cases. As a result, they end up investing in multiple expensive, resource-intensive models.

At times, organizations fail to explore other options and miss out on more cost-effective solutions. This leads to unnecessary expense and resource use, because general-purpose models aren’t optimized for the organization’s niche tasks.

Teams like DevOps or MLOps that develop and maintain Gen AI solutions often don’t have the tools to monitor and manage LLM costs.

They have the skills to deploy and maintain AI models. However, they don’t have the means to obtain real-time insights into data usage or compute resources.

This makes expense tracking difficult and causes budget overruns. Without a clear understanding of LLM usage patterns, teams cannot implement cost-saving measures or identify areas for optimization.

There are hundreds of compute instances to choose from, particularly when operating GenAI workloads on Kubernetes. This breadth of options, each with different pricing, performance, and configuration, adds to the complexity of decision-making.

Without proper guidance and automation tools, teams can struggle to find the right instances for their niche workloads. This often results in a compromised choice that adds to expenses without delivering the desired performance.

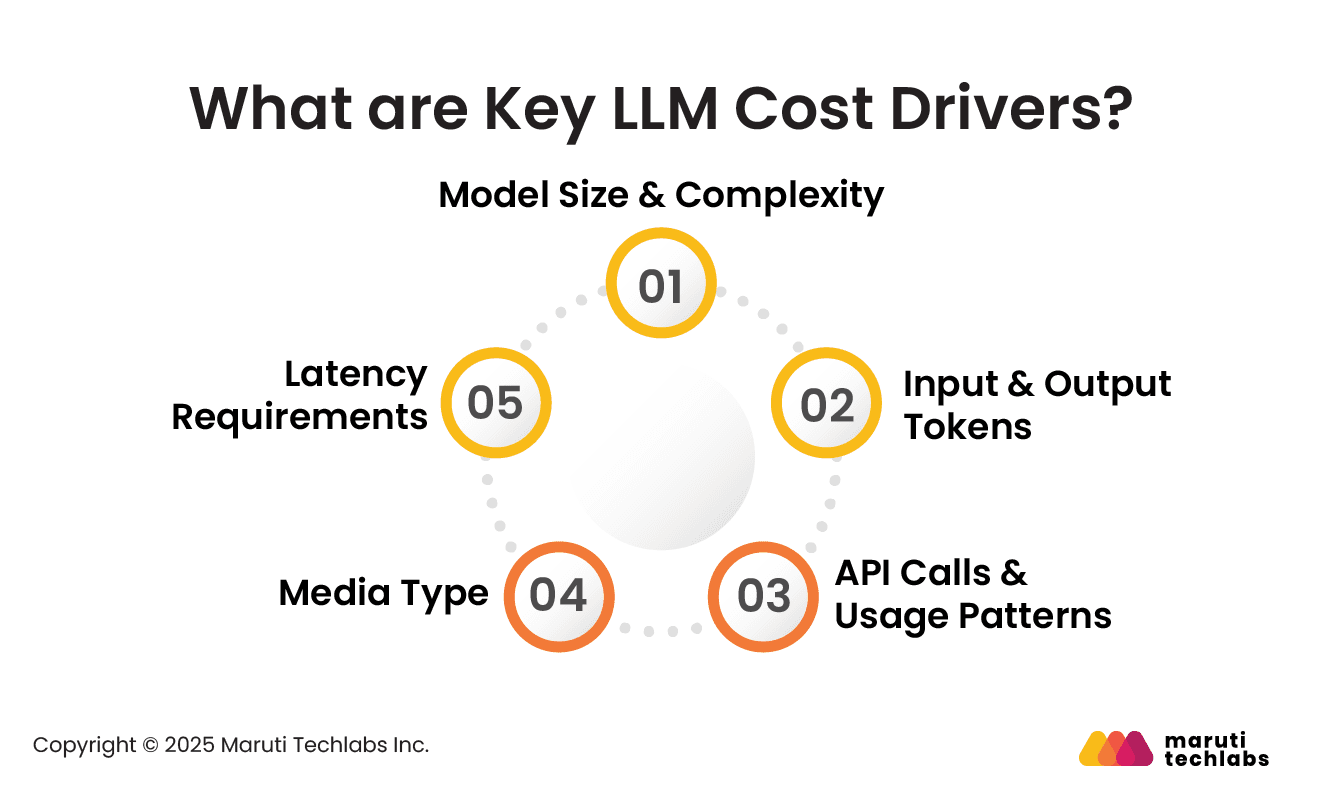

Understanding what drives these costs is the first step toward managing them effectively. Let’s explore the key contributors to LLM costs.

The number of parameters, which largely determines the size of an LLM, is a significant cost driver. Larger, more capable models also require more training and inference resources, which in turn adds to costs.

LLM providers, including OpenAI, charge based on the number of processed tokens. Tokens are the chunks of text a model processes; each may be a few characters or an entire word. Costs are incurred both for the text sent to the model (input) and for the text generated by the model (output).

Understanding this pricing is critical because your costs depend directly on how you structure prompts and manage model output.
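To make the input/output split concrete, here is a minimal sketch of a per-request and monthly cost estimate. The rates, token counts, and request volume are made-up placeholders, not any provider's actual prices, and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical per-1,000-token rates in USD (not real provider prices)."""
    input_per_1k: float
    output_per_1k: float


def request_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request: input and output tokens are billed separately."""
    input_cost = input_tokens / 1000 * pricing.input_per_1k
    output_cost = output_tokens / 1000 * pricing.output_per_1k
    return input_cost + output_cost


def monthly_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int,
                 requests_per_day: int, days: int = 30) -> float:
    """Projected monthly spend, assuming every request has the same token counts."""
    return request_cost(pricing, input_tokens, output_tokens) * requests_per_day * days


# Assumed example values for illustration only.
pricing = TokenPricing(input_per_1k=0.001, output_per_1k=0.002)

# A verbose prompt versus a trimmed prompt at 10,000 requests per day.
verbose = monthly_cost(pricing, input_tokens=2000, output_tokens=500, requests_per_day=10_000)
trimmed = monthly_cost(pricing, input_tokens=800, output_tokens=200, requests_per_day=10_000)

print(f"verbose prompts: ${verbose:,.2f} per month")
print(f"trimmed prompts: ${trimmed:,.2f} per month")
```

Even with these toy numbers, the comparison shows why prompt length and output limits matter: the same traffic costs far less when each request carries fewer tokens.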

The volume and frequency of API calls to LLM services directly affect costs. Here are a few factors to consider:

If usage patterns are not adequately monitored, they can lead to unexpected cost spikes.

LLM costs are influenced by the type of media being processed, such as text, audio, or video. If the LLM has to process audio and video at scale, costs will increase, mainly because these inputs are inherently larger and more complex than text.

The overall cost also depends on how quickly you need your LLM to respond to prompts. Models with low-latency requirements are more expensive to run because they need more computational power and optimized infrastructure.

The company you partner with to develop your LLM can help you in the process, particularly with defining the latency requirements.

As LLMs become an integral part of the AI ecosystem, managing their overall costs has become increasingly important.

Here are six strategies that can help keep these expenses in check.

Model selection is the primary lever for cost savings. While it is tempting to default to large models such as GPT-4 and Claude, their resource requirements and latency can make them too costly for many tasks.

Here are a few tips to select the right model.

LLM providers charge based on token usage. Here are some suggestions concerning token optimization.

Model refinement techniques such as distillation compress large models into smaller ones that approximate their performance. In addition, fine-tuning adapts a base model to perform niche tasks. Here are some tips to implement these techniques.

The right deployment and infrastructure strategies can introduce stability and long-term benefits. Here are some key tactics to implement.

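Retrieval-augmented generation (RAG) improves cost efficiency by retrieving only the context relevant to each query, rather than sending entire documents to the model, which keeps prompts small. Let’s explore some essential practices for deploying RAG and hybrid architectures.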
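As a rough illustration of how retrieval limits context size, the sketch below ranks text chunks against a query and sends only the top matches to the model. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and the chunks, query, and function names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_chunks(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


# Hypothetical knowledge-base chunks.
chunks = [
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Shipping takes 3 to 5 business days within the country.",
    "Return requests must include the original order number.",
]

query = "how do refunds work for a return"
context = top_k_chunks(query, chunks, k=2)

# Only the retrieved chunks go into the prompt, not the whole knowledge base.
prompt = "Answer using only this context:\n" + "\n".join(context) + "\n\nQuestion: " + query
print(prompt)
```

In a production setup, the embeddings would come from an embedding model and be stored in a vector index, but the cost logic is the same: fewer chunks in the prompt means fewer input tokens billed per request.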

Automation plays a vital role in infrastructure and workflow management. It ensures cost optimization by monitoring and controlling expenses.

Here are some essential practices to follow.

To manage growing LLM expenses, organizations are turning to specialized tools and platforms. Let’s explore 3 tools that help with LLM cost control.

Platforms such as Helicone offer real-time insights into LLM spending, helping teams quickly spot cost surges and find opportunities to optimize usage.

They track token consumption, highlight high-cost queries, and send automated alerts when budgets are exceeded.
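The core idea behind this kind of tracking can be sketched in a few lines. The example below is not Helicone's API; it is a hypothetical in-memory tracker, with an assumed flat per-token rate, that records spend per team and signals when a budget is exceeded.

```python
from collections import defaultdict


class BudgetTracker:
    """Hypothetical helper: tracks LLM spend per team against a fixed budget."""

    def __init__(self, budget_usd: float):
        self.budget_usd = budget_usd
        self.spend = defaultdict(float)  # team name -> accumulated USD

    def record(self, team: str, tokens: int, usd_per_1k_tokens: float) -> bool:
        """Add one request's cost; return True if the team is now over budget."""
        self.spend[team] += tokens / 1000 * usd_per_1k_tokens
        return self.spend[team] > self.budget_usd

    def top_spenders(self, n: int = 3) -> list[tuple[str, float]]:
        """Teams sorted by spend, highest first."""
        return sorted(self.spend.items(), key=lambda kv: kv[1], reverse=True)[:n]


# Assumed values for illustration: a $10 budget and $1 per 1,000 tokens.
tracker = BudgetTracker(budget_usd=10.0)
alerts = [
    tracker.record("search", tokens=4000, usd_per_1k_tokens=1.0),
    tracker.record("support", tokens=2000, usd_per_1k_tokens=1.0),
    tracker.record("search", tokens=7000, usd_per_1k_tokens=1.0),
]

print(alerts)                  # whether each call pushed its team over budget
print(tracker.top_spenders())  # highest-spending teams first
```

A dedicated platform adds persistence, dashboards, and alert delivery on top, but attributing each request's cost to an owner is the step that makes high-cost queries visible.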

Amazon Bedrock offers flexible, cost-efficient ways to use large language models. On-demand pricing charges per 1,000 tokens, which is ideal for variable workloads and ad-hoc inference. Provisioned throughput ensures consistent performance, with discounted rates for one-month or six-month commitments.

Bedrock also lets you leverage multiple providers without heavy infrastructure, helping control expenses on storage, data transfer, and model customization.
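One way to reason about the choice between on-demand and provisioned pricing is a simple break-even calculation. The sketch below uses made-up rates and treats provisioned throughput as a flat monthly fee with enough capacity for the workload, which is a simplifying assumption and not how any specific provider bills.

```python
def on_demand_monthly(tokens_per_month: int, usd_per_1k_tokens: float) -> float:
    """Monthly on-demand cost at a flat per-1,000-token rate."""
    return tokens_per_month / 1000 * usd_per_1k_tokens


def break_even_tokens(provisioned_usd_per_month: float, usd_per_1k_tokens: float) -> float:
    """Monthly token volume at which on-demand cost equals the provisioned fee."""
    return provisioned_usd_per_month / usd_per_1k_tokens * 1000


def cheaper_option(tokens_per_month: int, usd_per_1k_tokens: float,
                   provisioned_usd_per_month: float) -> str:
    """Pick the cheaper pricing mode for a given monthly volume."""
    if on_demand_monthly(tokens_per_month, usd_per_1k_tokens) <= provisioned_usd_per_month:
        return "on-demand"
    return "provisioned"


# Hypothetical rates: $0.004 per 1,000 tokens on demand, $20,000 per month provisioned.
RATE = 0.004
PROVISIONED = 20_000

print(f"break-even: {break_even_tokens(PROVISIONED, RATE):,.0f} tokens per month")
print(cheaper_option(1_000_000_000, RATE, PROVISIONED))   # low, variable volume
print(cheaper_option(10_000_000_000, RATE, PROVISIONED))  # high, steady volume
```

Under these assumptions, spiky or low-volume workloads stay on demand, while steady high-volume workloads justify a commitment once they pass the break-even point.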

Azure OpenAI provides a range of models tailored for specific tasks, billed per million tokens and varying by region. Flexible pricing and scalable options enable businesses to optimize LLM usage, control costs, and efficiently match workloads without overprovisioning.

Optimizing LLMs involves more than cutting costs; it requires balancing efficiency and performance. Here are some practical examples of cost-optimization strategies for running LLMs.

Whether it's AWS, Azure, or Google Cloud, spot instances offer spare capacity at a steep discount compared to on-demand pricing. They can be used for interruptible workloads, such as AI model training and batch processing. Uber uses spot instances to train ML models for its AI platform, Michelangelo, while keeping costs to a minimum.

AI spend can be reduced with a Cloud FinOps infrastructure. It offers a range of perks, including monitoring, allocation, and cost optimization. It also identifies spikes in AI model inference costs with real-time anomaly detection.
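A simple version of spike detection can be sketched with a trailing-window rule: flag any day whose cost is far above the recent average. The window size, threshold, minimum-deviation floor, and cost figures below are illustrative assumptions, not the method of any particular FinOps product.

```python
from statistics import mean, stdev


def cost_anomalies(daily_costs: list[float], window: int = 7,
                   threshold: float = 3.0) -> list[int]:
    """Return indices of days whose cost exceeds the trailing mean
    by more than `threshold` standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu = mean(history)
        # Floor the deviation at 5% of the mean so flat histories do not
        # flag tiny fluctuations (a heuristic chosen for this sketch).
        sigma = max(stdev(history), 0.05 * mu)
        if daily_costs[i] > mu + threshold * sigma:
            flagged.append(i)
    return flagged


# Hypothetical daily inference costs in USD, with one spike.
costs = [100, 104, 98, 101, 99, 103, 100, 102, 240, 101]
spikes = cost_anomalies(costs)
print(spikes)
```

Running a check like this on each day's inference spend, per model or per team, gives an early signal before a spike turns into a monthly budget overrun.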

SpotServe deployed generative LLMs on preemptible instances. It leveraged spot VMs with adaptive graph parallelism to cut costs by half without sacrificing performance. This approach also improved reliability by reducing downtime.

Optimizing LLM costs isn’t just about choosing the cheapest model but about making smart architectural and operational decisions.

From using embedding-based retrieval to limit context size, to leveraging monitoring tools like Helicone for cost visibility, to selecting the right pricing model for your workloads, each choice directly impacts efficiency and ROI.

Balancing performance with cost control requires a mix of strategy, monitoring, and scalability planning. Minor adjustments, such as caching, pruning, and selecting the appropriate inference mode, can yield significant savings.

If you’re planning to maximize the potential of AI, you can leverage Maruti Techlabs’ specialized Artificial Intelligence Services along with their comprehensive Generative AI Services to develop customized, intelligent, and reliable solutions tailored to your unique needs.

Before scaling your AI systems further, assess your organization’s preparedness with our AI Readiness Assessment Tool — a quick way to identify opportunities for optimization and ensure your infrastructure is ready for cost-efficient AI adoption.

The cost of using an LLM typically depends on token consumption. You’re charged for both input and output tokens processed. For example, API rates might range from fractions of a cent per 1,000 tokens to several dollars, depending on the provider and model size.

Training large models demands massive computing resources. For instance, training GPT-3 was estimated at ~$500K to $4.6M, while training models like GPT-4 reportedly cost over $100M in compute alone.

Building an LLM (including infrastructure, model, data pipelines, inference, and maintenance) often runs into seven-figure costs. API usage alone can hit ~$700K per year in high-volume settings.