The Ultimate Guide to AI Governance: Top 5 Best Practices

Organizations across the globe are keen on using AI to automate and simplify their business processes. However, the rush to be first can push companies to develop and deploy AI without proper monitoring.

The risks of unchecked AI are no longer hypothetical; their adverse effects are already visible across industries. While quick implementation may be the goal, the long-term success of any AI system depends on how secure, ethical, and well governed it is.

Ignoring AI governance may result in technical debt, higher costs, and reputational damage. In contrast, investing in governance practices can pave the way for sustainable, scalable, and secure AI growth.

This article explains what AI governance is, why it matters, the top threats of unmonitored AI, key frameworks to use, real-world examples, and best practices to follow.

Artificial Intelligence (AI) governance is the set of standards, processes, and guardrails that ensure AI systems are ethical and safe to use.

AI governance frameworks guide how AI is researched, developed, and applied, so that it respects human rights and fairness.

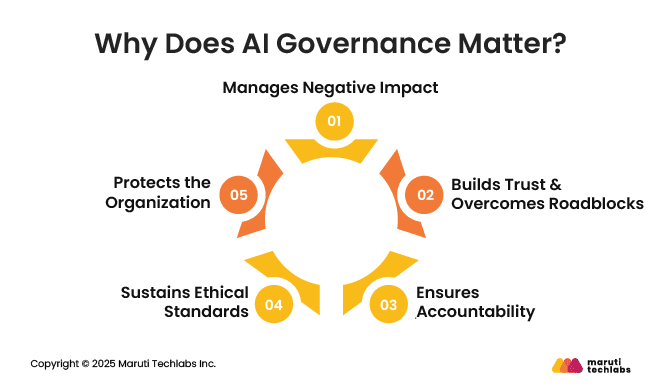

AI governance is crucial because it directly addresses the challenges of compliance, trust, and efficiency in AI development.

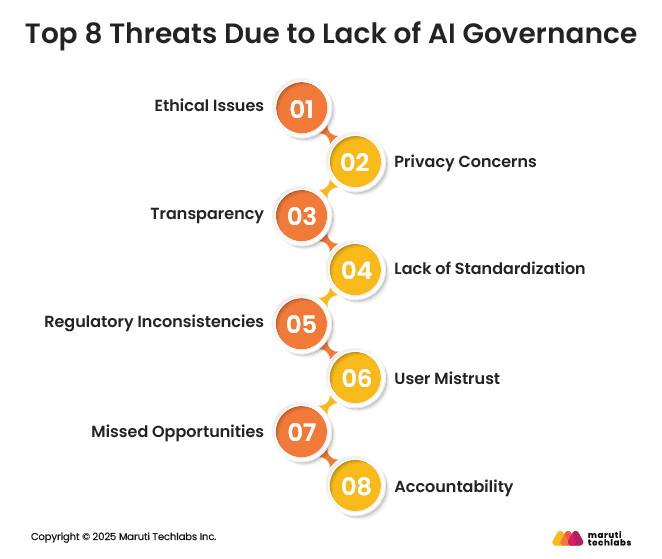

AI governance is critical to mitigating risks and meeting regulatory requirements. Here are some common but serious threats that arise when appropriate AI governance is missing.

Issues such as bias, unfair treatment, and discrimination commonly arise in AI systems without proper governance. Such oversights can deepen societal inequalities and cause other unintended consequences.

AI systems often process personal data. Without governance, data protection measures can be inadequate, leading to breaches of sensitive information.
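One common safeguard is to pseudonymize direct identifiers before data reaches a model, so training and inference pipelines never see raw values. The minimal sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library; the field names and the `SECRET_KEY` handling are hypothetical, and pseudonymized data still counts as personal data under GDPR, so this is one layer of protection rather than a complete one.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets manager, never source code.
SECRET_KEY = b"replace-with-a-managed-secret"

# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"email", "phone", "full_name"}


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable keyed hash of value (same input and key give the same token)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record: dict) -> dict:
    """Copy a record, replacing direct identifiers with pseudonymous tokens."""
    return {
        field: pseudonymize(str(value)) if field in DIRECT_IDENTIFIERS else value
        for field, value in record.items()
    }


customer = {"email": "jane@example.com", "full_name": "Jane Doe", "age": 41}
safe = protect_record(customer)
print(safe["age"])                         # 41: non-identifying fields pass through
print(safe["email"] != customer["email"])  # True: the raw email never leaves this step
```

Because the hash is keyed, someone without the key cannot recompute tokens from guessed email addresses, while the same customer still maps to the same token across datasets, which keeps joins and lineage tracking possible.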

Transparency helps users understand how an AI system reaches its decisions. Governance requires developers and AI teams to share information about their algorithms, which makes it easier to assess trust and accountability. Without it, AI decisions remain opaque.

A lack of standardized practices can lead different departments to take varied approaches to AI development. This hinders interoperability and makes it harder to establish common ethical norms.

In the absence of AI governance, regulatory frameworks may prove insufficient in practice. AI can then be deployed without proper oversight, opening the door to abuse or misuse.

Unaddressed risks and ethical concerns can erode public trust. Without governance, uncertainty and skepticism grow, which can slow AI adoption.

AI governance is critical for secure AI development. However, an ambiguous or overly restrictive framework can stifle innovation. It’s crucial to strike the right balance between fostering technological advancement and mitigating risks.

When failures occur, it is difficult to assign responsibility without clear governance structures. Establishing accountability is essential to address issues promptly and keep AI systems improving.

AI risk management frameworks offer a well-defined process to classify risks, determine controls, and stay updated with changing regulations.
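The classify-then-control loop these frameworks describe can be pictured as a simple risk register. The sketch below scores each risk as likelihood × impact on a 1 to 5 scale and flags anything above a threshold that has no control assigned; the scale, the threshold of 12, and the example risks are illustrative assumptions, not values prescribed by any framework.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); illustrative scale
    impact: int      # 1 (negligible) to 5 (severe); illustrative scale
    controls: list[str] = field(default_factory=list)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def uncontrolled_high_risks(register: list[Risk], threshold: int = 12) -> list[str]:
    """Names of risks scoring at or above threshold that have no mitigating control."""
    return [r.name for r in register if r.score >= threshold and not r.controls]


# Hypothetical register entries.
register = [
    Risk("Biased credit decisions", likelihood=4, impact=5, controls=["fairness gate"]),
    Risk("PII leakage via derived features", likelihood=3, impact=5),
    Risk("Stale model after data drift", likelihood=3, impact=3),
]
print(uncontrolled_high_risks(register))  # ['PII leakage via derived features']
```

The design choice here is deliberately blunt: a high score alone is acceptable once a control is recorded, so the register surfaces gaps in mitigation rather than simply listing dangerous systems.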

Here are three of the most widely used frameworks.

The NIST AI Risk Management Framework (AI RMF) is published by the U.S. National Institute of Standards and Technology. It offers voluntary guidance for managing AI risks across sectors through four core functions: Govern, Map, Measure, and Manage.

ISO/IEC 23894 provides international guidance for managing AI risks across the system lifecycle, adapting the general risk management principles of ISO 31000 to AI’s unique characteristics.

The EU AI Act is the first comprehensive law regulating AI across sectors, with obligations scaled to each system’s real-world impact. It sorts AI systems into four risk categories: unacceptable (prohibited), high, limited, and minimal.
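To illustrate the tiered approach, here is a hypothetical internal triage helper that maps use-case tags to the Act's four tiers. The tags and the mapping are simplified assumptions for illustration only; actual classification depends on the Act's text, including its list of prohibited practices and its annex of high-risk use cases.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations (e.g. risk management, logging, human oversight)"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"


# Hypothetical, simplified mapping from internal use-case tags to tiers.
TIER_BY_USE_CASE = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> RiskTier:
    """Look up a tier; unknown use cases are treated as HIGH until reviewed."""
    return TIER_BY_USE_CASE.get(use_case, RiskTier.HIGH)


for case in ["credit_scoring", "spam_filter", "new_unreviewed_tool"]:
    tier = triage(case)
    print(f"{case}: {tier.name} ({tier.value})")
```

Defaulting unknown use cases to the high-risk tier is a conservative choice made for this sketch: it forces a review before a new system can slip through with no obligations attached.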

AI can do astonishing things, but it can also make costly blunders. Here are some real-world examples of governance failures and successes.

A class-action lawsuit against Paramount highlights the consequences of weak AI governance: the company allegedly mishandled subscriber data. The case underscores that AI-driven personalization requires transparent data lineage and consent management to avoid serious legal and compliance risks.

A major bank faced backlash for granting women lower credit limits than men with similar profiles. The issue stemmed from biased historical data, and without AI lineage tracking, the bank couldn’t trace or correct the bias, causing legal and reputational damage.

A leading surgical robotics firm built an AI tool to support surgeons using data such as their experience and specialty. However, attributes generated by the AI risked revealing hidden personal data. Periodic scans missed the issue, highlighting the importance of continuous monitoring.
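One way continuous monitoring can catch this kind of leak is to check whether any derived feature closely tracks a sensitive attribute, effectively acting as a proxy for it. The sketch below computes the Pearson correlation between each feature and a sensitive attribute and flags those above a threshold; the feature names, data, and 0.8 threshold are hypothetical, and plain correlation only catches linear, single-feature proxies.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no signal about the other
    return cov / sqrt(var_x * var_y)


def flag_proxies(features: dict[str, list[float]], sensitive: list[float],
                 threshold: float = 0.8) -> list[str]:
    """Feature names whose absolute correlation with the sensitive attribute exceeds threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, sensitive)) > threshold]


# Hypothetical data: a sensitive attribute encoded as 0/1 for six records.
sensitive = [0, 0, 0, 1, 1, 1]
features = {
    "years_experience": [5, 12, 8, 7, 11, 6],
    "derived_schedule_score": [0.1, 0.2, 0.1, 0.9, 0.8, 0.9],  # hypothetical AI-created attribute
}
print(flag_proxies(features, sensitive))  # ['derived_schedule_score']
```

Running such a check every time features are regenerated, rather than in occasional scans, is what turns it into continuous monitoring.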

A global e-commerce brand faced AI governance challenges as it scaled. Tracking customer data across websites, payments, and recommendations was complex.

The company implemented end-to-end data lineage, which provided visibility into how data flowed, ensured AI decisions respected customer consent, met GDPR and CCPA requirements, and strengthened customer trust.
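A minimal way to picture end-to-end lineage is to attach a provenance trail and consent flags to each record as it moves between systems, and to check consent before the record feeds an AI decision. The sketch below is a hypothetical in-memory model; the system names, consent purposes, and the `LineageRecord` structure are assumptions, and production systems typically rely on dedicated lineage and consent-management tooling.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageRecord:
    customer_id: str
    consents: set[str]  # purposes the customer agreed to (hypothetical labels)
    hops: list[tuple[str, str, datetime]] = field(default_factory=list)

    def record_hop(self, system: str, action: str) -> None:
        """Append one provenance step: which system touched the data and how."""
        self.hops.append((system, action, datetime.now(timezone.utc)))

    def trail(self) -> list[str]:
        return [f"{system}:{action}" for system, action, _ in self.hops]


def can_use_for(record: LineageRecord, purpose: str) -> bool:
    """Consent gate checked before the record feeds an AI decision."""
    return purpose in record.consents


rec = LineageRecord("c-123", consents={"order_processing"})
rec.record_hop("web_checkout", "collected")
rec.record_hop("payments", "enriched")
if can_use_for(rec, "personalized_recommendations"):
    rec.record_hop("recommender", "scored")
else:
    rec.record_hop("recommender", "skipped_no_consent")
print(rec.trail())
# ['web_checkout:collected', 'payments:enriched', 'recommender:skipped_no_consent']
```

Logging the skip as its own hop, rather than silently dropping the record, is what lets an auditor later confirm that the consent check actually ran.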

A leading bank used real-time AI monitoring to detect and fix bias before deployment, flagging issues, auditing decisions, and tracking data lineage. By embedding governance early, the bank turned fairness into a strong competitive edge.
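A pre-deployment fairness check like this can start as simply as comparing outcome rates across groups. The sketch below computes the disparate impact ratio (the lowest group's approval rate divided by the highest's) and holds the model back when it falls below 0.8, a threshold borrowed from the "four-fifths rule" in US employment-selection guidance. The group labels, data, and choice of a single metric are assumptions; real audits usually examine several fairness metrics.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact(decisions: list[tuple[str, bool]]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved in any group: no disparity to measure
    return min(rates.values()) / highest


def fairness_gate(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> bool:
    """True if the model may be deployed, False if it should be held for review."""
    return disparate_impact(decisions) >= threshold


# Hypothetical validation-set outcomes from a candidate credit model.
outcomes = ([("group_a", True)] * 80 + [("group_a", False)] * 20
            + [("group_b", True)] * 56 + [("group_b", False)] * 44)
print(approval_rates(outcomes))              # {'group_a': 0.8, 'group_b': 0.56}
print(round(disparate_impact(outcomes), 2))  # 0.7
print(fairness_gate(outcomes))               # False
```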

A healthcare AI firm ensured HIPAA and GDPR compliance through continuous monitoring: it secured patient data, tracked AI-generated information, and validated its models. As a result, it avoided regulatory issues while enabling safe, widespread adoption of its AI diagnostics.
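Continuous model validation often includes checking whether live inputs still resemble the data a model was validated on. The sketch below computes the Population Stability Index (PSI) over pre-binned feature counts; the bins, counts, and the 0.25 alert threshold (a commonly cited rule of thumb) are assumptions, not details reported about this firm.

```python
from math import log


def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index between two histograms over the same bins."""
    exp_total, act_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # eps avoids log(0) and division by zero for empty bins
        e_pct = max(e / exp_total, eps)
        a_pct = max(a / act_total, eps)
        score += (a_pct - e_pct) * log(a_pct / e_pct)
    return score


def check_drift(expected_counts: list[int], actual_counts: list[int],
                alert_at: float = 0.25) -> str:
    """Return an alert string when PSI exceeds the (assumed) threshold."""
    value = psi(expected_counts, actual_counts)
    return "ALERT: revalidate model" if value > alert_at else "stable"


# Hypothetical patient-age histogram (4 bins): validation data vs. last week's inputs.
baseline = [250, 250, 250, 250]
this_week = [100, 150, 300, 450]
print(round(psi(baseline, this_week), 3))  # 0.315
print(check_drift(baseline, this_week))    # ALERT: revalidate model
```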

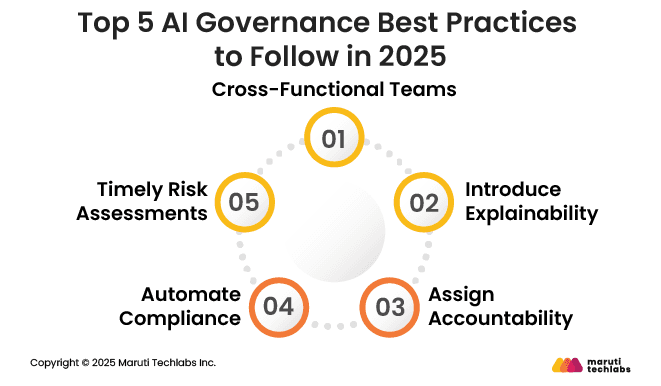

As AI adoption accelerates, establishing strong governance frameworks is becoming a key priority for organizations. Here are some best practices that companies can include in their workflows to ensure accountability and long-term value.

Governance requires oversight from experts across compliance, legal, technical, and business functions. This helps identify risks from every angle.

To ensure this is implemented correctly, companies should:

Explainability is a hallmark of responsible AI use and makes systems more trustworthy. It enables efficient audits, reassures stakeholders and regulators, and helps uncover and reduce bias.
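One widely used, model-agnostic way to add explainability is permutation feature importance: shuffle one input column at a time and measure how much the model's accuracy drops. The sketch below applies it to a toy, hypothetical loan-approval rule standing in for a trained model; with a real model you would call its prediction function instead, and scikit-learn ships a ready-made version as `sklearn.inspection.permutation_importance`.

```python
import random


def model(row: dict) -> bool:
    """Hypothetical stand-in for a trained model: approves on income and debt only."""
    return row["income"] > 50 and row["debt_ratio"] < 0.4


def accuracy(rows: list[dict], labels: list[bool]) -> float:
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows: list[dict], labels: list[bool], feature: str,
                           repeats: int = 20, seed: int = 0) -> float:
    """Average drop in accuracy when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    base = accuracy(rows, labels)
    drops = []
    for _ in range(repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        drops.append(base - accuracy(permuted, labels))
    return sum(drops) / repeats


# Hypothetical validation data; labels come from the model itself, so the
# scores measure how much the model relies on each feature.
data_rng = random.Random(42)
rows = [{"income": data_rng.uniform(20, 100),
         "debt_ratio": data_rng.uniform(0, 0.8),
         "zip_digit": data_rng.randint(0, 9)} for _ in range(200)]
labels = [model(r) for r in rows]

for feature in ["income", "debt_ratio", "zip_digit"]:
    # zip_digit prints 0.0 because the model never reads it
    print(feature, round(permutation_importance(rows, labels, feature), 3))
```

An auditor reading this output can confirm which inputs actually drive decisions, and a near-zero score for an irrelevant feature is a quick sanity check on the method itself.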

To introduce explainability, organizations can:

Assigning ownership makes sure that the right individuals handle issues. It also ensures that AI systems are aligned with organizational values.

Accountability can be strengthened by:

Manual oversight alone can’t provide the constant vigilance AI governance requires. Automating compliance checks offers more comprehensive and consistent coverage.
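As a minimal illustration of automated compliance, the sketch below runs a few policy checks against a hypothetical model inventory: every model needs a named owner, a risk assessment no older than 180 days, and a recorded approval before it runs in production. The fields, policies, and 180-day window are assumptions chosen for the example; a real setup would run such checks on a schedule or in a deployment pipeline.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ModelEntry:
    name: str
    owner: Optional[str]
    last_risk_assessment: Optional[date]
    approved_for_production: bool
    in_production: bool


MAX_ASSESSMENT_AGE = timedelta(days=180)  # hypothetical policy window


def compliance_issues(entry: ModelEntry, today: date) -> list[str]:
    """Human-readable policy violations for one model (empty list means compliant)."""
    issues = []
    if not entry.owner:
        issues.append("no accountable owner")
    if entry.last_risk_assessment is None:
        issues.append("no risk assessment on file")
    elif today - entry.last_risk_assessment > MAX_ASSESSMENT_AGE:
        issues.append("risk assessment older than 180 days")
    if entry.in_production and not entry.approved_for_production:
        issues.append("in production without approval")
    return issues


# Hypothetical inventory; `today` is fixed so the example is reproducible.
inventory = [
    ModelEntry("credit_limit_v3", "risk-team", date(2024, 5, 1), True, True),
    ModelEntry("churn_predictor", None, date(2023, 1, 15), False, True),
]
today = date(2024, 6, 1)
for entry in inventory:
    print(entry.name, compliance_issues(entry, today) or "compliant")
```

The owner check also supports the accountability practice above: a model with no named owner fails compliance automatically instead of waiting for someone to notice.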

Organizations can streamline this process by:

As AI models are updated, the risks associated with them change too. Regular, timely risk assessments keep models safe, compliant, and fair over time.
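One way to keep assessments timely is to tie them to the model artifact itself: record a fingerprint of the model when it is assessed, and require reassessment whenever the deployed artifact's fingerprint differs. The sketch below uses SHA-256 over the artifact bytes; the byte strings and the in-memory assessment log are hypothetical placeholders for real model files and records.

```python
import hashlib


def fingerprint(artifact: bytes) -> str:
    """SHA-256 digest identifying an exact model artifact."""
    return hashlib.sha256(artifact).hexdigest()


# Hypothetical log: model name -> fingerprint of the version last risk-assessed.
assessed_versions: dict[str, str] = {}


def record_assessment(name: str, artifact: bytes) -> None:
    assessed_versions[name] = fingerprint(artifact)


def needs_reassessment(name: str, deployed_artifact: bytes) -> bool:
    """True if the model was never assessed or has changed since its last assessment."""
    return assessed_versions.get(name) != fingerprint(deployed_artifact)


v1 = b"weights-v1"  # placeholder bytes standing in for a serialized model
v2 = b"weights-v2"
record_assessment("credit_limit", v1)
print(needs_reassessment("credit_limit", v1))  # False
print(needs_reassessment("credit_limit", v2))  # True: retrained model, new risks
print(needs_reassessment("fraud_model", v1))   # True: never assessed
```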

To make continual assessments effective, companies should:

AI governance is no longer optional; it's essential for any organization leveraging AI. As companies increasingly rely on AI for decision-making and operational efficiency, the risks of bias, privacy violations, and regulatory non-compliance grow with that reliance.

Without clear policies, continuous monitoring, and end-to-end data lineage, even well-intentioned AI initiatives can backfire, causing legal, financial, and reputational damage.

Robust AI governance ensures transparency, accountability, and fairness in AI-driven processes. It allows organizations to track how data flows through models, detect bias early, maintain regulatory compliance, and safeguard customer trust.

By embedding governance into broader AI Strategy & Readiness efforts from the start, businesses can not only mitigate risks but also unlock AI’s full potential to drive innovation and competitive advantage.

At Maruti Techlabs, we offer Artificial Intelligence Services that provide end-to-end support from strategy and compliance to monitoring and optimization.

Connect with us and explore how our experts help organizations implement trustworthy, scalable AI while staying ahead in a rapidly evolving landscape.

AI governance ensures transparency, accountability, and fairness in AI systems. It helps organizations track data usage, prevent bias, comply with regulations, and protect privacy.

By embedding governance early, businesses can build trust with customers, mitigate risks, and maximize the benefits of AI while avoiding costly legal or reputational issues.

Without AI governance, organizations face biased decisions, privacy breaches, regulatory penalties, and reputational damage. Unmonitored AI models can perpetuate historical inequities, mismanage sensitive data, and produce unexplainable outcomes.

In addition, a lack of oversight reduces accountability, making it difficult to trace errors or justify decisions, potentially leading to financial and legal consequences.

AI can enhance governance by automating monitoring, detecting anomalies, and providing explainable insights into model decisions. It can track data lineage, flag bias, ensure compliance, and generate real-time reports.

Leveraging AI for governance allows organizations to maintain transparency, strengthen internal controls, and proactively manage risks efficiently.