5 Proven Tools That Make AI Explainability Automatic & Transparent

When a machine learning model reaches over 95% accuracy, it may seem as though the highest standard has been achieved. However, accuracy alone does not determine whether a system is trustworthy.

When using AI in industries like finance, healthcare, or hiring, it’s critical to uncover the ‘why’ behind decisions. A model that makes accurate predictions but lacks explainability is a black box. Black boxes are dangerous.

Interpretability and explainability are therefore crucial for enhancing transparency and accountability in AI-driven decisions. Yet explainability, though necessary, is still largely achieved through manual effort.

Manual explainability often relies on documentation, reviews, and human interpretation, which can be slow, inconsistent, and hard to scale as models grow more complex. Automation changes this by continuously tracking data lineage, transformations, and model behavior in real time. This creates a living map of context, enabling explainability to scale alongside innovation, making AI systems transparent, auditable, and compliant without slowing down development.
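As a rough illustration of the automated lineage tracking described above, the sketch below wraps each data transformation in a decorator that logs a fingerprint of its input and output. The decorator `track_lineage`, the in-memory `LINEAGE_LOG`, and the example transformations are all hypothetical; a production setup would typically write these records to a metadata store or a dedicated lineage tool.

```python
import functools
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical in-memory lineage log; a real system would persist entries elsewhere.
LINEAGE_LOG = []

def _fingerprint(rows):
    """Short, stable hash of a dataset so each step's input and output can be matched."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]

def track_lineage(step_name):
    """Decorator that logs every transformation applied to a list-of-dicts dataset."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(rows, *args, **kwargs):
            result = func(rows, *args, **kwargs)
            LINEAGE_LOG.append({
                "step": step_name,
                "function": func.__name__,
                "input_hash": _fingerprint(rows),
                "output_hash": _fingerprint(result),
                "rows_in": len(rows),
                "rows_out": len(result),
                "timestamp": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator

@track_lineage("drop_missing_income")
def drop_missing(rows):
    return [r for r in rows if r.get("income") is not None]

@track_lineage("scale_income")
def scale_income(rows):
    return [{**r, "income_k": r["income"] / 1000} for r in rows]

raw = [{"id": 1, "income": 52000}, {"id": 2, "income": None}, {"id": 3, "income": 71000}]
features = scale_income(drop_missing(raw))
for entry in LINEAGE_LOG:
    print(entry["step"], entry["rows_in"], "->", entry["rows_out"])
```

Because each step’s `output_hash` matches the next step’s `input_hash`, the log forms a chain that can be walked backwards from a model feature to its raw source.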

This article explores the myths behind automating AI explainability (XAI), how to embed it into the ML lifecycle, essential tools, use cases, and challenges.

AI explainability isn’t just about technology; it’s about using AI safely and fairly.

Many people think making AI explainable means slowing everything down with endless paperwork and manual reviews. But that isn’t true. The smarter way is to automate the process of creating context and explanations as the system runs.

Imagine always having a live map that shows how data moves through your system.

This constant tracking means you don’t have to figure things out later. You already know what influenced an AI decision, and you can spot risks or compliance issues early. In other words, explainability becomes part of the system itself, not an extra chore at the end. That way, teams can innovate freely while staying safe and transparent.

By using automation to track this context continuously, teams can innovate quickly while still meeting compliance and ethical standards.

Embedding explainability into every stage of the ML lifecycle means making the model’s decisions understandable, trustworthy, and transparent, not just after deployment, but from the very beginning.

At the data collection stage, you record where data comes from, note any sensitive or personal fields, and check for imbalances or bias. During feature engineering and data preprocessing, you track the transformations you apply so you know precisely how raw inputs become model features.
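Parts of these data-collection checks can be automated. The minimal sketch below, far from a complete data audit, records the data source, flags columns whose names appear on a sensitive-field list, and warns when the minority label falls below a threshold. `SENSITIVE_FIELDS`, `audit_dataset`, and the 20% threshold are illustrative assumptions.

```python
from collections import Counter

# Hypothetical policy list of column names treated as sensitive or personal.
SENSITIVE_FIELDS = {"gender", "age", "ethnicity", "zip_code"}

def audit_dataset(rows, label_key, source, imbalance_threshold=0.2):
    """Return a small audit record: data source, sensitive columns, and label balance."""
    columns = set().union(*(row.keys() for row in rows))
    counts = Counter(row[label_key] for row in rows)
    total = sum(counts.values())
    shares = {label: n / total for label, n in counts.items()}
    return {
        "source": source,
        "sensitive_fields": sorted(columns & SENSITIVE_FIELDS),
        "label_shares": shares,
        # Flag the dataset if any class makes up less than the threshold share.
        "imbalanced": min(shares.values()) < imbalance_threshold,
    }

rows = [
    {"age": 30 + i, "gender": "F" if i % 2 else "M", "income": 40000 + 1000 * i,
     "label": "denied" if i == 0 else "approved"}
    for i in range(10)
]
report = audit_dataset(rows, label_key="label", source="loan_applications_export")
print(report["sensitive_fields"], report["imbalanced"])
```

Matching on column names is a crude heuristic: proxies for sensitive attributes that carry innocuous names still need human review.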

When you move into model training, you either choose interpretable models (like decision trees or linear models) or use tools that help explain black-box models (e.g., SHAP, LIME).

During validation, you examine explanations of model behavior to find unexpected bias, overfitting, or misinterpreted features. Then, upon deployment, you should continue monitoring: are the explanations consistent? Does model behavior degrade or drift? Who can interpret the decisions, and is there feedback from domain experts or users?
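One way to automate the drift question above is to compare each feature’s live distribution with its training distribution. The sketch below computes the population stability index (PSI), a common drift statistic, as the sum over bins of (a − e)·ln(a / e), where e and a are the reference and live bin shares. The function name, equal-width binning, and the small floor for empty bins are choices made for this example.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample (e.g. training data) and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]  # shifted toward higher values
print(round(population_stability_index(reference, reference), 3))
print(population_stability_index(reference, live) > 0.25)
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per feature. Running the same check on explanation outputs, such as each feature’s average attribution, can also flag when the model’s reasoning changes.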

Finally, incorporate feedback loops: the insights from explainability should feed back into future data collection, model updates, stakeholder communications, and compliance reviews. Embedding explainability this way helps build models that are performant, ethically aligned, trustworthy, and auditable.

As organizations place greater emphasis on transparency and trust in AI, several tools have emerged to make model behavior easier to interpret. Here are the top tools you can use to make your AI systems explainable.

SHapley Additive exPlanations (SHAP) is a widely adopted explainability framework used across many industries. It explains why a model makes a particular prediction. The original SHAP paper observes that the highest accuracy is often achieved only with complex models that are hard to interpret, which creates a tension between interpretability and accuracy that SHAP aims to resolve.

SHAP takes a game-theoretic approach to explaining the output of ML models. Using classic Shapley values, it connects optimal credit allocation with local explanations, assigning each feature a share of the difference between a prediction and a baseline. These values can be used to interpret any ML model.
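To make the Shapley-value idea concrete, here is a brute-force sketch for a single prediction. It assumes that “removing” a feature means replacing it with the value from one baseline (reference) input, and it enumerates every coalition of features, so its cost grows exponentially with the number of features; the `shap` library instead relies on faster estimators such as Kernel SHAP and Tree SHAP. The toy `model` is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition take their baseline value, so the
    attributions sum to predict(x) - predict(baseline).
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Classic Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

# Toy "credit score" model with an interaction term (hypothetical).
def model(f):
    income, debt, years = f
    return 2.0 * income - 1.5 * debt + 0.5 * income * years

x = [3.0, 1.0, 2.0]
baseline = [1.0, 1.0, 1.0]
phis = shapley_values(model, x, baseline)
print([round(p, 3) for p in phis], round(sum(phis), 3), model(x) - model(baseline))
# [5.5, 0.0, 1.0] 6.5 6.5
```

The attributions sum exactly to the gap between the prediction and the baseline prediction (the additivity property). `debt` receives zero because it equals its baseline value, and the credit for the interaction term is split between `income` and `years`.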

Local Interpretable Model-Agnostic Explanations (LIME), developed by researchers at the University of Washington, brings greater transparency to algorithmic decisions. It explains individual predictions of any black-box classifier, including multi-class classifiers, by fitting a simple interpretable model to the classifier’s behavior around the prediction being explained.

Because its explanations are model-agnostic, LIME can be applied across different domains and tasks, such as tabular, text, and image data. Its modular approach produces in-depth explanations of individual predictions that are faithful to the model’s local behavior.
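The core LIME idea can be sketched without the `lime` package: sample perturbations around the instance, weight them by proximity, and fit a weighted linear model whose coefficients serve as the local explanation. This simplified version uses Gaussian perturbations and an exponential kernel on Euclidean distance; the real library’s samplers, feature selection, and defaults differ, and the function names and parameter values here are assumptions.

```python
import math
import random

def solve(a, b):
    """Solve a @ w = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def lime_explain(predict, x, num_samples=500, scale=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return per-feature slopes."""
    rng = random.Random(seed)
    k = len(x) + 1
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        dist = math.sqrt(sum((zi - xi) ** 2 for zi, xi in zip(z, x)))
        rows.append([1.0] + z)  # leading 1.0 gives the surrogate an intercept
        targets.append(predict(z))
        weights.append(math.exp(-(dist ** 2) / kernel_width ** 2))
    # Weighted normal equations: (X^T W X) w = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(k)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept

def black_box(z):  # hypothetical non-linear model
    return z[0] ** 2 + 3.0 * z[1]

print([round(c, 2) for c in lime_explain(black_box, [1.0, 2.0])])
```

Near x = [1, 2], the true local slopes of this model are 2 and 3, so the surrogate’s coefficients should land close to those values even though the model itself is non-linear.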

ELI5 is a Python package that helps debug ML classifiers and explain their predictions. It supports a range of popular ML frameworks and packages.
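One technique ELI5 offers is permutation importance, which measures how much a model’s score drops when one feature’s values are shuffled. The sketch below reimplements that idea in plain Python rather than calling ELI5’s API; the classifier, data, and accuracy metric are hypothetical.

```python
import random

def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical classifier that only looks at feature 0.
def clf(row):
    return int(row[0] > 0.5)

rng = random.Random(1)
X = [[rng.random(), rng.random()] for _ in range(200)]
y = [clf(row) for row in X]
print(permutation_importance(clf, X, y))
```

Because the toy classifier ignores the second feature, shuffling it leaves accuracy unchanged and its importance is exactly zero, while shuffling the first feature drops accuracy to roughly chance.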

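InterpretML is an open-source Python library that supports two approaches to AI explainability: training glassbox models that are interpretable by design, such as the Explainable Boosting Machine (EBM), and explaining existing blackbox systems.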
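Glassbox models such as EBMs are additive: each feature has its own learned shape function, and the prediction in log-odds space is an intercept plus one score per feature (EBMs can also add a few pairwise interaction terms, omitted here). The explanation is therefore the model itself. The sketch below hard-codes hypothetical shape functions as binned lookup tables to show how such a prediction decomposes; it does not train anything and does not use InterpretML’s API.

```python
import bisect
import math

# Hypothetical shape functions, one per feature: (bin edges, score per bin).
# A glassbox additive model learns tables like these from data.
SHAPE_FUNCTIONS = {
    "income_k": ([30, 60, 90], [-0.8, -0.1, 0.4, 0.9]),
    "debt_ratio": ([0.2, 0.4], [0.5, 0.0, -1.2]),
    "years_employed": ([1, 5], [-0.3, 0.1, 0.3]),
}
INTERCEPT = -0.2

def explain_additive(sample):
    """Return each feature's log-odds contribution and the predicted probability."""
    contributions = {}
    for name, (edges, scores) in SHAPE_FUNCTIONS.items():
        # bisect_right returns the index of the bin the value falls into.
        contributions[name] = scores[bisect.bisect_right(edges, sample[name])]
    logit = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    return contributions, probability

contribs, p = explain_additive({"income_k": 72, "debt_ratio": 0.45, "years_employed": 3})
for name, score in sorted(contribs.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {score:+.2f}")
print(f"approval probability: {p:.2f}")
```

Since the contributions are simply read from the tables, the same numbers serve as both the prediction’s ingredients and its explanation, with no separate approximation step.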

Another free and accessible Python package provides explanations of ML algorithms, along with proxy explainability metrics, for a deeper understanding of model behavior. Its key features include support for time series, tabular, image, and text data, an intuitive UI, and step-by-step walkthroughs of different use cases.
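Proxy explainability metrics score an explanation without a human in the loop. One commonly used example, often called faithfulness, checks whether features with larger attributions actually move the prediction more when removed. The sketch below computes it as the Pearson correlation between attributions and single-feature removal effects; the baseline-replacement scheme, the linear `model`, and both attribution vectors are hypothetical.

```python
import math

def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)

def faithfulness(predict, x, baseline, attributions):
    """Correlation between each feature's attribution and the prediction drop
    observed when that feature alone is reset to its baseline value."""
    full = predict(x)
    drops = []
    for i in range(len(x)):
        x_removed = list(x)
        x_removed[i] = baseline[i]
        drops.append(full - predict(x_removed))
    return pearson(attributions, drops)

def model(f):  # hypothetical linear scorer
    return 2.0 * f[0] - 1.0 * f[1] + 0.5 * f[2]

x, baseline = [3.0, 2.0, 4.0], [0.0, 0.0, 0.0]
good = [6.0, -2.0, 2.0]  # matches each feature's true effect
bad = [-2.0, 6.0, 2.0]   # ranks the features wrongly
print(round(faithfulness(model, x, baseline, good), 3),
      round(faithfulness(model, x, baseline, bad), 3))
# 1.0 -1.0
```

A score near 1 means the explanation ranks features consistently with the model’s behavior; the deliberately wrong attributions score near -1.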

With the growing need for transparency in AI-driven decisions, certain industries have adopted explainable AI faster than others. Here are two of the best-known sectors making extensive use of it.

1. Healthcare: Explainable AI supports medical diagnosis, image analysis, and resource optimization, and it accelerates diagnostics. It enhances patient care by making the decision-making process more transparent and traceable. In addition, AI can help streamline the pharmaceutical approval process.

A great example of this is IBM Watson, which offers diagnostic support, treatment planning, and personalized patient management.

2. Financial Services: In financial services, AI can improve customer experience by making credit and loan approvals more straightforward. It speeds up risk assessments for credit, wealth management, and financial crime. It also helps resolve complaints and issues quickly. Furthermore, it supports more confident pricing, product recommendations, and investment options.

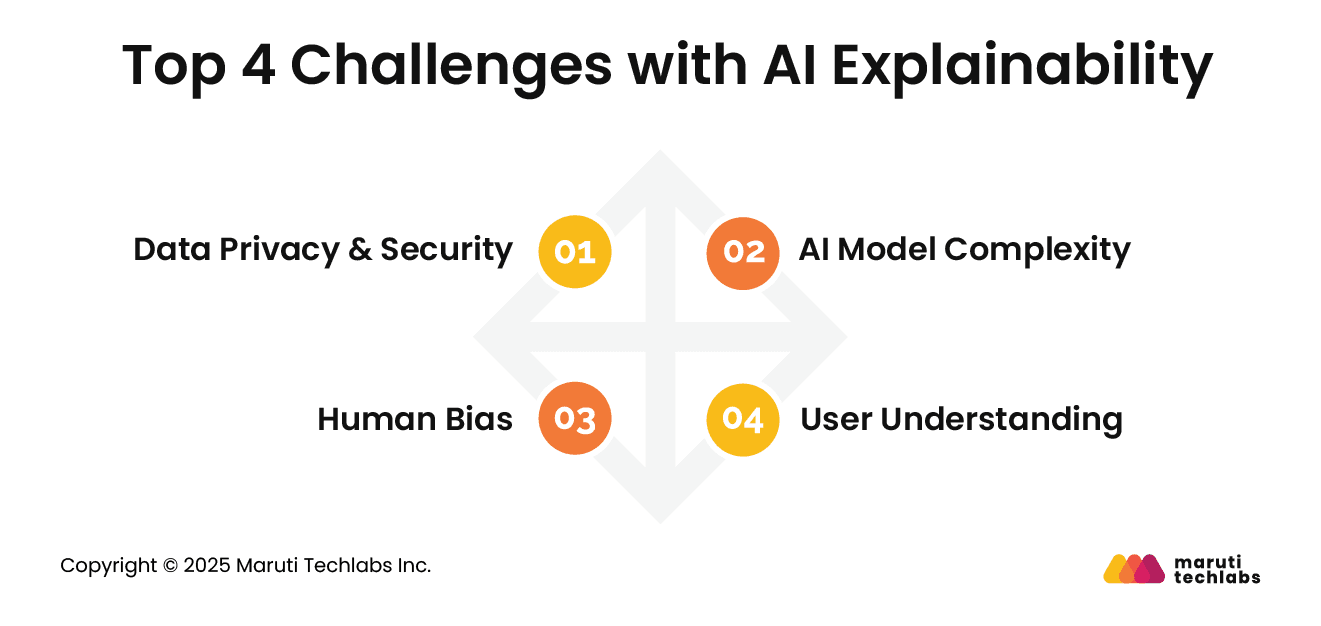

Despite its benefits, explainable AI presents its own set of challenges that organizations must address. Here are the most prominent challenges observed with explainable AI.

Explaining certain decisions may require combining sensitive data with other public and private data. That data needs strong security to protect it from attackers and vulnerabilities, because compromising the privacy and security of individuals and organizations can have serious consequences. Clear protocols for protecting data and securing explainable AI systems are therefore essential.

AI models evolve to meet an organization’s changing needs, and the push for better decision-making can lead you to choose models that are cumbersome to explain.

Explainable AI systems, too, must continuously evolve to retain their ability to offer explainability to AI decisions. This demands investment in research and development that ensures the challenges posed by complex and changing AI models are addressed with explainable AI techniques.

While explainable AI aims to be transparent, it can still be affected by bias in the data and algorithms used to analyze it. This is because humans set the parameters for the data used to train XAI systems.

The data sources and algorithms used must be carefully selected to mitigate human bias in these systems. It also requires continuous efforts to promote diversity and inclusion while developing AI technology.

Although XAI is designed to enhance the understanding of AI models, many users may lack the necessary background knowledge to comprehend the provided explanations fully. Therefore, XAI systems should be designed with users of different knowledge bases in mind.

These systems can also include interactive interfaces, visual aids, or other add-ons that make the reasoning behind AI decisions easier to follow.
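One small step toward explanations that non-experts can follow is translating signed feature contributions (from SHAP, LIME, or an additive model) into a plain sentence. The sketch below is hypothetical throughout: the friendly-name mapping, the wording template, and the choice to show the top two factors are assumptions to test and adapt with real users.

```python
# Hypothetical mapping from internal feature names to wording users recognize.
FRIENDLY_NAMES = {
    "income_k": "annual income",
    "debt_ratio": "debt-to-income ratio",
    "years_employed": "years in current job",
}

def explain_in_words(contributions, outcome="approval", top_k=2):
    """Summarize the strongest signed contributions as one plain-language sentence."""
    # Ignore features that had no effect, then rank by absolute contribution.
    nonzero = [(name, v) for name, v in contributions.items() if v != 0]
    ranked = sorted(nonzero, key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    if not ranked:
        return "No single factor stood out."
    phrases = []
    for name, value in ranked:
        label = FRIENDLY_NAMES.get(name, name.replace("_", " "))
        direction = "increased" if value > 0 else "decreased"
        phrases.append(f"your {label} {direction} the likelihood of {outcome}")
    sentence = " and ".join(phrases)
    return sentence[0].upper() + sentence[1:] + "."

print(explain_in_words({"income_k": 0.4, "debt_ratio": -1.2, "years_employed": 0.1}))
```

Limiting the sentence to the strongest factors trades completeness for readability; an interactive interface could let curious users expand it to the full list.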

Automating AI explainability is not just a technical upgrade; it's a strategic shift toward building trustworthy, transparent, and responsible AI systems.

By embedding automation into the ML lifecycle, organizations move beyond static reports and manual reviews to create a living, continuous understanding of how models make decisions.

This ensures compliance and accountability while freeing teams to focus on innovation rather than paperwork. More importantly, it empowers stakeholders from developers to regulators to end-users with clarity and confidence in AI outcomes.

As AI adoption accelerates, the organizations that embrace automated explainability will not only reduce risk but also earn trust, drive adoption, and set themselves apart as leaders in responsible innovation. The future of explainability lies in automation that scales with AI itself.

If you’re keen to learn more about introducing AI explainability into your automated systems, our Artificial Intelligence Consultants can help.

Connect with us today to learn more about the subject and stay ahead of the competition.

Curious about your organization’s AI maturity? Try our AI Readiness Assessment Tool to evaluate your preparedness for building transparent and explainable AI systems.

Cloud providers like AWS, Azure, and Google Cloud offer AI platforms with built-in explainability tools, such as SageMaker Clarify, Azure Machine Learning Interpretability, and Google Cloud’s Explainable AI. These infrastructures provide automated insights, transparency, and monitoring throughout the ML lifecycle.

Machine learning model explainability is the ability to clearly show how and why a model makes specific predictions. It involves tracing data inputs, features, and transformations, and then presenting this information in a manner that is understandable to humans. Explainability ensures trust, accountability, and compliance, and helps identify errors or biases in AI systems.