The Real Cost of Kubernetes Over-Provisioning and How to Fix It

Over-provisioning in Kubernetes means giving applications more CPU, memory, or storage than they need. It’s a common practice and usually a well-intentioned one: teams want to avoid downtime or performance issues. In the effort to be cautious, however, they often reserve far more resources than their workloads actually use.

Kubernetes makes it easy to scale and manage applications, but that same flexibility can lead to wasted resources. For example, an app that only uses 4 vCPUs might be assigned 16, or a database needing 16GB RAM may sit on a 64GB setup. The unused capacity adds up quickly, especially in clusters running multiple services.
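To put numbers on the two examples above, here is a small Python sketch that computes the idle share of an allocation. The function name `idle_fraction` is hypothetical. The inputs are the illustrative figures from the paragraph, not measurements from a real cluster.

```python
# Sketch: share of an allocation that sits idle.
# The figures are the illustrative examples from the paragraph above,
# not measurements from a real cluster.

def idle_fraction(allocated: float, used: float) -> float:
    """Return the fraction of `allocated` that is not used, between 0.0 and 1.0."""
    if allocated <= 0:
        raise ValueError("allocated must be positive")
    return max(allocated - used, 0.0) / allocated


examples = {
    "app CPU (vCPU)": (16, 4),          # (allocated, actually used)
    "database memory (GB)": (64, 16),
}

for name, (allocated, used) in examples.items():
    print(f"{name}: {idle_fraction(allocated, used):.0%} idle")
```

In both examples, three quarters of what is paid for does no work.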

This habit of over-allocating becomes expensive over time: you’re essentially paying for cloud resources that sit idle. With smarter Kubernetes autoscaling and a deliberate focus on Kubernetes cost optimization, teams can maintain reliability without overspending.

In this blog, we’ll cover the impact of Kubernetes overprovisioning on cloud bills, how developer habits contribute to the problem, and the best Kubernetes monitoring and cost optimization tools.

Over-provisioning in Kubernetes is one of the top reasons cloud bills spiral out of control. Think of it as renting a large office building when your team could easily fit into a small coworking space: you end up paying for empty rooms you never use.

According to CAST AI’s 2024 Kubernetes Cost Benchmark report, only 13% of provisioned CPUs and 20% of memory were actually used in clusters with over 50 CPUs. That means a huge chunk of resources sits idle, yet you’re still footing the bill for all of it.
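One way to read those percentages: if only 13% of the CPU you pay for is used, then each vCPU that does real work effectively costs about 7.7 times its list price. The sketch below does that arithmetic. The function name is hypothetical, and it treats the report's figures as simple cluster-wide averages.

```python
# Sketch: if only a fraction of what you pay for is used, every *used* unit
# effectively costs 1 / utilization times its list price.

def effective_cost_multiplier(utilization: float) -> float:
    """Price paid per used unit, relative to the price per provisioned unit."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return 1 / utilization


# Utilization figures as quoted from the CAST AI report above.
for resource, utilization in [("CPU", 0.13), ("memory", 0.20)]:
    multiplier = effective_cost_multiplier(utilization)
    print(f"{resource}: {utilization:.0%} utilized -> "
          f"each used unit costs about {multiplier:.1f}x the list price")
```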

This often happens when teams set high resource requests “just in case.” Maybe you expect traffic spikes or want to play it safe, but the reality is that most workloads rarely hit those peak levels. The unused capacity doesn’t just sit there quietly; it adds up quickly on cloud bills, especially with providers like AWS, GCP, or Azure.

Idle nodes, unused storage, and underutilized pods silently drain your cloud budget month after month. The real fix lies in spotting where you’ve over-allocated and applying Kubernetes cost optimization techniques without putting reliability at risk.

Over-provisioning in Kubernetes doesn’t always stem from negligence. More often it’s the result of small, everyday decisions that add up over time. Developers usually act with caution to avoid service disruptions, and that caution often translates into allocating more resources than necessary. Add a lack of visibility into cloud bills or performance data, and it becomes easy to overspend without realizing it.

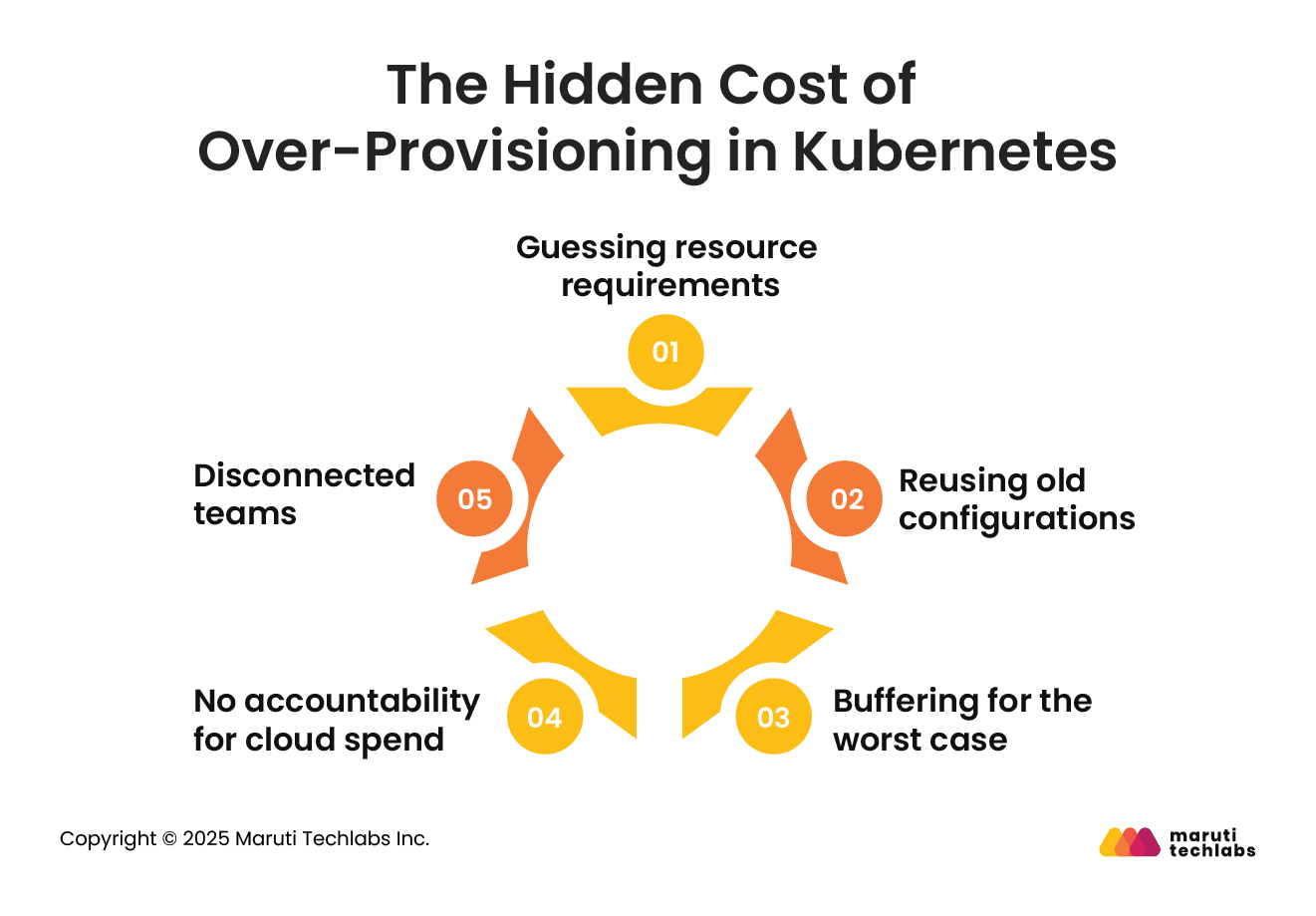

Here are some of the common habits that contribute to the problem:

Many developers don’t have access to detailed usage patterns when deploying an app. They make rough estimates for CPU and memory instead, often erring on the side of safety. These guesses may work at first, but they can easily lead to long-term waste.

In fast-paced development cycles, it's common to copy configuration files from previous services. If an older app used high limits, those limits are often applied to new services without questioning whether they’re really needed.

Developers sometimes allocate resources based on peak load expectations, even if those peaks occur rarely. This “just in case” thinking leads to overprovisioning by default, with resources sitting idle most of the time.
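To make the peak-versus-typical trade-off concrete, the sketch below compares a CPU request sized for the observed peak with one sized at the 95th percentile plus 20% headroom. The usage samples, the percentile, the headroom, and the helper names are all hypothetical. This is one common sizing heuristic, not a prescribed method.

```python
import math


def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile (pct from 1 to 100) of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request(samples: list[int], pct: int = 95, headroom_pct: int = 20) -> int:
    """Request = chosen percentile of observed usage plus a safety margin."""
    return math.ceil(percentile(samples, pct) * (100 + headroom_pct) / 100)


# Hypothetical CPU usage samples in millicores (say, one every 5 minutes):
# steady load with a single brief spike.
usage_m = [120, 130, 110, 140, 125, 135, 150, 900, 128, 132,
           118, 145, 138, 122, 127, 131, 142, 119, 136, 124]

for label, request in [("sized for peak", max(usage_m)),
                       ("sized for p95 + 20%", recommend_request(usage_m))]:
    # Average capacity reserved but not used across the sample window.
    avg_idle = sum(max(request - u, 0) for u in usage_m) / len(usage_m)
    print(f"{label}: request {request}m, average idle {avg_idle:.0f}m")
```

With these made-up samples, the peak-sized request (900m) leaves about 731m idle on average. The percentile-based request (180m) leaves about 47m idle. The catch is that the 900m spike now exceeds the request. A CPU request is not a cap, so whether the spike is absorbed depends on the CPU limit, spare node capacity, or autoscaling. That is why the percentile and headroom need to be chosen per workload.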

Beyond individual habits, organizational culture plays a significant role too:

In many teams, developers focus on shipping features, not on the cost of running them. If no one tracks how much unused CPU or memory is costing the business, it’s hard to change behavior.

In siloed environments, developers decide how much to request, while operations teams handle infrastructure and billing. This separation means ops can see the waste but can’t always change the settings, and devs don’t see the financial impact of their choices.

Fixing these issues takes more than better tooling; it starts with awareness. Teams need access to real-time usage data, visibility into cloud costs, and shared responsibility for Kubernetes cost optimization. Simple changes can go a long way toward avoiding over-provisioning without sacrificing reliability: reviewing resource limits regularly, or setting default limits based on real-world metrics.
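One built-in way to set such defaults is a LimitRange object. It fills in requests and limits for containers in a namespace that don't declare their own. The sketch below generates one as JSON, which `kubectl apply -f` accepts. The namespace `team-a`, the object name `default-resources`, and the quantities are hypothetical placeholders to be replaced with values derived from your own monitoring data.

```python
import json


def limit_range(namespace: str, default_request: dict, default_limit: dict) -> dict:
    """Build a LimitRange manifest that applies default requests and limits to
    containers in `namespace` that don't set their own."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "default-resources", "namespace": namespace},
        "spec": {
            "limits": [{
                "type": "Container",
                "defaultRequest": default_request,  # used when a container sets no request
                "default": default_limit,           # used when a container sets no limit
            }]
        },
    }


# Hypothetical values: replace them with percentiles from your own usage data.
manifest = limit_range(
    "team-a",
    default_request={"cpu": "200m", "memory": "256Mi"},
    default_limit={"cpu": "500m", "memory": "512Mi"},
)
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and running `kubectl apply -f` on it would create the object. LimitRange defaults are applied when pods are created, so existing pods keep their current settings until they are recreated.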

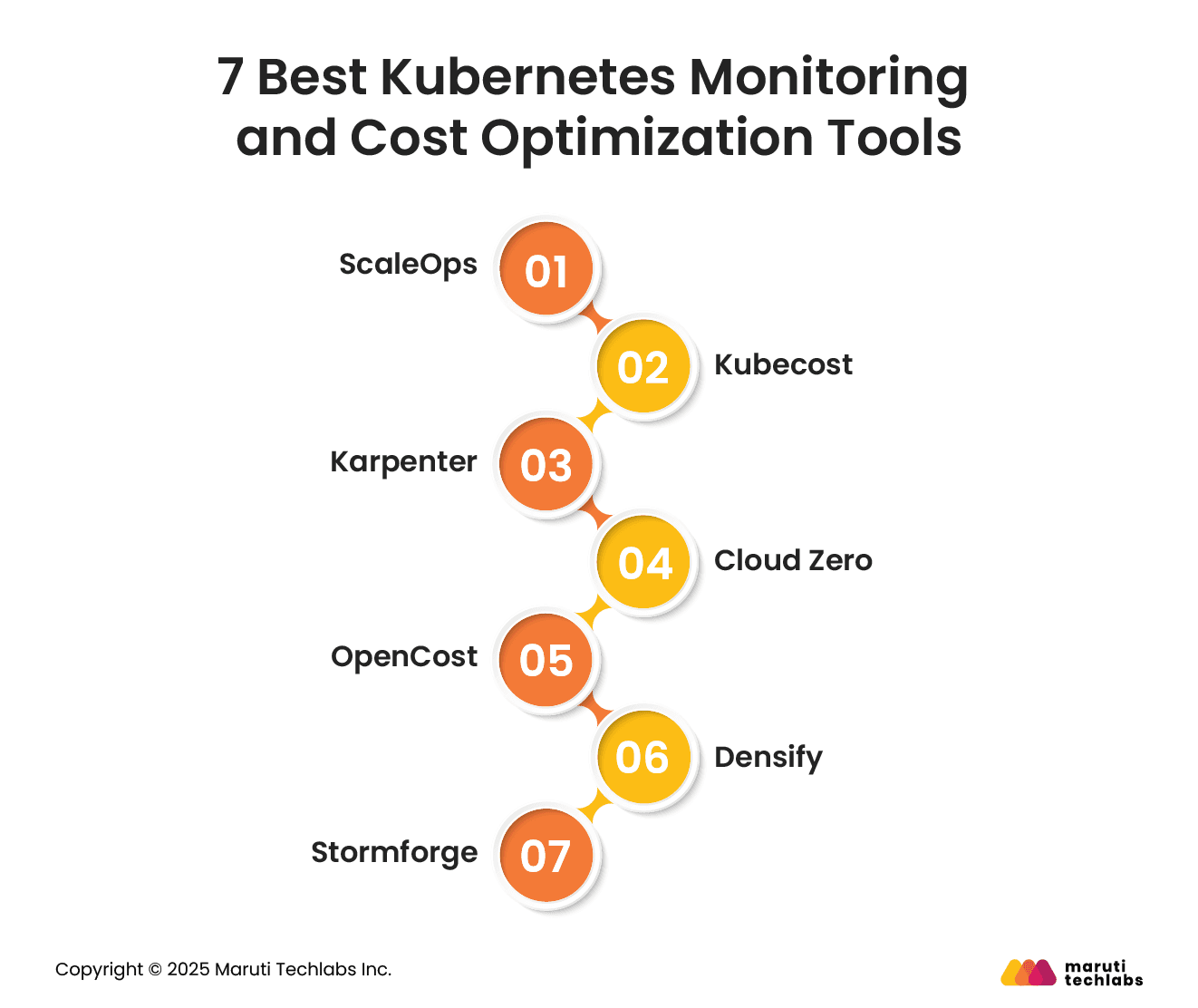

Managing Kubernetes costs while keeping performance high is a growing challenge as cloud-native environments become more complex. The right tools can help optimize resources, reduce waste, and provide deeper visibility into usage. Here are seven tools that can help you monitor and optimize your Kubernetes workloads, with a strong focus on cost optimization and intelligent autoscaling.

ScaleOps helps you save cloud costs by automatically adjusting Kubernetes resources based on what’s actually needed. It watches how your pods use resources and updates their CPU and memory settings in real time. If a pod is using less than it was given (say, 300m of CPU instead of 500m), ScaleOps lowers the allocation to match, cutting waste without slowing things down.

It also identifies under-utilized nodes and consolidates workloads to reduce the number of active nodes. Real-time analytics and alerts give teams visibility into spending patterns and allow them to act on anomalies quickly.
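Consolidation of this kind is essentially bin packing: fit the pods' requests onto as few nodes as possible. The sketch below uses first-fit decreasing, a classic heuristic, on hypothetical CPU requests. It is not ScaleOps' actual algorithm. It also ignores memory, affinity rules, PodDisruptionBudgets, and the cost of moving pods.

```python
def first_fit_decreasing(pod_requests_m: dict[str, int],
                         node_capacity_m: int) -> list[list[str]]:
    """Assign pods (by CPU request, in millicores) to identical nodes using
    first-fit decreasing. Returns one list of pod names per node used."""
    nodes: list[list[str]] = []   # pod names placed on each node
    free: list[int] = []          # remaining millicores on each node
    # Place the largest requests first; they are the hardest to fit later.
    for pod, req in sorted(pod_requests_m.items(), key=lambda kv: kv[1], reverse=True):
        if req > node_capacity_m:
            raise ValueError(f"{pod} requests {req}m, more than a whole node")
        for i, remaining in enumerate(free):
            if req <= remaining:      # first node with enough room wins
                nodes[i].append(pod)
                free[i] -= req
                break
        else:                         # no existing node fits: open a new one
            nodes.append([pod])
            free.append(node_capacity_m - req)
    return nodes


# Hypothetical workload: six pods currently spread across six 2-vCPU nodes.
pods = {"api": 900, "web": 800, "worker": 700, "cache": 600, "cron": 400, "metrics": 300}
placement = first_fit_decreasing(pods, node_capacity_m=2000)
print(f"{len(pods)} pods fit on {len(placement)} nodes: {placement}")
```

Here the 3,700m of total requests fits on two nodes instead of six. The other four could, in principle, be drained and removed.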

Kubecost offers detailed cost monitoring and resource insights for Kubernetes environments. It helps teams track the cost of different namespaces or deployments and identify underused resources that could be downsized. With built-in budgeting and alerting features, teams can set spending limits and get notified when those limits are exceeded.

Kubecost supports data-driven decision-making, helping optimize resource allocation to ensure spending is aligned with actual usage.
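Per-namespace cost reports rest on an allocation model. The simplest version splits each node's price across namespaces in proportion to their CPU requests and labels the unrequested remainder as idle. The sketch below shows only that simplified, request-based idea, with a hypothetical node price and hypothetical pods. It is not Kubecost's actual model; real cost tools use richer models that also account for memory, storage, and actual usage.

```python
from collections import defaultdict


def cost_by_namespace(node_hourly_cost: float, node_cpu_m: int,
                      pods: list[tuple[str, int]]) -> dict[str, float]:
    """Split one node's hourly cost across namespaces in proportion to CPU
    requests. Capacity that no pod requested is reported under "(idle)"."""
    cost_per_m = node_hourly_cost / node_cpu_m
    shares: dict[str, float] = defaultdict(float)
    requested = 0
    for namespace, cpu_request_m in pods:
        shares[namespace] += cpu_request_m * cost_per_m
        requested += cpu_request_m
    shares["(idle)"] = max(node_cpu_m - requested, 0) * cost_per_m
    return dict(shares)


# Hypothetical node: 4 vCPUs (4000m) at $0.40/hour, running three pods.
pods = [("checkout", 1000), ("checkout", 500), ("search", 1000)]
for namespace, cost in cost_by_namespace(0.40, 4000, pods).items():
    print(f"{namespace:>10}: ${cost:.3f}/hour")
```

Even this crude model surfaces the point of this article: the "(idle)" line is capacity that someone pays for but no team has claimed.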

Karpenter is an open-source node autoscaler originally built by AWS. It watches for pods that can’t be scheduled, launches nodes sized to fit them, and removes or consolidates nodes that are no longer needed. As a result, you’re not stuck paying for extra capacity you don’t use.

This is especially helpful when demand fluctuates frequently. Instead of overprovisioning or running into shortages, Karpenter automatically scales things up or down to keep performance smooth and costs under control.

CloudZero provides unified cloud cost visibility across multiple providers, including Kubernetes environments. It delivers real-time recommendations based on actual usage patterns and helps identify inefficient spending areas.

Teams managing large-scale or multi-cloud deployments benefit from CloudZero’s ability to break down costs by team, project, or application. It enables better budgeting, collaboration, and decision-making across departments, reducing surprises in cloud bills.

OpenCost is an open-source solution that brings transparency to Kubernetes resource costs. It integrates directly with your cluster to show how much is being spent on specific workloads. Ideal for teams that want cost control without adopting a proprietary solution, OpenCost offers customizable metrics and dashboards to track and manage Kubernetes spending efficiently.

Densify uses intelligent analytics to optimize Kubernetes resources by recommending changes to cluster configurations, pod sizing, and workload placement. It helps reduce costs while improving application performance.

Particularly suited for complex cloud environments, Densify continuously evaluates workloads and provides actionable insights to ensure the infrastructure matches demand.

StormForge leverages machine learning to optimize Kubernetes application performance and resource usage. It runs experiments on different configurations to find the most efficient setup for your applications. This proactive approach is ideal for teams dealing with diverse workloads and performance bottlenecks.

By applying StormForge’s recommendations, organizations can reduce cloud spend and improve reliability without manual tuning.
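StormForge's machine-learning search is its own. The final step of any experiment-driven tuning, though, looks roughly like this: among the configurations tried, keep those that meet the performance target and pick the cheapest. The sketch below shows only that selection step. The trial results, the 300 ms latency target, and the per-vCPU and per-GiB prices are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical prices; substitute your provider's actual rates.
CPU_PRICE_PER_VCPU_HOUR = 0.04
MEM_PRICE_PER_GIB_HOUR = 0.005


@dataclass(frozen=True)
class Trial:
    cpu_m: int             # CPU request per replica, millicores
    memory_mi: int         # memory request per replica, MiB
    replicas: int
    p95_latency_ms: float  # measured during the experiment

    def hourly_cost(self) -> float:
        per_replica = ((self.cpu_m / 1000) * CPU_PRICE_PER_VCPU_HOUR
                       + (self.memory_mi / 1024) * MEM_PRICE_PER_GIB_HOUR)
        return self.replicas * per_replica


def cheapest_within_slo(trials: list[Trial], slo_ms: float) -> Optional[Trial]:
    """Return the lowest-cost trial whose p95 latency meets the target, if any."""
    passing = [t for t in trials if t.p95_latency_ms <= slo_ms]
    return min(passing, key=Trial.hourly_cost, default=None)


trials = [
    Trial(cpu_m=1000, memory_mi=1024, replicas=2, p95_latency_ms=180),
    Trial(cpu_m=500, memory_mi=512, replicas=3, p95_latency_ms=240),
    Trial(cpu_m=500, memory_mi=1024, replicas=2, p95_latency_ms=420),
    Trial(cpu_m=250, memory_mi=512, replicas=4, p95_latency_ms=310),
]
best = cheapest_within_slo(trials, slo_ms=300)
print(best, f"${best.hourly_cost():.4f}/hour")
```

With these numbers, three smaller replicas beat two larger ones on cost while staying under the latency target. That kind of result is hard to guess without running the experiment.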

Each of these tools supports a smarter, more cost-effective way to run Kubernetes environments, helping you strike the right balance between performance and budget.

Kubernetes gives teams powerful control over their infrastructure, but without regular checks, it’s easy to end up reserving, and paying for, far more than you need. Extra resources often go unnoticed until the cloud bill arrives; by then, the waste has already added up. What starts as a cautious move often turns into long-term overspending.

Continuous monitoring is key to keeping things efficient. When teams track actual usage and understand how their apps perform, they can confidently fine-tune resource settings.

Pairing this visibility with smart Kubernetes autoscaling tools and a shared focus on Kubernetes cost optimization helps keep both performance and budgets in check. But tools alone aren’t enough. Developers, operations teams, and business leaders all need to understand how their choices impact cloud costs and how small changes can lead to big savings over time.

At Maruti Techlabs, we help businesses build and manage Kubernetes environments correctly. From Kubernetes autoscaling strategies to right-sizing workloads for Kubernetes cost optimization, our DevOps consulting services support you at every step of your container orchestration journey. Contact us to explore how you can adopt Kubernetes with better visibility, performance, and control, without the hidden costs.

Provisioning in Kubernetes refers to the process of allocating CPU, memory, and storage resources to applications running inside containers. It ensures each workload gets the resources it needs for stable performance.

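Kubernetes uses resource requests and limits to define these allocations. A request is the amount the scheduler reserves for a container when placing it on a node. A limit is the maximum the container may consume at runtime. Proper provisioning avoids both underperformance and over-provisioning, helping teams manage costs and maintain high availability within the cluster.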
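Requests and limits are written as Kubernetes quantity strings such as `250m` (a quarter of a CPU core) or `64Mi` (64 mebibytes). To illustrate how those units translate into plain numbers, here is a small parser. It is a hypothetical helper covering the common suffixes, not the official Kubernetes implementation, and the example values are illustrative.

```python
from decimal import Decimal

# Binary suffixes (powers of 1024) and decimal suffixes (powers of 1000).
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
_DECIMAL = {"m": Decimal("0.001"), "k": 10**3, "M": 10**6, "G": 10**9,
            "T": 10**12, "P": 10**15, "E": 10**18}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity such as '500m', '2', or '16Gi' to a plain number:
    cores for CPU, bytes for memory. Covers the common suffixes only."""
    q = q.strip()
    for suffix, factor in _BINARY.items():
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * factor
    if q and q[-1] in _DECIMAL:
        return Decimal(q[:-1]) * _DECIMAL[q[-1]]
    return Decimal(q)  # no suffix: a plain number of cores or bytes


# Illustrative container resources.
resources = {
    "requests": {"cpu": "250m", "memory": "64Mi"},
    "limits": {"cpu": "500m", "memory": "128Mi"},
}
for kind, values in resources.items():
    cores = parse_quantity(values["cpu"])
    mib = parse_quantity(values["memory"]) / 2**20
    print(f"{kind}: {cores} cores, {mib} MiB")
```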

Provisioning a Kubernetes cluster involves setting up the control plane components and the worker nodes that host containers. You can do this manually with tools like kubeadm, or use a managed service such as GKE, EKS, or AKS, where the cloud provider runs the control plane for you.

Provisioning includes defining node configurations, networking, storage, and authentication settings. It’s also essential to configure autoscaling and resource limits to optimize workload performance and avoid unnecessary cloud expenses.

The cheapest way to run Kubernetes is by combining Kubernetes cost optimization practices with efficient infrastructure planning. This includes right-sizing workloads, enabling Kubernetes autoscaling, using spot or reserved instances, and eliminating idle or overprovisioned resources.

Managed services like GKE Autopilot or EKS Fargate can further reduce overhead. Continuous monitoring also plays a vital role in spotting inefficiencies and helping you make cost-effective adjustments over time.
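To see how purchasing options combine, the sketch below computes a blended monthly bill for a node pool split across on-demand, reserved, and spot capacity. Every price, discount, and share in it is a hypothetical placeholder. Actual discounts vary by provider, region, instance type, and commitment term. Spot capacity can also be reclaimed at short notice, so it suits fault-tolerant workloads.

```python
HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12)


def blended_monthly_cost(on_demand_hourly: float, nodes: int,
                         mix: dict[str, tuple[float, float]]) -> float:
    """`mix` maps a purchase option to (share of nodes, discount vs. on-demand)."""
    total_share = sum(share for share, _ in mix.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("node shares must add up to 1")
    rate_factor = sum(share * (1 - discount) for share, discount in mix.values())
    return on_demand_hourly * nodes * HOURS_PER_MONTH * rate_factor


# Hypothetical prices and discounts for a 10-node pool.
all_on_demand = blended_monthly_cost(0.20, 10, {"on-demand": (1.0, 0.0)})
mixed = blended_monthly_cost(0.20, 10, {
    "on-demand": (0.2, 0.0),
    "reserved": (0.5, 0.4),   # placeholder discount for a commitment
    "spot": (0.3, 0.7),       # placeholder discount for interruptible capacity
})
print(f"all on-demand: ${all_on_demand:,.2f}/month")
print(f"mixed:         ${mixed:,.2f}/month")
```

With these placeholder numbers, the mixed pool costs 59% of the all-on-demand pool: $861.40 versus $1,460.00 per month. Right-sizing and eliminating idle nodes still matter, because a discount on unused capacity is still money spent on nothing.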

Kubernetes manages resources through a control plane that schedules workloads across a cluster of nodes. Each application runs in one or more pods. The resource requests and limits set on each container define how much CPU and memory it can reserve and use.
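A key detail for cost: when placing a pod, the scheduler compares the pod's requests with each node's allocatable capacity minus the requests of pods already on that node. Actual usage does not enter into this check. That is why inflated requests cost money even when nodes look idle on a usage graph: they block scheduling and force extra nodes. The sketch below models only that CPU and memory fit check. The node names and numbers are hypothetical, and the real scheduler applies many more filters and scoring steps.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int
    allocatable_mem_mi: int
    requested_cpu_m: int     # sum of requests of pods already on the node
    requested_mem_mi: int


def feasible_nodes(nodes: list[Node], pod_cpu_m: int, pod_mem_mi: int) -> list[str]:
    """Names of nodes whose unreserved capacity can hold the pod's *requests*.
    Actual usage is deliberately ignored."""
    return [
        n.name for n in nodes
        if n.requested_cpu_m + pod_cpu_m <= n.allocatable_cpu_m
        and n.requested_mem_mi + pod_mem_mi <= n.allocatable_mem_mi
    ]


# Hypothetical nodes, each with 4 vCPUs and 8 GiB allocatable.
nodes = [
    Node("node-a", 4000, 8192, requested_cpu_m=3500, requested_mem_mi=2048),
    Node("node-b", 4000, 8192, requested_cpu_m=1000, requested_mem_mi=7800),
    Node("node-c", 4000, 8192, requested_cpu_m=2000, requested_mem_mi=4096),
]
print(feasible_nodes(nodes, pod_cpu_m=500, pod_mem_mi=512))
```

Here node-b is rejected because its memory is almost fully requested, even if those pods actually use very little.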

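Kubernetes then works continuously to keep the cluster in its desired state. Controllers replace failed pods, the kubelet can evict pods when a node runs short of memory or disk, and autoscalers can add replicas or nodes as demand changes. This approach helps keep resource usage efficient, avoids overloading nodes, and supports reliable performance, even in environments with thousands of running containers.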
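For pod-level autoscaling, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). It skips scaling when the ratio is within a tolerance, 0.1 by default. The sketch below implements just that rule. It leaves out the min/max replica bounds, stabilization windows, and handling of not-ready pods that the real controller applies. The function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: scale replicas by the ratio of observed to target
    metric, but leave the count unchanged when the ratio is within tolerance."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 3 replicas averaging 30% CPU against a 60% target -> scale in.
print(desired_replicas(3, current_metric=30, target_metric=60))
```

Note that the utilization percentages the HPA works with are measured relative to each pod's CPU *request*. Inflated requests therefore also make workloads look less busy than they are and delay scale-out.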